Enterprise AI is no longer limited by model performance. The real challenge today is providing models with the right context at the right time. As LLMs like ChatGPT, Claude, or Gemini expand into production environments, they often operate with limited visibility into the systems they support. Without access to the current state, live tools, or structured data, even the most capable models can produce inconsistent or incomplete results.

The Model Context Protocol (MCP), introduced by Anthropic, is designed to close that gap. It offers a standardised way for AI applications to access external tools, APIs, and services. MCP allows models to retrieve and use contextual information through a shared, governed interface.

MCP uses a client-server architecture. Clients, such as AI-powered agents, request actions or data from servers, which expose tools and data in a structured way. This separation keeps systems modular, scalable, and easier to govern.

Now, let’s break down how MCP works, where it fits into modern architecture, and what enterprise teams should evaluate before adopting it.

What is Model Context Protocol (MCP)?

Model Context Protocol (MCP) enables secure two-way communication between AI systems and data sources. Developers can host data on MCP servers or build AI clients to connect with them. Operating at the system interface layer, MCP replaces tightly coupled integrations by routing requests dynamically. It governs data structure, sharing criteria, and access conditions, enhancing modularity, reducing redundancy, and simplifying scalable cross-system AI interactions.

The origins and development of MCP

Anthropic introduced the Model Context Protocol (MCP) in November 2024 as an open standard for connecting AI assistants to real systems. At that time, even advanced models struggled to access tools or data beyond static prompts. MCP was built to fix that gap with a structured and reusable interface.

Before MCP, teams built one-off connectors between every model and system, which Anthropic called the “N×M” problem. Stopgap solutions like plugins or function-calling APIs helped, but only in platform-specific ways. MCP offered a consistent protocol for exposing tools and exchanging context across systems.

Key design principles and protocol foundations

Model Context Protocol was built to support interoperability, modularity, and runtime context exchange across AI ecosystems. It’s structured to be platform-neutral and easy to adopt.

- Uses JSON-RPC 2.0 as its message format, carried over transports such as stdio or HTTP, to maintain consistency across environments.

- Reuses message flow logic from the Language Server Protocol (LSP).

- Supports bidirectional communication between assistants and tools.

- SDKs are provided in Python, TypeScript, Java, and C#.

- Designed for structured access to external tools, not just passive context retrieval.

- Keeps permission, visibility, and state management within the protocol itself.
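To make the JSON-RPC 2.0 framing concrete, here is a minimal sketch that builds a request and its matching response as plain Python dictionaries. The `tools/call` method and its `name` and `arguments` parameters follow the MCP specification as generally documented. The tool name `get_invoice`, its arguments, and the helper functions are hypothetical, and the result shape is simplified for illustration.

```python
import json
from itertools import count

_ids = count(1)  # JSON-RPC pairs each response with its request through the id


def make_request(method: str, params: dict) -> dict:
    """Build a JSON-RPC 2.0 request object."""
    return {"jsonrpc": "2.0", "id": next(_ids), "method": method, "params": params}


def make_result(request: dict, result: dict) -> dict:
    """Build the JSON-RPC 2.0 response that answers `request`."""
    return {"jsonrpc": "2.0", "id": request["id"], "result": result}


# Hypothetical tool name and arguments, for illustration only.
request = make_request(
    "tools/call",
    {"name": "get_invoice", "arguments": {"invoice_id": "INV-1001"}},
)
response = make_result(
    request,
    {"content": [{"type": "text", "text": "Invoice INV-1001: paid"}]},
)

wire_request = json.dumps(request)    # what a client would serialise and send
wire_response = json.dumps(response)  # what a server would send back
```

Because both sides agree on this envelope, a client can match replies to requests by `id` regardless of which transport carries the bytes.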

Why is MCP crucial in AI integration?

Model Context Protocol (MCP) is important in AI integration because it simplifies how AI models interact with external data, tools, and services. It provides a standardised way for AI to access context and makes integrations easier, more flexible, and secure.

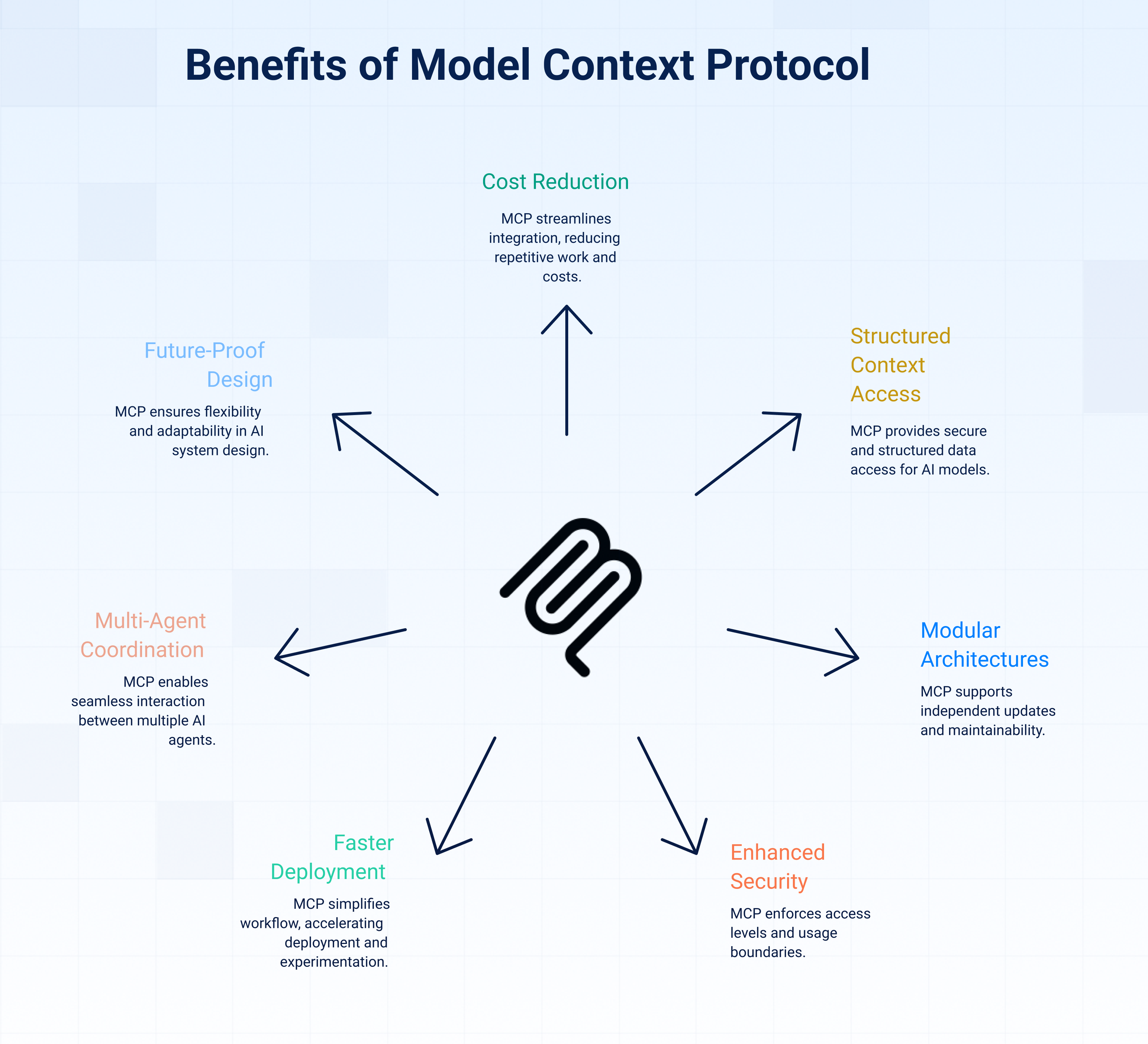

Here are the reasons why MCP is important:

1. Cuts operational costs and duplication

MCP directly lowers operational costs by cutting out repetitive integration work. Instead of building custom connectors for every model-tool pair, developers implement a single Model Context Protocol interface that can serve multiple assistants. This reuse leads to faster deployment cycles, leaner engineering demands, and a stronger return on investment.

2. Gives models structured access to context

Without access to state or system context, even the best models operate in isolation. MCP turns context into a first-class input. Instead of prompt injections or hacks, assistants can request structured data or functions through a secure interface. That makes behaviour more predictable and aligned to the task.

3. Makes architectures modular

MCP is built on a clean client-server model, which separates orchestration from execution. Tools can be added or updated independently, without breaking model logic. This kind of modularity supports long-term maintainability and makes system evolution easier as needs change.

4. Strengthens security and governance

Every request routed through Model Context Protocol carries structure and permissions. Enterprises can define access levels, usage boundaries, and execution rules. That reduces exposure and helps AI systems operate safely in regulated environments.

5. Speeds up deployment and experimentation

With SDKs in Python, TypeScript, Java, and C#, MCP is accessible to most engineering teams out of the box. It removes low-level protocol work, so teams can focus on building workflows, not wiring up systems. Faster iteration means better outcomes.

6. Supports multi-agent and tool coordination

As architectures move toward agent-based systems, shared access becomes critical. MCP allows multiple agents to interact with the same toolset, without stepping on each other’s state or triggering conflicts. That coordination layer helps larger systems behave more like unified applications.

7. Future-proofs AI system design

Because Model Context Protocol is open and transport-agnostic, teams aren’t locked into any model or vendor. It uses JSON-RPC 2.0 and follows a design pattern inspired by the Language Server Protocol. That makes switching models or evolving the stack easier, with far less integration code to rewrite.

What are the core components of MCP?

MCP is built on a client-server architecture that defines how AI systems interact with tools and services in a structured, permission-aware way. Each component plays a focused role, and together, they form a modular system that scales across use cases and environments.

Here are the key components of MCP:

1. Host

The Host is the user-facing application where interaction begins. It could be a chat interface, a developer tool, or a domain-specific app. Its core role is to manage user inputs, initiate requests to MCP Servers, and coordinate outputs.

Hosts also handle permissions and overall flow. For example, tools like Claude Desktop or Cursor act as Hosts, letting users engage with LLMs while triggering tools through MCP. Other Hosts may be purpose-built apps using libraries like LangChain or smolagents.

2. Client

The Client lives inside the Host and acts as a protocol-aware bridge to a specific MCP Server. Each Client maintains a one-to-one connection with one Server. Its job is to handle the transport logic, structure requests, manage session state, and interpret server responses.

Clients abstract away the low-level communication, allowing Hosts to work with remote tools through a consistent interface. This modularity keeps the logic inside Hosts clean and reusable, even as the number of tools or servers grows.

3. Server

The Server is the tool-facing side of Model Context Protocol. It exposes services, data, or functions in a way that Clients can discover, call, and interpret. Servers can wrap local scripts, external APIs, or cloud services, and they don’t need to know anything about the AI system using them.

MCP Servers register their capabilities in a standardized format, enabling Clients to navigate and use them securely. They can be deployed locally or remotely, depending on infrastructure needs.
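The relationship between the three components can be sketched with a toy in-memory model. This is not the MCP SDK and involves no real transport; the class names `ToyHost`, `ToyClient`, and `ToyServer`, and the example tools, are hypothetical and exist only to show the one-client-per-server wiring described above.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ToyServer:
    """Exposes named tools; knows nothing about the model calling them."""
    name: str
    tools: dict[str, Callable[..., str]] = field(default_factory=dict)

    def list_tools(self) -> list[str]:
        return sorted(self.tools)

    def call_tool(self, tool: str, **arguments) -> str:
        if tool not in self.tools:
            raise KeyError(f"unknown tool: {tool}")
        return self.tools[tool](**arguments)


@dataclass
class ToyClient:
    """Bridges the host to exactly one server."""
    server: ToyServer

    def discover(self) -> list[str]:
        return self.server.list_tools()

    def invoke(self, tool: str, **arguments) -> str:
        return self.server.call_tool(tool, **arguments)


@dataclass
class ToyHost:
    """Owns one client per connected server and routes calls to it."""
    clients: dict[str, ToyClient] = field(default_factory=dict)

    def connect(self, server: ToyServer) -> None:
        self.clients[server.name] = ToyClient(server)

    def run(self, server_name: str, tool: str, **arguments) -> str:
        return self.clients[server_name].invoke(tool, **arguments)


host = ToyHost()
host.connect(ToyServer("calendar", {"next_event": lambda user: f"{user}: standup at 09:30"}))
host.connect(ToyServer("files", {"count_files": lambda folder: f"{folder}: 3 files"}))
result = host.run("calendar", "next_event", user="ana")
```

Adding a third server here means one more `connect` call; the host logic and the existing servers stay untouched, which is the modularity the architecture is aiming for.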

How do you implement MCP in your systems?

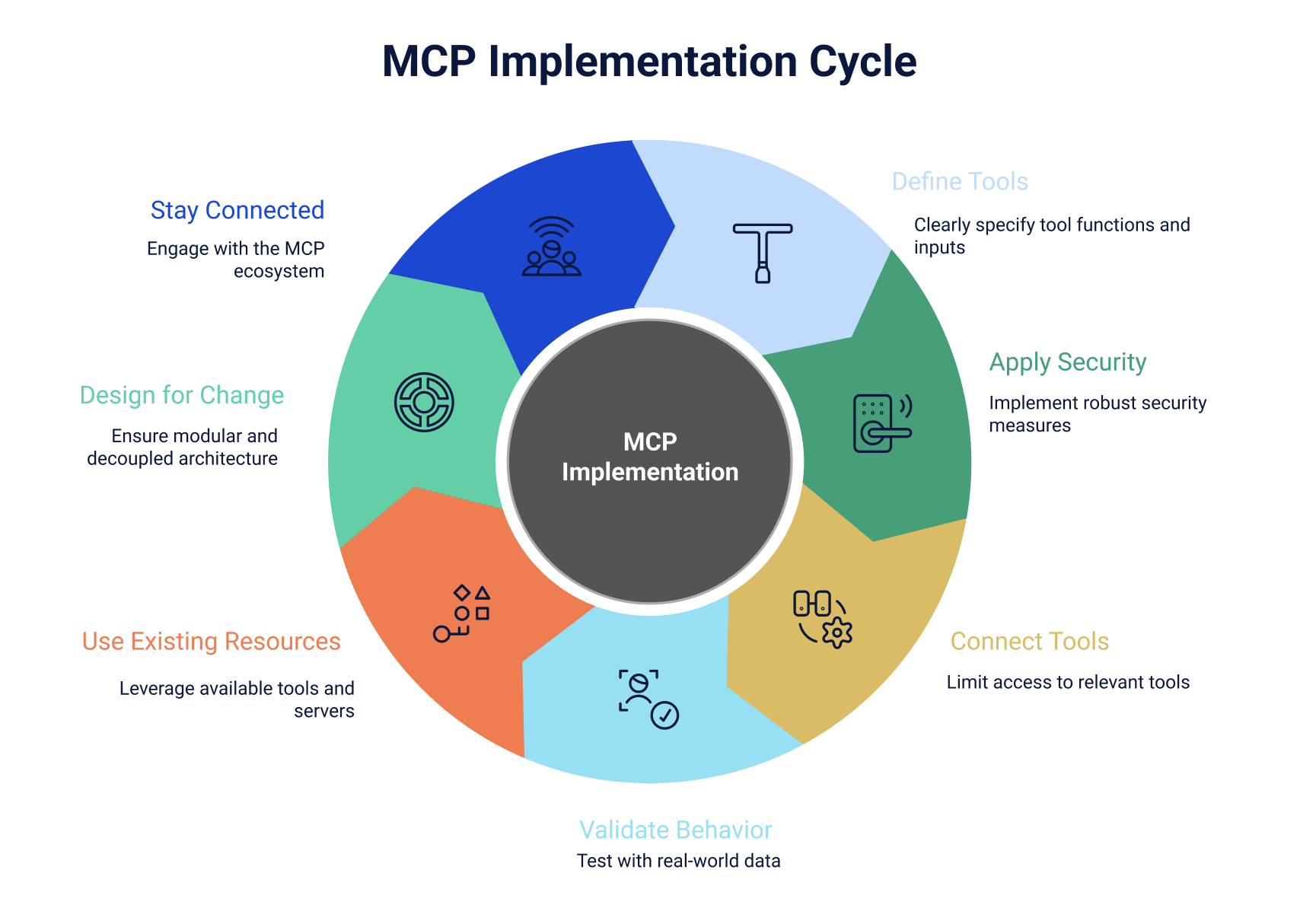

To implement MCP, teams should prioritise clarity in tool design, strict security, and modular architecture. These elements ensure stable and scalable integration across different AI environments.

Here’s how to approach MCP implementation with confidence:

1. Define tools with clarity and constraints

Each MCP tool should describe its exact function, expected inputs, and outputs in a structured form. Use clear naming, typed arguments, and schemas like JSON Schema to define how your tools behave. When you scope definitions precisely, models can generate valid requests and avoid failures caused by vague or incomplete arguments.
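As an illustration, a tool definition might pair a name and description with a JSON Schema for its inputs. The `name`, `description`, and `inputSchema` layout mirrors how MCP tool listings are generally documented; the `search_tickets` tool and the `validate_arguments` helper are hypothetical. The validator is deliberately small: it checks only `required`, `type`, and `enum`, and it rejects unknown keys, which is stricter than JSON Schema's default.

```python
# Hypothetical tool definition, for illustration only.
SEARCH_TICKETS_TOOL = {
    "name": "search_tickets",
    "description": "Search support tickets by status, newest first.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "status": {"type": "string", "enum": ["open", "pending", "closed"]},
            "limit": {"type": "integer"},
        },
        "required": ["status"],
    },
}

_PY_TYPES = {"string": str, "integer": int, "boolean": bool}


def validate_arguments(schema: dict, arguments: dict) -> list[str]:
    """Return a list of problems; an empty list means the arguments pass."""
    errors = []
    properties = schema.get("properties", {})
    for key in schema.get("required", []):
        if key not in arguments:
            errors.append(f"missing required argument: {key}")
    for key, value in arguments.items():
        if key not in properties:
            errors.append(f"unexpected argument: {key}")
            continue
        spec = properties[key]
        expected = _PY_TYPES.get(spec.get("type"))
        # bool is a subclass of int in Python, so reject it explicitly for integers
        wrong_type = expected is not None and (
            not isinstance(value, expected)
            or (expected is int and isinstance(value, bool))
        )
        if wrong_type:
            errors.append(f"{key} must be of type {spec['type']}")
        elif "enum" in spec and value not in spec["enum"]:
            errors.append(f"{key} must be one of {spec['enum']}")
    return errors


ok = validate_arguments(SEARCH_TICKETS_TOOL["inputSchema"], {"status": "open", "limit": 5})
bad = validate_arguments(SEARCH_TICKETS_TOOL["inputSchema"], {"status": "archived", "limit": "5"})
```

In a production setting a full JSON Schema validator would likely replace the hand-rolled checks; the point is that a tight schema lets invalid calls fail before they reach the underlying system.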

2. Apply security controls by default

Use OAuth 2.1 to authenticate users, and apply least-privilege access to every available tool. Avoid hard-coded tokens and store secrets outside your codebase. This approach limits unintended actions and gives you clear visibility into how models interact with sensitive systems.
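One common way to keep secrets out of the codebase is to read them from the environment at startup and fail loudly when they are missing. The sketch below assumes that approach; the variable name `CRM_API_TOKEN` and the `load_secret` helper are hypothetical, and a dedicated secrets manager would serve the same purpose.

```python
import os


class MissingSecretError(RuntimeError):
    """Raised when a required secret is absent from the environment."""


def load_secret(name: str, env=os.environ) -> str:
    """Fetch a secret by name, refusing to continue if it is missing or blank."""
    value = env.get(name, "").strip()
    if not value:
        raise MissingSecretError(f"environment variable {name} is not set")
    return value


# Demo with an explicit mapping; in a deployment the default os.environ is used.
token = load_secret("CRM_API_TOKEN", env={"CRM_API_TOKEN": "example-value"})
```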

3. Connect only what’s relevant

Don't expose every tool by default. Instead, match tools to the user’s current task or system role. By limiting access based on intent, you narrow the model’s decision space, avoid invalid calls, and make it easier for your team to trace and debug requests.
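A simple way to picture this is a catalog that records which roles may see each tool, filtered before the tool list ever reaches the model. The catalog contents and the `tools_for_role` helper below are hypothetical.

```python
# Hypothetical tool catalog: each tool lists the roles allowed to see it.
TOOL_CATALOG = {
    "read_ticket": {"roles": {"support", "admin"}},
    "refund_order": {"roles": {"billing", "admin"}},
    "delete_account": {"roles": {"admin"}},
}


def tools_for_role(role: str, catalog: dict = TOOL_CATALOG) -> list[str]:
    """Return only the tool names that the given role is allowed to use."""
    return sorted(name for name, meta in catalog.items() if role in meta["roles"])


support_tools = tools_for_role("support")
admin_tools = tools_for_role("admin")
```

A support session built this way never sees `refund_order` or `delete_account`, so the model cannot select them by mistake.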

4. Validate behaviour with real context

If you're testing integrations, use data snapshots that simulate real operational states. Avoid relying on mock responses alone. Testing with full workflows helps you identify unexpected behaviours and confirms that tool responses align with the model’s interpretation of context.

5. Use what already exists

You don’t need to build everything from scratch. Anthropic and the open-source community provide ready-made Model Context Protocol servers for platforms like Slack and Google Drive. These are already compatible with core SDKs, and using them can help you move faster with fewer protocol-level issues.

6. Design for change and growth

Structure your implementation so that communication logic, tool definitions, and orchestration remain decoupled. As MCP evolves, you’ll want to isolate the parts of your system that may require updates. This reduces rework and makes your stack easier to maintain over time.

7. Stay connected with the ecosystem

Follow MCP development through GitHub, changelogs, and discussions around upcoming changes. Staying active in the ecosystem gives you visibility into compatibility shifts and lets you contribute insights from your own deployments. The more you engage, the more resilient your implementation becomes.

What security measures should be considered in MCP?

Giving AI models access to tools and services through the Model Context Protocol changes how trust, context, and permissions are managed. This convenience introduces new attack surfaces that require deliberate security planning.

Below are five high-priority threats and the specific security measures that can help contain them:

1. Token theft and account takeover

Stored OAuth tokens can be reused without triggering standard login warnings. If stolen, they let an attacker impersonate the user and keep access for as long as the token lives. Password changes often don’t help, because OAuth tokens remain valid until they are revoked or expire under system policy.

Key Security Measures:

- Store tokens in encrypted, isolated environments

- Use short token lifespans with automatic expiration

- Revoke tokens on logout, reset, or anomaly detection

- Monitor activity by IP, location, and behavior patterns
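The expiry and revocation measures above can be sketched as a small token store. This is an in-memory illustration only: the `TokenStore` class and its 15-minute default lifetime are assumptions, and it does not cover encrypted storage or anomaly detection.

```python
import secrets
import time


class TokenStore:
    """Issues opaque tokens that expire automatically and can be revoked."""

    def __init__(self, ttl_seconds: float = 900.0, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock  # injectable so expiry can be tested without sleeping
        self._expiry: dict[str, float] = {}

    def issue(self) -> str:
        token = secrets.token_urlsafe(32)
        self._expiry[token] = self._clock() + self._ttl
        return token

    def is_valid(self, token: str) -> bool:
        expires_at = self._expiry.get(token)
        if expires_at is None:
            return False  # never issued, or already revoked
        if self._clock() >= expires_at:
            del self._expiry[token]  # drop expired tokens eagerly
            return False
        return True

    def revoke(self, token: str) -> None:
        """Call on logout, password reset, or when an anomaly is detected."""
        self._expiry.pop(token, None)
```

With a short lifetime, a stolen token stops working on its own, and `revoke` closes the window immediately when something looks wrong.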

2. MCP server compromise

Model Context Protocol servers often hold credentials for multiple systems. If one is compromised, attackers can reach every linked tool, including email, calendars, and storage, without needing to breach each one individually. This single point of entry increases the blast radius of any breach.

Key Security Measures:

- Containerise and isolate each server deployment

- Enforce network restrictions with tight inbound rules

- Apply least-privilege scopes to every integration

- Enable logging and alerts for privileged operations

3. Prompt injection attacks

AI assistants interpret natural language to trigger tools. Attackers can insert hidden commands in regular-looking text. The model may read the message, misinterpret the intent, and take action without the user realising it. This breaks traditional boundaries between viewing and executing.

Key Security Measures:

- Sanitise and validate input before processing

- Limit which model outputs can invoke the tools

- Require confirmation for any sensitive or persistent operation

- Train users not to share untrusted or unclear content
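The confirmation measure can be sketched as a gate that sits between the model's requested tool call and its execution. The tool names in `SENSITIVE_TOOLS` and the `guarded_call` helper are hypothetical; `confirm` stands in for whatever prompt the host shows the user.

```python
from typing import Callable

# Hypothetical names of tools that change or send data.
SENSITIVE_TOOLS = {"send_email", "delete_file"}


def guarded_call(
    tool: str,
    arguments: dict,
    execute: Callable[[str, dict], str],
    confirm: Callable[[str, dict], bool],
) -> str:
    """Run a tool, but ask the user first when the tool is marked sensitive."""
    if tool in SENSITIVE_TOOLS and not confirm(tool, arguments):
        return f"blocked: {tool} requires user confirmation"
    return execute(tool, arguments)
```

Even if injected text persuades the model to request `delete_file`, the call does not run until a person approves it, which restores the boundary between viewing content and acting on it.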

4. Excessive permission scope

MCP integrations often ask for full access to simplify development. That becomes a liability during token loss. If a tool only needs read access but has full write privileges, even a minor compromise can lead to serious damage across connected systems.

Key Security Measures:

- Limit OAuth scopes tightly to the task

- Avoid granting edit or delete access by default

- Separate privilege levels by role and integration

- Review permissions during regular audits or refactors
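A scope check along these lines can run before every tool call. The scope names (`docs.read`, `docs.write`), the tool names, and both helpers are hypothetical; real scope strings depend on the provider behind each integration.

```python
# Hypothetical mapping from tool name to the OAuth scopes it needs.
REQUIRED_SCOPES = {
    "read_document": {"docs.read"},
    "update_document": {"docs.read", "docs.write"},
}


def missing_scopes(tool: str, granted: set[str]) -> set[str]:
    """Return the scopes the tool needs that the token does not carry."""
    return REQUIRED_SCOPES[tool] - granted


def is_authorized(tool: str, granted: set[str]) -> bool:
    """A call is allowed only when no required scope is missing."""
    return not missing_scopes(tool, granted)


read_only_token = {"docs.read"}
can_read = is_authorized("read_document", read_only_token)
can_write = is_authorized("update_document", read_only_token)
```

A read-only token passes the first check and fails the second, so a leaked token for a reporting integration cannot be used to modify documents.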

5. Cross-service data aggregation

When multiple services connect to a single model, that model can access and correlate information across domains. This unified view enables profiling, behavioural inference, or surveillance even if only one token is compromised. It amplifies the risk of what looks like limited access.

Key Security Measures:

- Segment tokens across use cases and endpoints

- Require consent before combining multiple data sources

- Monitor which services are queried together

- Avoid unnecessary context sharing unless functionally required
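Monitoring which services are queried together can start with a simple co-occurrence count over session logs. The session data and the `co_query_counts` helper below are hypothetical; a real deployment would read from its own audit trail.

```python
from collections import Counter
from itertools import combinations


def co_query_counts(sessions: list[set[str]]) -> Counter:
    """Count how often each pair of services appears in the same session."""
    counts: Counter = Counter()
    for services in sessions:
        # sort so that each pair is always recorded in the same order
        for pair in combinations(sorted(services), 2):
            counts[pair] += 1
    return counts


# Hypothetical session log: the set of services touched in each session.
sessions = [
    {"email", "calendar"},
    {"email", "calendar", "drive"},
    {"drive"},
]
pair_counts = co_query_counts(sessions)
```

Pairs that show up often, or that suddenly appear for the first time, are candidates for a consent prompt or a closer review.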

Adopt MCP safely with Digital API

As enterprises embed AI into operations, the need for secure and scalable context delivery becomes urgent. Model Context Protocol (MCP) creates a standard interface between AI systems and external tools, simplifying connections and reducing integration complexity.

Digital API strengthens this foundation by embedding security into every layer of implementation. It ensures AI systems operate within clear access boundaries, structured token management, and traceable execution flows. With Digital API, deployment practices stay aligned with compliance and governance expectations from day one.

See Digital API in action and deploy MCP with clarity, control, and confidence.

Frequently Asked Questions

Is MCP Compatible With All AI Models?

Yes, MCP is designed to be model-agnostic. It works with any AI model whose host application implements an MCP client and can pass tool schemas to the model. This includes general-purpose LLMs like Claude and ChatGPT, as well as custom models built for specific workflows. Compatibility depends on the client setup, not the model’s architecture.

Where Can I Find Resources To Implement MCP?

You can find official MCP resources at modelcontextprotocol.io. The site includes SDKs for Python, TypeScript, Java, and C#, along with integration guides, example servers, and documentation. Community contributions and Anthropic’s GitHub repositories offer additional reference implementations for production use.

Are There Any Costs Associated With Using MCP?

No, there are no licensing fees for using MCP. The protocol is open and freely available. However, costs may arise from building and maintaining infrastructure, securing tokens, or customising tools to fit enterprise systems. These depend on how you structure and scale your MCP implementation internally.

