Integrating LLMs with enterprise APIs unlocks powerful automation and intelligence, but it also demands an entirely new layer of security, governance, and visibility. Unlike traditional clients, LLMs generate unpredictable requests that can bypass safeguards or expose sensitive data if unmanaged.

DigitalAPI bridges this gap with an AI-native API management platform purpose-built for the LLM era, offering secure mediation, monitoring, and policy enforcement between your APIs and AI agents.


Large language models (LLMs) are changing how we build applications, unlocking new ways to automate tasks, interpret data, and interact with APIs using natural language. But giving LLMs access to your APIs isn’t as simple as flipping a switch. It introduces a new layer of complexity around security, governance, and control.

Unlike traditional clients, LLMs can generate unpredictable inputs, potentially triggering unintended API calls, exposing sensitive data, or escalating access beyond intended boundaries. That means the old API security playbook isn’t enough.

In this blog, we’ll break down how to expose your APIs to LLMs safely, without compromising on control. From authentication best practices and secure routing to prompt validation and zero trust architectures, we’ll explore the key design patterns and tools you’ll need to protect your systems while embracing this new wave of AI integration.

What does it mean to expose APIs to LLMs?

Exposing APIs to large language models (LLMs) means allowing these models to call, interact with, or retrieve data from enterprise systems via APIs, either directly or through an orchestrating layer. As LLMs become more capable, they're being used to automate tasks like summarising reports, analysing transactions, generating support responses, or even stitching together workflows across internal tools. To do this effectively, they need access to real-time data and actions, which live behind APIs.

But unlike traditional applications, LLMs are not fixed-code clients. They generate dynamic prompts and outputs, which makes it harder to predict or restrict what they’ll request. Giving an LLM unfiltered access to an internal payments API, for example, could lead to unexpected behaviour, like triggering unauthorised refunds or exposing sensitive customer data.

In a secure enterprise setting, “exposing an API” to an LLM doesn’t mean removing authentication or dropping it into the open web. It means designing a controlled interface where LLMs can access certain endpoints with scoped permissions, monitored behaviour, and strict validation. This might involve routing requests through a proxy, wrapping the APIs with governance rules, or layering in guardrails like rate limits, logging, and prompt filtering.

Why does API security get tricky with LLMs?

While APIs have long been secured through authentication, rate limiting, and input validation, large language models introduce new variables that make traditional safeguards harder to rely on. Their unpredictability, dynamic prompting, and lack of explicit boundaries present novel challenges. Here are the key reasons API security becomes more complex when LLMs are involved:

- Unpredictable inputs and outputs: LLMs generate requests on the fly, which means their API calls may not follow predefined structures or expectations. This makes it harder to anticipate the shape or content of each request, increasing the risk of malformed inputs or unintentional actions.

- Prompt injection attacks: Just like SQL injection in the early web era, prompt injection involves a user manipulating the LLM’s instructions to bypass intended behaviours. This could lead to unauthorised API access, data leaks, or unintended function calls.

- Lateral access escalation: Once an LLM has limited API access, a poorly scoped token or misconfigured backend could allow it to move laterally, accessing other endpoints or systems it wasn’t meant to. This is particularly risky in microservices or distributed environments.

- Lack of user identity and context: LLMs often act as an intermediary without a clear user session or identity. This breaks traditional models of access control and auditing, making it harder to trace intent or enforce user-level permissions.

Key threats when integrating APIs with LLMs

Integrating APIs with LLMs brings efficiency and intelligence to workflows, but it also opens up new avenues for security breaches. These risks are not hypothetical; they’re emerging in real-world use cases where APIs are exposed to dynamic, model-driven behaviour. Below are the most pressing threats you should watch for:

1. API key leakage

LLMs may be prompted to display or log sensitive credentials if not properly sandboxed. If API keys are hardcoded into prompts, environment variables, or request templates, they can easily be surfaced in outputs, stored in logs, or leaked to unintended recipients.

2. Over-permissioned access

A common oversight is granting the LLM broad access to backend APIs using a single, privileged token. Without scoped permissions or access tiers, the model may access sensitive resources—even if the original prompt didn’t intend it—leading to potential data exposure.

3. Malicious payloads

LLMs can be tricked into generating or submitting harmful payloads, either through prompt manipulation or external instructions. This includes sending malformed API requests, injecting rogue parameters, or exploiting known endpoint behaviours in ways developers didn’t anticipate.

4. Unmonitored usage

If API requests from LLMs aren’t logged or monitored in real time, it becomes difficult to detect abnormal patterns. This lack of visibility creates blind spots where misuse, overuse, or data exfiltration can occur without raising alerts or triggering thresholds.
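To make the key-leakage threat above more concrete, here is a minimal Python sketch of scrubbing credential-like strings from model output or log lines before they are stored or shown. The two regular expressions and the `redact_secrets` name are illustrative assumptions, not a complete detector; real key formats vary, so the patterns would need tuning to the credentials your systems actually issue.

```python
import re

# Hypothetical patterns for credential-like strings. They are deliberately
# simple and will miss formats they were not written for.
BEARER = re.compile(r"(?i)\bbearer\s+[A-Za-z0-9._~+/=-]{16,}")
KEY_VALUE = re.compile(
    r"(?i)\b(api[_-]?key|token|secret)(\s*[:=]\s*)['\"]?[A-Za-z0-9._-]{16,}['\"]?"
)


def redact_secrets(text: str) -> str:
    """Replace anything that looks like a credential with a placeholder."""
    text = BEARER.sub("Bearer [REDACTED]", text)
    # Keep the key name and separator so the log line stays readable.
    return KEY_VALUE.sub(lambda m: f"{m.group(1)}{m.group(2)}[REDACTED]", text)


if __name__ == "__main__":
    print(redact_secrets("calling with api_key=abcd1234efgh5678ijkl"))
```

Running a filter like this on both the text sent to the model and the text written to logs is a second line of defence. The first is still to keep credentials out of prompts entirely.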

Secure design patterns for LLM-API integration

Building secure bridges between LLMs and APIs requires architectural forethought. Unlike typical API clients, LLMs need to be wrapped with safety layers that inspect, filter, and enforce policy around every interaction. The goal is to enable access without compromising security or control.

- API proxies: A proxy acts as a controlled gateway between the LLM and your backend APIs. It can rewrite or inspect requests, inject headers, apply rate limits, and filter inputs, helping to ensure that no raw or dangerous calls ever reach the core system directly.

- Access mediation: Instead of granting the LLM full API access, introduce an orchestration layer that mediates what actions are allowed based on user intent, identity, or session context. This layer can map prompts to safe, predefined operations and enforce logic constraints.

- Input sanitisation: All requests generated by the LLM should be validated and cleaned before hitting your APIs. This helps protect against injection attacks, malformed parameters, or unexpected payloads—especially when prompts involve user-generated content or open-ended input.

- Output filtering: Even if an API response is technically correct, it may contain sensitive data. Add a post-processing layer that filters or redacts fields, enforces data privacy rules, or blocks high-risk responses from being exposed to the user or the model.

- Prompt templates with guardrails: Use templated prompts with strict formatting and embedded constraints to ensure only specific API routes or operations are available to the LLM. This limits the model’s ability to improvise and keeps interactions bounded to expected behaviour.

- Role-based token generation: Dynamically issue short-lived tokens to the LLM with tightly scoped roles or permissions for each task. This ensures that even if the token is leaked or abused, the blast radius is minimal and revocation is straightforward.

- Natural language-to-API mapping constraints: Limit the number of APIs an LLM can invoke through a mapping layer that translates only approved phrases or intents into specific actions. This reduces ambiguity and prevents the model from accessing unauthorised endpoints.

- Continuous monitoring and feedback loops: Track usage patterns in real-time and feed alerts or audit logs back into the system. This allows for active anomaly detection, adaptive throttling, and post-incident analysis—critical for keeping LLM access auditable and accountable.
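Several of the patterns above (access mediation, input sanitisation, output filtering, and intent-to-API mapping) can be combined in one small mediation layer. The Python sketch below is one possible shape under stated assumptions: the intent names, paths, validators, and sensitive field names are all hypothetical, and a real deployment would load them from reviewed configuration rather than hardcode them.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass(frozen=True)
class Operation:
    method: str
    path_template: str
    validators: dict[str, Callable[[Any], bool]]


# Hypothetical allowlist: the only operations the LLM may ever trigger.
ALLOWED_OPERATIONS = {
    "get_order_status": Operation(
        method="GET",
        path_template="/orders/{order_id}",
        validators={
            "order_id": lambda v: isinstance(v, str)
            and v.isascii() and v.isalnum() and len(v) <= 32
        },
    ),
}

# Hypothetical field names that must never reach the model.
SENSITIVE_FIELDS = {"card_number", "ssn", "email"}


class RejectedRequest(Exception):
    """Raised when an LLM-proposed call falls outside the allowlist."""


def build_request(intent: str, params: dict[str, Any]) -> tuple[str, str]:
    """Translate an approved intent into (method, path), or reject it."""
    op = ALLOWED_OPERATIONS.get(intent)
    if op is None:
        raise RejectedRequest(f"intent not allowed: {intent!r}")
    unexpected = set(params) - set(op.validators)
    if unexpected:
        raise RejectedRequest(f"unexpected parameters: {sorted(unexpected)}")
    for name, is_valid in op.validators.items():
        if name not in params or not is_valid(params[name]):
            raise RejectedRequest(f"invalid or missing parameter: {name}")
    return op.method, op.path_template.format(**params)


def filter_response(payload: Any) -> Any:
    """Recursively redact sensitive fields from an API response."""
    if isinstance(payload, dict):
        return {
            key: "[REDACTED]" if key in SENSITIVE_FIELDS else filter_response(value)
            for key, value in payload.items()
        }
    if isinstance(payload, list):
        return [filter_response(item) for item in payload]
    return payload
```

The design choice here is default deny: the model proposes an intent and parameters, but only the mediation layer constructs the actual request, so an unlisted intent such as a refund never reaches the backend.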

How to authenticate and authorise LLM access to APIs


Securing API access for LLMs starts with robust authentication and fine-grained authorisation. Since LLMs are not human users, traditional identity models don’t always apply. Instead, you need strategies that ensure the right level of access for the right use case—whether internal, external, or automated.

1. OAuth2 vs API keys

API keys are simple but often too permissive and difficult to manage at scale. OAuth2, by contrast, offers more secure, token-based authentication with scopes, expiry, and revocation. For LLM use cases, OAuth2 is generally preferred as it enables better control over what the model can do and for how long.

2. Role-based and scoped tokens

Assign roles to different API consumers and issue tokens with clearly defined scopes. This ensures the LLM can only access a limited set of endpoints or perform specific actions. Scoping access is crucial to preventing accidental overreach or data exposure from general-purpose LLM queries.

3. Managing internal vs partner access

Internal LLMs may need broader access across services, while partner-facing models should operate within tightly restricted boundaries. Use different identity providers or authentication flows for each, and apply context-aware policies to keep data separation clean and compliant.

4. JWTs with embedded claims

JSON Web Tokens (JWTs) allow you to include user or session information directly within the token payload. This makes it easier to verify permissions without hitting a central database on every request and enables context-rich access decisions for LLM-driven actions.

5. mTLS (mutual TLS) authentication

In high-security environments, mutual TLS ensures both the client (LLM wrapper or agent) and the server authenticate each other. This adds a strong layer of trust, particularly useful when exposing APIs in private networks or regulated industries.

6. Token expiration and rotation policies

Always use short-lived tokens for LLM access and rotate them frequently. This minimises the risk window in case of token leakage and prevents persistent access from outdated or stale authorisations that no longer reflect current permissions.

7. API gateway-based identity enforcement

Use your API gateway to centralise identity checks and enforce policies. This includes verifying tokens, applying rate limits, validating scopes, and rejecting unauthorised calls, all without modifying backend services.
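The ideas of scoped tokens, embedded claims, and short expiry can be illustrated with a small signed token. The sketch below uses only the Python standard library to build and check an HS256 token in the JWT compact format. The function names, the `llm-agent` subject, and the scope strings are assumptions for illustration, and in production you would normally rely on your identity provider and a maintained JWT library rather than hand-rolled code like this.

```python
import base64
import hashlib
import hmac
import json
import time


def _b64url(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def _b64url_decode(text: str) -> bytes:
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def issue_token(secret, subject, scopes, ttl_seconds=300, now=None):
    """Issue a short-lived token carrying a space-separated scope claim."""
    issued_at = int(time.time() if now is None else now)
    header = {"alg": "HS256", "typ": "JWT"}
    claims = {
        "sub": subject,
        "scope": " ".join(scopes),
        "iat": issued_at,
        "exp": issued_at + ttl_seconds,
    }
    signing_input = (
        _b64url(json.dumps(header).encode()) + "." + _b64url(json.dumps(claims).encode())
    )
    signature = hmac.new(secret, signing_input.encode("ascii"), hashlib.sha256).digest()
    return signing_input + "." + _b64url(signature)


def verify_token(secret, token, required_scope, now=None):
    """Return the claims if the token is valid, unexpired, and in scope; else None."""
    try:
        header_b64, claims_b64, signature_b64 = token.split(".")
        expected = hmac.new(
            secret, f"{header_b64}.{claims_b64}".encode("ascii"), hashlib.sha256
        ).digest()
        # Constant-time comparison avoids leaking signature bytes via timing.
        if not hmac.compare_digest(expected, _b64url_decode(signature_b64)):
            return None
        header = json.loads(_b64url_decode(header_b64))
        claims = json.loads(_b64url_decode(claims_b64))
    except ValueError:
        return None  # malformed token
    if header.get("alg") != "HS256":
        return None
    current = time.time() if now is None else now
    if claims.get("exp", 0) <= current:
        return None
    if required_scope not in claims.get("scope", "").split():
        return None
    return claims
```

A gateway could call `verify_token(secret, token, "orders:read")` before forwarding a request. A token issued for reading orders then fails the check for any write scope, and stops working once its five-minute lifetime has passed.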

Managing secrets and tokens in distributed systems

Managing secrets and tokens securely is a critical part of exposing APIs to LLMs, especially in distributed systems where multiple services, environments, and users may interact with sensitive credentials. When tokens are not properly protected, the risks include unauthorised access, accidental leaks, and full system compromise.

One of the most common pitfalls is hardcoding API keys into scripts, prompt templates, or source code. This may seem convenient during prototyping, but it becomes highly dangerous in production. Hardcoded secrets can easily end up in public repositories, logs, or shared documentation, making them an easy target for attackers.

Instead, enterprises should use secret management tools like AWS Secrets Manager, Google Cloud Secret Manager, or HashiCorp Vault. These tools securely store secrets, enforce access controls, and support automatic rotation. By managing secrets centrally, you ensure that tokens are never directly visible in code or exposed beyond necessary boundaries.

Another best practice is runtime token injection. This involves retrieving secrets just-in-time using environment variables, service mesh layers, or orchestration tools to inject tokens securely into API calls. This ensures credentials are not permanently stored or visible to LLMs or intermediary services.
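As a rough illustration of runtime token injection, the Python sketch below reads a credential from the environment at call time and attaches it to the outgoing request headers, outside anything the model can see. The variable name `LLM_GATEWAY_TOKEN` and the helper name are hypothetical. In practice the environment variable would itself be populated by a secret manager or orchestration tool rather than set by hand.

```python
import os


class MissingSecretError(RuntimeError):
    """Raised when the expected credential has not been injected."""


def auth_headers(env_var: str = "LLM_GATEWAY_TOKEN", environ=None) -> dict:
    """Build request headers from a secret injected at runtime.

    The token is read just in time and never written into prompts,
    templates, or source code.
    """
    source = os.environ if environ is None else environ
    token = source.get(env_var, "").strip()
    if not token:
        # Fail closed: no credential means no call, not an anonymous call.
        raise MissingSecretError(f"{env_var} is not set")
    return {"Authorization": f"Bearer {token}"}
```

The wrapper that executes the model's approved API call would merge these headers in at the last step, so the prompt and the model's context contain only the operation and its parameters.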

Using API gateways to control LLM access

API gateways are essential when exposing APIs to LLMs. They provide a central control point to manage access, enforce rules, and observe behaviour across services. Here’s how they help you maintain security and governance without limiting functionality:

- Traffic control: Gateways help define which APIs are accessible to LLMs, when, and how often. This prevents excessive or unintended requests, especially when models generate unpredictable patterns or trigger loops in prompts.

- Policy enforcement: Through authentication, IP allowlisting, and role-based rules, gateways ensure that only authorised requests are allowed. This enforces a clear boundary between what the LLM can ask and what your backend will accept.

- Throttling and logging: Gateways apply rate limits to reduce abuse and ensure fair use across clients. They also provide real-time logging of all requests, making it easier to detect anomalies, audit activity, and investigate security events.

- Context-aware routing: Depending on the LLM, user, or prompt type, requests can be routed to different services or environments. This helps you isolate sensitive operations from lower-trust interactions, reducing the attack surface.

- Custom response handling: Gateways can intercept API responses, filter or redact sensitive fields, and return modified outputs. This adds an extra layer of protection when exposing data to models that may surface unintended details.
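The throttling behaviour described above is often implemented with a token bucket. Gateways ship this as a built-in feature, so the Python sketch below is only meant to show the mechanism. The class name and parameters are illustrative assumptions, not any particular gateway's API.

```python
import time


class TokenBucket:
    """Allow short bursts up to `capacity`, refilled at a steady rate."""

    def __init__(self, capacity: int, refill_per_second: float, clock=time.monotonic):
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self._clock = clock
        self._tokens = float(capacity)
        self._last = clock()

    def allow(self) -> bool:
        """Return True and consume a token if the call may proceed."""
        now = self._clock()
        elapsed = now - self._last
        self._last = now
        # Refill in proportion to elapsed time, never above capacity.
        self._tokens = min(self.capacity, self._tokens + elapsed * self.refill_per_second)
        if self._tokens >= 1:
            self._tokens -= 1
            return True
        return False
```

Keeping one bucket per model identity or per token, for example in a dictionary keyed by client ID, stops a single agent stuck in a prompt loop from exhausting capacity for everyone else.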

Implementing zero trust for API-led LLM systems

As LLMs become API clients in their own right, traditional perimeter-based security no longer holds up. Zero trust offers a better model, where every request must prove it belongs, regardless of origin. Here’s how to implement zero trust principles in systems that expose APIs to LLMs:

- Identity-based access for every request: Every API call from an LLM should carry a verified identity, whether tied to the user, the model, or a service wrapper. Use tokens with embedded claims or mTLS to authenticate each interaction and ensure requests aren’t blindly trusted based on location or origin.

- Network segmentation: Divide your infrastructure into isolated zones, so even if one part is compromised, the rest remains protected. LLM-accessible APIs should be placed behind internal firewalls or service meshes, with gateways enforcing strict traffic flows between zones.

- Principle of least privilege: Give the LLM access only to the APIs it absolutely needs—nothing more. Apply fine-grained scopes, restrict token lifespans, and avoid bundling unrelated permissions. If one capability is misused, it shouldn’t open doors to everything else.

- Continuous validation and monitoring: Zero trust isn’t just about the first check—it requires ongoing validation. Monitor LLM activity in real-time, flag abnormal patterns, and terminate access instantly if something looks off.

- Policy-as-code enforcement: Define zero-trust rules using policy engines like OPA or service mesh policies. This ensures consistency across environments and makes it easier to scale governance as your system evolves.
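To show what a policy-as-code rule set with least privilege might look like, here is a plain Python stand-in. It is not OPA or Rego syntax. The rule fields, scope names, and paths are hypothetical, and a real system would express the same default-deny logic in its chosen policy engine.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    identity: str        # verified caller identity, e.g. from a token claim
    scopes: frozenset    # scopes granted to that identity
    method: str
    path: str


# Hypothetical policy: each rule grants one narrow capability.
POLICY = [
    {"method": "GET", "path_prefix": "/orders/", "scope": "orders:read"},
    {"method": "POST", "path_prefix": "/tickets/", "scope": "tickets:write"},
]


def is_allowed(request: Request) -> bool:
    """Default deny: allow only if identity is present and a rule matches."""
    if not request.identity:
        return False
    for rule in POLICY:
        if (
            request.method == rule["method"]
            and request.path.startswith(rule["path_prefix"])
            and rule["scope"] in request.scopes
        ):
            return True
    return False
```

Because the rules are data, they can be version-controlled, reviewed, and tested like any other code, which is the main appeal of the policy-as-code approach.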

Real-world tools and frameworks you can use

Building a secure and scalable system that connects LLMs to APIs isn’t just about theory—it’s about using the right tools to enforce safety, manage workflows, and retain control. From orchestration frameworks to runtime layers, these technologies can help you operationalise LLM integration without compromising security or observability.

1. LangChain

LangChain is a widely adopted open-source framework designed to build applications powered by LLMs. It enables developers to compose "chains" of operations like calling APIs, handling logic branches, and managing memory, all orchestrated via prompts. When used with strict input/output validation and scoped permissions, LangChain can help structure how and when LLMs trigger API requests, reducing randomness and improving safety.

2. Apache Beam + Google Dataflow

Apache Beam offers a unified model for batch and streaming data pipelines, while Google Dataflow serves as its fully managed execution engine. For teams building distributed LLM workflows that include API interactions, this combo provides fault tolerance, scaling, and fine-grained control over how data flows to and from models. Beam allows you to define strict transforms and checkpoints, ensuring LLM-generated API calls meet compliance and validation standards.

3. Reverse proxies (e.g., NGINX, Envoy)

Reverse proxies sit between the LLM and your APIs, allowing you to filter, reshape, or inspect requests in real-time. For example, an LLM might try to send variable parameters to a backend service, and your proxy can strip, rewrite, or validate these before they reach sensitive systems. With rate limiting, logging, and dynamic routing built in, reverse proxies add a strong enforcement layer without requiring changes to your APIs.

4. LLM-aware API management platforms

A new generation of API management tools like DigitalAPI, Kong with AI plugins, or bespoke internal platforms are evolving to handle LLM use cases directly. These platforms often include prompt-aware request inspection, access controls tied to model identity, and adaptive rate throttling. They allow organisations to publish APIs specifically for LLM access, define usage contracts, and observe how models consume them, ensuring trust without sacrificing agility.

Best practices checklist for secure LLM-API access

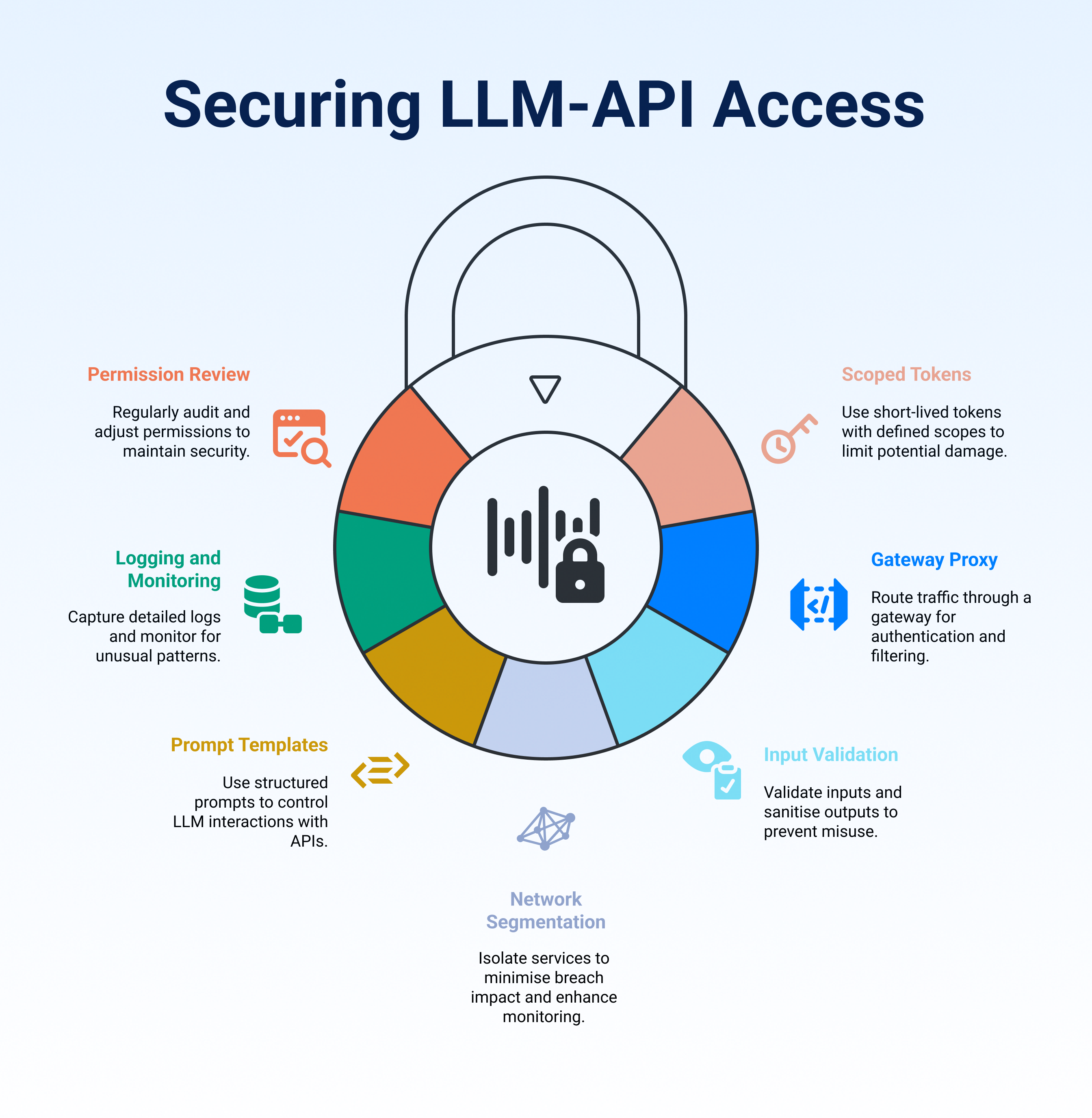

As LLMs become trusted actors in enterprise systems, securing their API access is no longer optional; it’s essential. Below is a practical checklist that brings together the core principles discussed above. Use it as a reference when designing or reviewing your LLM-API architecture:

- Use scoped, short-lived tokens: Always issue tokens with tightly defined scopes and short expiry times. This limits the damage if a token is misused or leaked by the LLM or any intermediary layer.

- Proxy all LLM traffic through a gateway: Don’t let LLMs talk to production APIs directly. Route requests through an API gateway or proxy that enforces authentication, rate limits, logging, and output filtering.

- Validate inputs and sanitise outputs: Never trust LLM-generated requests without validation. Sanitise all input parameters and filter sensitive fields from API responses before returning them to the model.

- Isolate access using network segmentation: Place LLM-accessible services in a segmented zone within your infrastructure. This minimises lateral movement in case of a breach and allows for more granular monitoring.

- Use prompt templates with embedded controls: Control how the LLM interacts with APIs by using structured, guardrailed prompts. Avoid open-ended instructions that could trigger unintended behaviour.

- Log everything and monitor usage: Capture detailed logs of every LLM-driven API request. Monitor for spikes, unusual patterns, or misuse, and integrate with your alerting systems.

- Review permissions regularly: Periodically audit what APIs the LLM has access to. Revoke unused permissions and adjust scopes as workflows evolve to maintain the principle of least privilege.
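For the logging and monitoring item in the checklist, a sliding-window counter is one simple way to flag spikes in LLM-driven calls. The Python sketch below is illustrative only: the class name and thresholds are assumptions, and a production setup would more likely rely on the alerting features of its gateway or observability stack.

```python
from collections import deque


class SpikeDetector:
    """Flag when more than `max_calls` occur within `window_seconds`."""

    def __init__(self, window_seconds: float, max_calls: int):
        self.window_seconds = window_seconds
        self.max_calls = max_calls
        self._timestamps = deque()

    def record(self, timestamp: float) -> bool:
        """Record one call; return True if the window now exceeds max_calls."""
        self._timestamps.append(timestamp)
        # Drop calls that have fallen out of the window.
        while self._timestamps and self._timestamps[0] <= timestamp - self.window_seconds:
            self._timestamps.popleft()
        return len(self._timestamps) > self.max_calls
```

A `True` result would typically raise an alert or trigger adaptive throttling rather than block the call outright, since a spike may still be legitimate traffic.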

Final thoughts

As LLMs become more deeply integrated into enterprise systems, exposing APIs to them is no longer a futuristic idea; it’s today’s challenge. But with great potential comes great responsibility. Unlike traditional clients, LLMs operate in open-ended, probabilistic ways, which means securing their API access demands a new mindset.

By combining proven security principles with LLM-specific safeguards, such as scoped tokens, prompt guardrails, and API gateways, you can enable innovation while keeping risk contained. Zero trust, policy enforcement, and continuous monitoring aren’t optional; they’re essential.

Ultimately, safe LLM–API integration is about balance: empowering models to act, but always within a controlled, observable, and reversible framework. With the right design and tools, you can make your APIs LLM-ready without breaking security.

FAQs

1. What does it mean to expose APIs to LLMs?

It refers to the process of allowing large language models (LLMs) to call, interact with, or retrieve data via your APIs. Unlike traditional clients, LLMs operate dynamically—they generate unpredictable inputs and need well-scoped access to avoid misuse.

2. Why is API security more complex when integrating with LLMs?

LLMs can generate unpredictable requests that may not follow fixed structures, and they can be steered by prompt injection or exploited for lateral access escalation. Traditional API protections such as rate limits and static validation aren’t enough on their own.

3. What are common threats when letting LLMs access APIs?

Key threats include API key leakage, granting over-permissioned access, malicious payloads generated by LLMs, and unmonitored usage that leads to data exposure or misuse.

4. How should we authenticate and authorise LLM access to APIs?

Use secure methods like OAuth2 with scoped, short-lived tokens, role-based access, JWTs with embedded claims, and mutual TLS (mTLS). Avoid relying on simple API keys, which tend to be too permissive.

5. What design patterns help safely integrate LLMs with backend APIs?

Patterns include using API proxies or gateways to mediate requests, input sanitisation and output filtering, prompt templates with guardrails, mapping natural-language intents to approved actions, and continuous monitoring with feedback loops.

6. How can organisations implement a zero-trust model for LLM-API systems?

Enforce identity-based access for every request, segment networks, apply the principle of least privilege, validate continuously, define rules as policy-as-code, and monitor all activity in real time.

7. What tools or frameworks support secure LLM-to-API integration?

Examples include LangChain for structuring LLM workflows, reverse proxies such as Envoy or NGINX for request filtering, and modern API-management platforms designed to support LLM access.

