The rise of AI agents is reshaping how software interacts with the world. From personal assistants like ChatGPT to autonomous systems like AutoGPT and LangChain-powered agents, these intelligent systems are no longer just passive responders; they're active participants, capable of taking actions, making decisions, and even calling APIs on behalf of users.

But there’s a catch: most APIs weren’t built with AI agents in mind. They’re often designed for human developers, with assumptions about context, structure, and documentation that simply don’t translate to machine understanding. As AI agents become more prominent in workflows, from customer service automation to backend orchestration, the demand for agent-ready APIs is rapidly growing.

In this post, we’ll explore what it means to make your API “agent-friendly,” why it matters, and how you can evolve your existing APIs to support AI-driven automation.

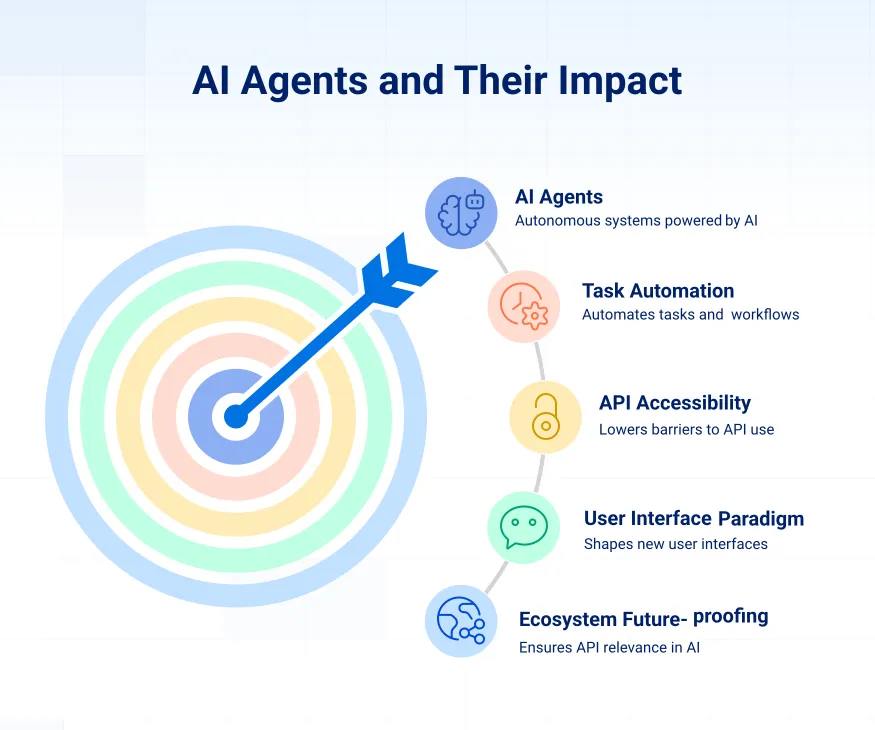

What are AI Agents and why do they matter?

AI agents are autonomous or semi-autonomous systems powered by large language models (LLMs) or other AI technologies that can understand context, make decisions, and perform tasks. Unlike simple chatbots, AI agents can plan and execute multi-step actions using tools like APIs, databases, or web interfaces. Some examples include ChatGPT plugins, AutoGPT, and custom-built LangChain agents.

For instance, a travel-booking AI agent could take a user’s natural language request (“Book me a flight to Paris under $600”) and then search flights, compare prices, and complete the booking via an airline’s API, without needing direct user intervention. These agents act more like digital employees than static bots, capable of real-world task execution. Here’s why they matter.

1. Enable true task automation

AI agents can autonomously break down tasks, retrieve data, and act through APIs, freeing users from manual multi-step workflows. This goes beyond typical automation scripts by introducing adaptability and real-time decision-making based on language input and contextual understanding.

2. Expand API accessibility

Traditional APIs require programming knowledge to use. AI agents lower the barrier by translating natural language into API calls, allowing non-developers or low-code environments to interact with complex systems, which democratises access to software capabilities.

3. Shape the next user interface paradigm

Just as GUIs replaced command lines, conversational and action-based interfaces driven by AI agents are emerging as the next major UX shift. APIs that support agents enable businesses to meet users where they are, whether that's in a chat, voice assistant, or embedded assistant UI.

4. Optimise internal operations

AI agents can serve as intelligent connectors between internal services. For example, a support agent can autonomously retrieve customer data, check service logs, and file tickets using internal APIs, cutting human overhead and response times in enterprise settings.

5. Future-proof API ecosystems

As AI-native platforms and agent-driven systems proliferate, APIs that are not agent-compatible risk becoming obsolete. Designing with agents in mind ensures your API remains interoperable, discoverable, and relevant in the evolving AI-first software landscape.

Principles of agent-friendly APIs

To work effectively with AI agents, APIs must go beyond basic functionality. They need to be designed in a way that allows machine understanding, flexibility, and predictable outcomes. Below are the key principles that make an API truly agent-friendly.

- Self-describing: AI agents rely on structured documentation to understand how to interact with an API. Providing a complete and well-structured OpenAPI specification, including descriptions, parameter details, and example responses, helps agents parse and reason about functionality without human interpretation.

- Context-aware: APIs should include meaningful, human-readable descriptions for each endpoint and parameter, explaining not just what they do but why. This helps AI agents make decisions about which endpoints to use and in what context, especially when dealing with multiple options or conditional logic.

- Predictable: Consistency in structure, response format, and behaviour is critical. AI agents perform best when APIs return predictable outputs with clearly defined schemas, status codes, and error messages. Avoid hidden behaviours or undocumented edge cases that could confuse or mislead autonomous agents.

- Composable: Well-designed APIs allow endpoints to be combined or chained logically. This modularity enables agents to complete complex workflows by using multiple endpoints together. Avoid monolithic actions that do too much or require rigid sequencing, as they can limit agent flexibility.

- Accessible: APIs should use standard authentication flows such as OAuth 2.0 and keep onboarding straightforward. Rate limits, error handling, and required credentials should be clearly documented. The easier it is for an AI agent to interact with your API, the more reliably it can integrate into its task planning and execution.

How to upgrade your API for AI agent compatibility

Designing APIs for human developers is no longer enough. AI agents are now consuming and acting upon APIs without supervision, so clarity, structure, and semantic context are critical. Upgrading your API means making it machine-readable, natural language-friendly, and robust against ambiguity or unpredictability.

1. Use OpenAPI 3.0+ with full schema coverage

The OpenAPI specification (version 3.0 or later) is the most widely used machine-readable format for describing what your API does, and it is what many agent frameworks read. But simply having an OpenAPI file isn’t enough. Your schema needs to be complete. That means defining every endpoint, parameter, request body, response format, and status code in detail.

Use description fields for all components, including individual fields within objects, and avoid generic or placeholder content. The richer and more precise your schema, the more effectively an agent can reason about your API.
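One way to keep coverage honest is to check the spec automatically. The sketch below is a minimal illustration, not a full linter: the `SPEC` fragment, the `/orders` endpoint, and the `missing_descriptions` helper are all hypothetical, and it only checks operations and parameters.

```python
from typing import Iterator

# Hypothetical OpenAPI 3.0 fragment, written as a Python dict for illustration.
SPEC = {
    "openapi": "3.0.3",
    "info": {"title": "Orders API", "version": "1.0.0"},
    "paths": {
        "/orders": {
            "get": {
                "operationId": "listOrders",
                "description": "Returns a list of orders placed within a specified date range.",
                "parameters": [
                    {"name": "start_date", "in": "query",
                     "description": "First day of the range, as YYYY-MM-DD.",
                     "schema": {"type": "string", "format": "date"}},
                    # Description left out on purpose so the check has something to find.
                    {"name": "status", "in": "query", "schema": {"type": "string"}},
                ],
                "responses": {"200": {"description": "Orders matching the filters."}},
            }
        }
    },
}

HTTP_METHODS = {"get", "put", "post", "delete", "patch", "head", "options", "trace"}


def missing_descriptions(spec: dict) -> Iterator[str]:
    """Yield a label for every operation or parameter without a description."""
    for path, item in spec.get("paths", {}).items():
        for method, operation in item.items():
            if method not in HTTP_METHODS:
                continue  # skip path-level keys such as "parameters" or "summary"
            label = f"{method.upper()} {path}"
            if not operation.get("description", "").strip():
                yield label
            for param in operation.get("parameters", []):
                if not param.get("description", "").strip():
                    yield f"{label} parameter '{param['name']}'"


print(list(missing_descriptions(SPEC)))
```

Running a check like this in CI is one option for stopping undocumented parameters from reaching agents in the first place.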

2. Add natural language descriptions everywhere

AI agents process API specs using natural language models, so how you phrase your descriptions matters. Avoid technical jargon or vague comments like “retrieves data.” Instead, use clear, conversational descriptions such as: “Returns a list of orders placed within a specified date range, optionally filtered by status.”

Add explanations for what each parameter does, why it matters, and how it changes the behaviour of the endpoint. This helps the agent make informed decisions in complex workflows.

3. Implement MCP servers for dynamic agent integration

MCP (Model Context Protocol) is an open protocol that lets AI agents discover and call external capabilities in a uniform way. An MCP server sits in front of your API and exposes its operations as tools, each with a name, a natural language description, and a JSON Schema for its inputs, which agents can list and invoke at runtime.

Alongside this, exposing your OpenAPI schema at a known endpoint (e.g. /openapi.json) allows agents and tooling to discover capabilities, authentication methods, and response patterns on the fly without hardcoding rules. Together, these keep your API adaptive, discoverable, and directly usable by autonomous systems.
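In the Model Context Protocol itself, a server advertises its capabilities as tools that a client lists and calls over JSON-RPC 2.0. The sketch below shows the shape of that exchange using only the standard library. It is deliberately simplified: it skips the initialization handshake and the transport layer, and the `list_orders` tool and its backend are hypothetical. A production server would more likely use an official MCP SDK.

```python
import json

# Hypothetical tool registry: one tool wrapping an imagined "list orders" endpoint.
TOOLS = [{
    "name": "list_orders",
    "description": "Returns orders placed within a date range.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "start_date": {"type": "string", "description": "YYYY-MM-DD"},
            "end_date": {"type": "string", "description": "YYYY-MM-DD"},
        },
        "required": ["start_date", "end_date"],
    },
}]


def list_orders(start_date: str, end_date: str) -> str:
    # Stand-in for a call to the real backend API.
    return json.dumps({"orders": [], "start_date": start_date, "end_date": end_date})


HANDLERS = {"list_orders": list_orders}


def handle(message: dict) -> dict:
    """Answer one JSON-RPC 2.0 request for the two tool-related methods."""
    method, params = message["method"], message.get("params", {})
    if method == "tools/list":
        result = {"tools": TOOLS}
    elif method == "tools/call":
        handler = HANDLERS.get(params.get("name"))
        if handler is None:
            return {"jsonrpc": "2.0", "id": message["id"],
                    "error": {"code": -32602, "message": "Unknown tool"}}
        text = handler(**params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": text}], "isError": False}
    else:
        return {"jsonrpc": "2.0", "id": message["id"],
                "error": {"code": -32601, "message": "Method not found"}}
    return {"jsonrpc": "2.0", "id": message["id"], "result": result}


print(handle({"jsonrpc": "2.0", "id": 1, "method": "tools/list"}))
```

The agent never sees your URL structure here; it sees tool names, descriptions, and input schemas, which is why the quality of those descriptions matters so much.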

4. Provide request and response examples

Examples are one of the most powerful tools for agent understanding. For every endpoint, include example requests and responses that reflect realistic use cases, edge cases, and variations. Show what a valid input looks like, what a successful output includes, and how errors appear in practice.

Use multiple examples where necessary to demonstrate optional fields or dynamic behaviour. These examples give the underlying language model concrete patterns to follow when it forms request payloads and interprets API responses.
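In OpenAPI 3.0, named examples live under the `examples` key of a media type. The fragment below is a hypothetical response definition for an imagined `GET /orders` endpoint, together with a small helper (also an assumption, not part of any library) that checks each example actually carries the fields the schema marks as required.

```python
# Hypothetical response definition, written as a Python dict for illustration.
RESPONSE_200 = {
    "description": "Orders matching the filters.",
    "content": {
        "application/json": {
            "schema": {
                "type": "object",
                "required": ["orders", "total"],
                "properties": {
                    "orders": {"type": "array", "items": {"type": "object"}},
                    "total": {"type": "integer"},
                },
            },
            "examples": {
                "two_orders": {
                    "summary": "Two shipped orders in the range",
                    "value": {"orders": [{"id": "o_1", "status": "shipped"},
                                         {"id": "o_2", "status": "shipped"}],
                              "total": 2},
                },
                "no_matches": {
                    "summary": "Valid request with no matching orders",
                    "value": {"orders": [], "total": 0},
                },
            },
        }
    },
}


def examples_missing_required(response: dict) -> dict[str, list[str]]:
    """Map each example name to the required fields its value lacks."""
    problems = {}
    for media in response.get("content", {}).values():
        required = media.get("schema", {}).get("required", [])
        for name, example in media.get("examples", {}).items():
            missing = [field for field in required if field not in example.get("value", {})]
            if missing:
                problems[name] = missing
    return problems


print(examples_missing_required(RESPONSE_200))
```

Including the empty-result case as its own example shows the agent that "no matches" is a normal response and not an error.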

5. Ensure responses are deterministic

Agents need consistency to plan actions. If your API sometimes returns a field, sometimes doesn’t, or changes its structure depending on hidden states or server conditions, the agent will struggle to interact with it. Always return responses in a structured, predictable format, even if the result is empty or there’s an error.

Include optional fields consistently (for example, as an explicit null rather than omitting them), keep list results in a stable, documented order, and never rely on undocumented side effects. Determinism is foundational for trust and usability in autonomous workflows.
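As a rough illustration, a response builder can enforce a fixed shape regardless of what the query returned. The `order_response` function and its field names are hypothetical; the point is that every key is always present and lists come back in a stable order.

```python
import json
from typing import Optional


def order_response(orders: list, next_cursor: Optional[str] = None) -> str:
    """Serialise a list of orders into a fixed-shape JSON envelope."""
    body = {
        # Sort by id so the same data always comes back in the same order.
        "orders": sorted(orders, key=lambda order: order["id"]),
        "total": len(orders),
        # Always present: an explicit null instead of a missing key.
        "next_cursor": next_cursor,
    }
    return json.dumps(body, sort_keys=True)


print(order_response([]))
print(order_response([{"id": "o_2"}, {"id": "o_1"}], next_cursor="abc"))
```

Both calls produce the same three keys, so an agent can rely on one parsing path for empty and non-empty results.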

6. Use structured and descriptive error messages

Error handling should be as informative and machine-friendly as your success responses. Avoid vague messages like “Something went wrong.” Instead, use consistent HTTP status codes along with structured JSON error objects that include error_code, message, type, and optionally hint or resolution.

For example, in JSON: {"error_code": "INVALID_DATE", "message": "The date format must be YYYY-MM-DD", "type": "validation_error"}

This enables agents to understand what failed and make decisions like retrying, adjusting input, or reporting the error to users in natural language.
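To make that concrete, here is a minimal sketch of how an agent-side client might turn a structured error into a next step. The `next_action` function and its three outcomes are assumptions for illustration; the `type` field follows the error shape described above.

```python
import json


def next_action(status: int, body: str) -> str:
    """Pick 'adjust_input', 'retry', or 'report' from an error response."""
    try:
        error = json.loads(body)
    except json.JSONDecodeError:
        return "report"  # unstructured error: nothing to reason about
    if not isinstance(error, dict):
        return "report"
    if error.get("type") == "validation_error":
        return "adjust_input"  # the message says what to fix
    if status == 429 or status >= 500:
        return "retry"  # rate limiting or a server-side failure
    return "report"


body = ('{"error_code": "INVALID_DATE", '
        '"message": "The date format must be YYYY-MM-DD", '
        '"type": "validation_error"}')
print(next_action(400, body))
```

Note how the plain-text case falls straight through to "report": without structure, the agent has no basis for recovery.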

7. Simplify authentication flows

While AI agents can handle token-based authentication, overly complex or undocumented flows introduce friction. Support standards like OAuth 2.0 client credentials or API keys, and document your token exchange process in detail.

Avoid requiring human login, captchas, or browser redirects unless you’re building for a human-in-the-loop agent. Also, clearly document token expiration, refresh behaviour, and scopes. The goal is seamless, machine-to-machine access without human intervention.
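For reference, the OAuth 2.0 client credentials grant is a single POST with no browser involved. The sketch below builds that request with the standard library but does not send it. The token URL, client ID, secret, and scope are placeholders, and it assumes plain ASCII credentials sent via HTTP Basic authentication; the `is_expired` helper is a hypothetical way to decide when to fetch a new token.

```python
import base64
from urllib.parse import urlencode
from urllib.request import Request


def build_token_request(token_url: str, client_id: str,
                        client_secret: str, scope: str) -> Request:
    """Build (but do not send) a client credentials token request."""
    credentials = base64.b64encode(f"{client_id}:{client_secret}".encode()).decode()
    form = urlencode({"grant_type": "client_credentials", "scope": scope}).encode()
    return Request(token_url, data=form, method="POST", headers={
        "Authorization": f"Basic {credentials}",
        "Content-Type": "application/x-www-form-urlencoded",
    })


def is_expired(issued_at: float, expires_in: int, now: float, skew: int = 30) -> bool:
    """Treat the token as expired slightly early to allow for clock skew."""
    return now >= issued_at + expires_in - skew


# Placeholder values; a real client would load these from configuration.
request = build_token_request("https://auth.example.com/oauth/token",
                              "agent-client", "s3cret", "orders:read")
print(request.get_method(), request.full_url)
```

Documenting `expires_in` and the available scopes lets an agent refresh tokens on its own schedule instead of discovering expiry through failed calls.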

8. Group and tag endpoints logically

Logical organisation helps agents (and humans) discover the right endpoints more easily. Use tags and operation summaries in your OpenAPI spec to categorise functionality, e.g., billing, analytics, user management.

Keep each endpoint's purpose narrow and well-labelled. This not only aids searchability but also improves the relevance ranking of endpoints when an agent is deciding which to use. Naming and grouping should reflect real-world business logic, not internal architecture.
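A quick way to see your API the way an agent might is to group operations by tag. The spec fragment and the `operations_by_tag` helper below are hypothetical, and the fragment only contains HTTP method keys under each path to keep the loop simple.

```python
from collections import defaultdict

# Hypothetical OpenAPI paths with tags and summaries.
SPEC = {
    "paths": {
        "/invoices": {"get": {"tags": ["billing"], "summary": "List invoices"}},
        "/invoices/{id}/refund": {"post": {"tags": ["billing"], "summary": "Refund an invoice"}},
        "/users": {"get": {"tags": ["user management"], "summary": "List users"}},
    }
}


def operations_by_tag(spec: dict) -> dict[str, list[str]]:
    """Group 'METHOD path: summary' labels under each tag."""
    groups = defaultdict(list)
    for path, item in spec["paths"].items():
        for method, operation in item.items():
            for tag in operation.get("tags", ["untagged"]):
                groups[tag].append(f"{method.upper()} {path}: {operation.get('summary', '')}")
    return dict(groups)


for tag, operations in operations_by_tag(SPEC).items():
    print(tag, operations)
```

If a large share of operations lands under "untagged", or a single tag holds unrelated operations, that is a hint the grouping reflects internal architecture more than business logic.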

9. Allow contextual metadata in requests

Agents often need to provide extra context to maintain continuity across actions. Allow optional fields such as session_id, conversation_id, or timestamp so agents can track and link related API calls.

You might also include optional headers or parameters for things like localisation (locale), user preferences, or traceability. These aren’t always essential to the core function but enable more intelligent, personalised agent behaviour.
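One possible shape for this, sketched with a dataclass: the context fields are optional, and only the ones the agent actually set are attached to the payload. `RequestContext` and `with_context` are illustrative names, not part of any framework.

```python
from dataclasses import asdict, dataclass
from typing import Optional


@dataclass
class RequestContext:
    """Optional metadata an agent may attach to link related calls."""
    session_id: Optional[str] = None
    conversation_id: Optional[str] = None
    locale: Optional[str] = None


def with_context(payload: dict, context: RequestContext) -> dict:
    """Return a copy of the payload with any context fields that were set."""
    extras = {key: value for key, value in asdict(context).items() if value is not None}
    return {**payload, **extras}


print(with_context({"status": "shipped"},
                   RequestContext(session_id="s-42", locale="fr-FR")))
```

Because every context field defaults to `None`, callers that do not care about continuity can ignore the feature entirely.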

10. Maintain versioning and backwards compatibility

AI agents may be trained or configured to use a specific version of your API. If you make changes without versioning, you risk breaking those agents in production. Always version your API, either through the URL (e.g. /v1/orders) or via headers.

Document all changes between versions clearly, and avoid removing or repurposing fields without notice. Consider offering changelogs or a deprecation policy to help both developers and AI agents stay in sync.
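A minimal sketch of version resolution that supports both styles, assuming a URL prefix such as /v1/ takes precedence over a header. The `X-API-Version` header name, the supported set, and the default are all assumptions chosen for illustration.

```python
import re

SUPPORTED_VERSIONS = {"v1", "v2"}   # hypothetical
DEFAULT_VERSION = "v1"              # what unversioned callers get


def resolve_version(path: str, headers: dict[str, str]) -> str:
    """Resolve the API version from the URL prefix, then a header, then the default."""
    match = re.match(r"^/(v\d+)/", path)
    version = match.group(1) if match else headers.get("X-API-Version", DEFAULT_VERSION)
    if version not in SUPPORTED_VERSIONS:
        raise ValueError(f"Unsupported API version: {version}")
    return version


print(resolve_version("/v2/orders", {}))
print(resolve_version("/orders", {"X-API-Version": "v2"}))
print(resolve_version("/orders", {}))
```

Pinning the default to the oldest supported version means an agent configured before v2 existed keeps getting the behaviour it was set up against.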

11. Keep data structures simple and consistent

AI agents are far more effective when your data structures are easy to parse and logically consistent. Avoid deeply nested JSON objects or inconsistent formats between similar endpoints. For example, don’t use user_id in one response and uid in another.

Flatten your schemas when possible, and avoid sending large amounts of irrelevant metadata. A clean, predictable structure gives the agent less to process and decreases the chance of errors or misinterpretations.
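To illustrate what flattening means in practice, the helper below collapses nested objects into single-level keys joined by an underscore. It is a sketch under simple assumptions: the `flatten` function is hypothetical, it leaves lists untouched, and it does not guard against key collisions.

```python
def flatten(obj: dict, prefix: str = "", sep: str = "_") -> dict:
    """Collapse nested dicts into one level, joining keys with `sep`."""
    flat = {}
    for key, value in obj.items():
        name = f"{prefix}{sep}{key}" if prefix else key
        if isinstance(value, dict):
            flat.update(flatten(value, name, sep))
        else:
            flat[name] = value
    return flat


nested = {"user": {"id": 7, "address": {"city": "Paris"}}, "status": "active"}
print(flatten(nested))
# {'user_id': 7, 'user_address_city': 'Paris', 'status': 'active'}
```

Whether to flatten at the API boundary or in an adapter layer is a design choice; either way, the agent ends up addressing `user_address_city` directly without walking three levels of nesting.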

Common mistakes to avoid while making your APIs AI-ready

Even with good intentions, it's easy to overlook details that can limit your API's usability for AI agents. These systems depend on clarity, consistency, and semantic context, areas where traditional API design often falls short. Avoiding the following mistakes can save you time and make your API truly agent-compatible.

- Missing or vague descriptions in API specs: Many developers skip writing detailed descriptions for endpoints or parameters, assuming code speaks for itself. But AI agents need natural language context to interpret functionality. Lack of clarity in your OpenAPI spec leads to confusion and errors in agent behaviour.

- Using inconsistent naming conventions: Switching between userId, user_id, and uid across your endpoints creates unnecessary ambiguity. AI agents perform better when naming patterns are predictable and aligned across your entire API. Inconsistency increases the chance of mismatched data and misinterpretation.

- Overcomplicating data structures: Deeply nested JSON objects or dynamically shaped responses make it harder for AI agents to extract and process information. Simpler, flatter structures are easier to parse and reason about. Complexity adds noise and undermines usability.

- Relying on human-driven authentication: Interactive login flows, captchas, or non-standard OAuth implementations block autonomous access. AI agents need machine-friendly, documented authentication like OAuth 2.0 client credentials or static API keys. If a human is required in the loop, the agent cannot operate independently.

- Providing unstructured or unclear error messages: Returning plain-text errors or non-standard error formats makes it difficult for agents to detect and recover from issues. AI agents benefit from consistent, structured errors with codes, types, and resolution hints. Vague messages force unnecessary guesswork or retries.

- Omitting examples from documentation: Documentation without request/response examples leaves agents guessing how to structure their payloads. Concrete examples help language models understand data formats and expected behaviour. Without them, agents may misuse endpoints or fail to execute valid requests.

- Failing to version your API: If your API evolves without clear versioning, previously configured agents may break silently. Always include version identifiers and maintain backwards compatibility where possible. Version control ensures that agents can trust your API’s stability over time.
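Some of these mistakes can be caught mechanically. As one example, the sketch below walks a sample response and reports keys that are not snake_case. The `non_snake_case_keys` helper and the choice of snake_case as the convention are assumptions; note that it only detects casing drift such as userId, not synonym drift such as uid versus user_id.

```python
import re

SNAKE_CASE = re.compile(r"^[a-z][a-z0-9]*(_[a-z0-9]+)*$")


def non_snake_case_keys(value, path: str = "") -> list[str]:
    """Return dotted paths of every key that is not snake_case."""
    found = []
    if isinstance(value, dict):
        for key, child in value.items():
            here = f"{path}.{key}" if path else key
            if not SNAKE_CASE.match(key):
                found.append(here)
            found.extend(non_snake_case_keys(child, here))
    elif isinstance(value, list):
        for child in value:
            found.extend(non_snake_case_keys(child, f"{path}[]"))
    return found


sample = {"user_id": 1, "userId": 2, "items": [{"orderTotal": 3}]}
print(non_snake_case_keys(sample))
# ['userId', 'items[].orderTotal']
```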

Convert your APIs to AI-ready agents instantly with Digital API

One of the most time-consuming aspects of making your APIs AI-ready is building and maintaining machine-consumable specifications. That’s where Digital API comes in, to speed up the transition from traditional developer-focused APIs to fully AI-compatible, MCP-compliant endpoints.

With a single click, our platform can generate OpenAPI specifications and convert APIs to MCP servers that conform to the standards required by large language models and autonomous agents. The platform can host MCP servers that expose these specs in real time, ensuring AI agents can discover and interact with your API dynamically.

By removing the manual effort of structuring your API for machine interpretation, we make it simple to unlock agent compatibility without re-architecting your backend. Whether you're building internal microservices or public-facing APIs, Digital API bridges the gap between traditional infrastructure and the future of autonomous, AI-driven integration.

You’ve spent years battling your API problem. Give us 60 minutes to show you the solution.
