API Gateway vs Service Mesh: What's the difference?

Updated on: September 14, 2025

Microservices have changed how applications are built, scaled, and managed. But with distributed systems comes complexity, especially in how services communicate securely, reliably, and efficiently. That’s where two powerful patterns emerge: API Gateways and Service Meshes.

They might seem similar at first glance, since both handle network traffic and aim to improve performance and security, but they solve different problems. One manages the entry point for client requests, while the other governs how services talk to each other within your infrastructure.

Understanding the distinction can help you choose the right one, or use both correctly, reducing complexity, cost, and operational overhead. This guide breaks down what each does, how they differ, when to use one or both, and how to make the right call based on your architecture.

What is an API Gateway?

An API Gateway is a server that acts as the single entry point for all client requests to a system of microservices. Instead of having external clients interact directly with dozens of backend services, the gateway handles the initial request, routes it to the appropriate service, and returns the response back to the client. This simplifies communication, reduces coupling, and centralises concerns like authentication, rate limiting, and traffic shaping.

Beyond routing, an API Gateway also handles tasks such as authorisation, request transformation, response caching, and protocol translation (e.g., from HTTP to gRPC or WebSockets). By abstracting these cross-cutting concerns, it lets each microservice remain focused on business logic rather than infrastructure.
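To make the routing and authentication roles described above concrete, here is a minimal sketch in Python. It is not how any particular gateway product is implemented; the route table, the service names, the `demo-key` API key, and the helper names (`resolve_backend`, `handle_request`) are all hypothetical and exist only for illustration.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical route table: path prefix -> backend service name.
ROUTES = {
    "/orders": "orders-service",
    "/orders/returns": "returns-service",
    "/users": "users-service",
}

# Hypothetical API keys; a real gateway would usually validate tokens
# against an identity provider instead of a hard-coded set.
VALID_API_KEYS = {"demo-key"}


@dataclass
class GatewayResponse:
    status: int
    backend: Optional[str] = None


def resolve_backend(path: str) -> Optional[str]:
    """Return the backend for the longest matching path prefix, or None."""
    matches = [p for p in ROUTES if path == p or path.startswith(p + "/")]
    if not matches:
        return None
    return ROUTES[max(matches, key=len)]


def handle_request(path: str, api_key: Optional[str]) -> GatewayResponse:
    """Authenticate at the edge first, then pick a backend for the request."""
    if api_key not in VALID_API_KEYS:
        return GatewayResponse(status=401)
    backend = resolve_backend(path)
    if backend is None:
        return GatewayResponse(status=404)
    return GatewayResponse(status=200, backend=backend)
```

Because the authentication check sits in front of routing, none of the backend services in this sketch needs to know about API keys, which is the kind of decoupling the gateway is meant to provide.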

API Gateways are especially valuable in systems where services are exposed to third-party developers, mobile apps, or external consumers. They offer a layer of protection and control between your internal architecture and the outside world.

What is a Service Mesh?

A Service Mesh is a dedicated infrastructure layer that manages how services within a distributed system communicate with each other. Unlike an API Gateway, which handles external requests, a service mesh governs internal (east-west) traffic, the communication between microservices running inside your architecture.

A service mesh provides features like service discovery, load balancing, encryption (mTLS), traffic routing, circuit breaking, and observability, without requiring developers to embed these capabilities in their application code. It does this by deploying lightweight proxies (sidecars) alongside each service instance, intercepting and managing all service-to-service communication transparently.

This model allows engineering teams to gain fine-grained control over traffic behaviour and enforce policies consistently across microservices, which is crucial in dynamic, containerised environments like Kubernetes. For example, you can implement canary deployments, retries, or failovers centrally, without modifying the services themselves.
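A canary deployment, as mentioned above, usually comes down to weighted routing between two versions of a service. The sketch below shows that idea in plain Python. The version names and the 90/10 split are hypothetical, and a real mesh would express this as proxy configuration rather than application code.

```python
import random
from typing import Dict


def pick_version(weights: Dict[str, int], rng: random.Random) -> str:
    """Pick a service version at random, in proportion to its traffic weight."""
    versions = list(weights)
    return rng.choices(versions, weights=[weights[v] for v in versions], k=1)[0]


# Hypothetical split: send roughly 10% of calls to the canary release.
CANARY_SPLIT = {"checkout-v1": 90, "checkout-v2": 10}

rng = random.Random(42)  # seeded only so that repeated runs behave the same
sample = [pick_version(CANARY_SPLIT, rng) for _ in range(10_000)]
canary_share = sample.count("checkout-v2") / len(sample)
print(f"share of calls sent to the canary: {canary_share:.1%}")
```

Shifting more traffic to the new version then only requires changing the weights, not the services themselves.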

While service meshes are powerful, they also introduce operational overhead and require a higher level of infrastructure maturity. They're best suited for large-scale microservice environments where reliability, observability, and security across internal traffic are critical.

Key differences between API Gateway and Service Mesh

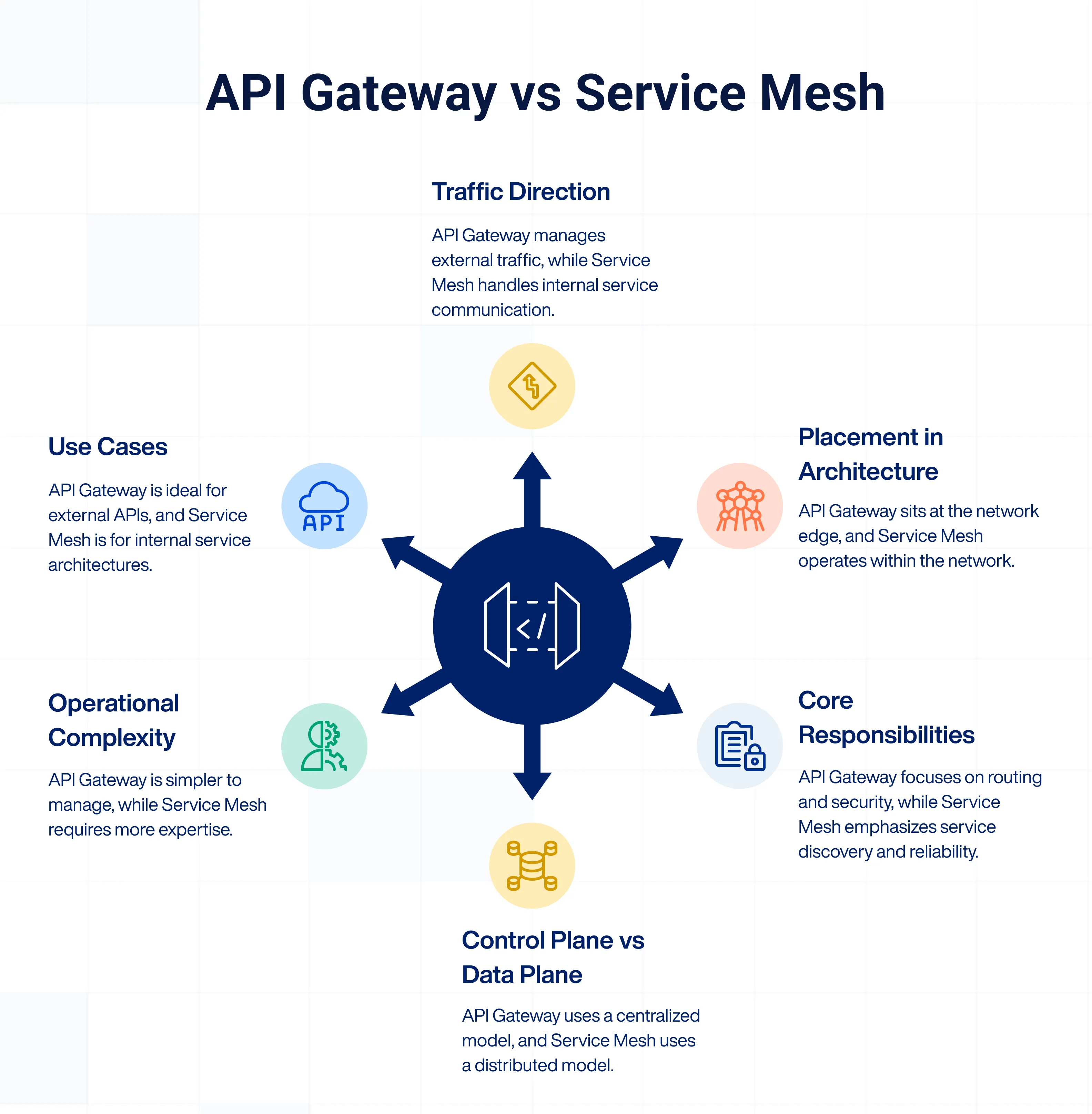

API Gateways and Service Meshes both deal with service communication and traffic control, but they operate in different parts of the architecture and solve distinct problems. Understanding their differences helps in choosing the right tool or knowing when to use both together. Let’s break it down by key aspects:

1. Traffic direction

API Gateway: Manages north-south traffic, i.e., communication between external clients and internal services, by acting as the entry point for all inbound API requests.

Service Mesh: Handles east-west traffic, which refers to service-to-service communication inside the application network. It ensures reliable and secure connections between internal microservices.

2. Placement in architecture

API Gateway: Sits at the edge of the network, intercepting and managing all external requests before they reach backend services.

Service Mesh: Operates within the network, with sidecar proxies deployed alongside each service instance, intercepting internal calls.

3. Core responsibilities

API Gateway: Focuses on request routing, rate limiting, authentication, request/response transformation, and API versioning.

Service Mesh: Focuses on service discovery, traffic shaping, retries, circuit breaking, observability, and mutual TLS encryption.
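Circuit breaking, one of the mesh responsibilities listed above, can be sketched as a small state machine. This is a simplified illustration, not the behaviour of any specific proxy: the class name, the threshold, and the timeout are hypothetical, time is passed in explicitly to keep the logic easy to follow, and the half-open state lets every request through rather than a single trial request.

```python
from typing import Optional


class CircuitBreaker:
    """Stops calling a failing service until a cool-down period has passed."""

    def __init__(self, failure_threshold: int, reset_timeout: float) -> None:
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.failures = 0
        self.opened_at: Optional[float] = None  # None means the circuit is closed

    def allow_request(self, now: float) -> bool:
        if self.opened_at is None:
            return True
        # Once the timeout has elapsed, allow trial traffic again (half-open).
        return now - self.opened_at >= self.reset_timeout

    def record_success(self) -> None:
        # A successful call closes the circuit and clears the failure count.
        self.failures = 0
        self.opened_at = None

    def record_failure(self, now: float) -> None:
        self.failures += 1
        if self.failures >= self.failure_threshold:
            self.opened_at = now  # open (or re-open) the circuit
```

In a mesh, this bookkeeping lives in the sidecar proxy, so the calling service sees a fast failure instead of waiting on a dependency that is already struggling.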

4. Control plane vs data plane

API Gateway: Often includes both control and data planes in a centralised model, where policies are applied through configuration at the gateway level.

Service Mesh: Uses a distributed model where the control plane (e.g., istiod in Istio) manages configuration, while the data plane (sidecar proxies like Envoy) enforces policies locally.
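The split between a control plane and a data plane can be illustrated with a toy model: one object holds the desired policy, and many proxies each keep a local copy to enforce. The class names, service names, and policy keys below are hypothetical and do not correspond to the API of any real mesh.

```python
from typing import Dict, List


class SidecarProxy:
    """Data plane: enforces whatever policy it was last given, next to one service."""

    def __init__(self, service: str) -> None:
        self.service = service
        self.policy: Dict[str, int] = {}

    def apply(self, policy: Dict[str, int]) -> None:
        self.policy = dict(policy)  # keep a local copy; enforcement happens locally


class ControlPlane:
    """Control plane: holds the desired policy and pushes it to every registered proxy."""

    def __init__(self) -> None:
        self.policy: Dict[str, int] = {}
        self.proxies: List[SidecarProxy] = []

    def register(self, proxy: SidecarProxy) -> None:
        self.proxies.append(proxy)
        proxy.apply(self.policy)  # new proxies start with the current policy

    def set_policy(self, **changes: int) -> None:
        self.policy.update(changes)
        for proxy in self.proxies:
            proxy.apply(self.policy)


control_plane = ControlPlane()
orders = SidecarProxy("orders")
payments = SidecarProxy("payments")
control_plane.register(orders)
control_plane.register(payments)
control_plane.set_policy(max_retries=2, timeout_ms=500)
```

The point of the sketch is that a policy is changed in one place while being enforced in many, which is what distinguishes this model from a single centralised gateway.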

5. Operational complexity

API Gateway: Simpler to deploy and manage. Typically requires fewer infrastructure changes and is easier to integrate for teams starting out with microservices.

Service Mesh: Requires more infrastructure maturity and operational expertise. It can add complexity, especially in Kubernetes environments, due to its distributed nature.

6. Use cases

API Gateway: Ideal for exposing services to external clients, implementing security at the edge, and managing public or partner APIs.

Service Mesh: Best suited for large, internal service architectures that need fine-grained control over inter-service communication, telemetry, and reliability.

7. Protocol support

API Gateway: Primarily designed for HTTP/HTTPS protocols. Some gateways also support WebSockets, gRPC, and GraphQL, but HTTP-based APIs are the default.

Service Mesh: Often supports a broader range of protocols, including HTTP/HTTPS, gRPC, TCP, and even raw TLS, since it operates at both Layer 4 and Layer 7 of the OSI model.

8. Developer vs platform team ownership

API Gateway: Typically owned and managed by API product teams or platform teams, often with developers directly configuring routing and authentication.

Service Mesh: Usually managed by DevOps or SRE teams, as it’s more infrastructure-heavy and tightly integrated with the service orchestration layer (e.g., Kubernetes).

9. Latency impact

API Gateway: Adds one extra hop at the request entry point. The performance impact is predictable and can be reduced through caching and load balancing.

Service Mesh: Adds latency on every internal call, because each request passes through the sidecar proxies of both the calling and the receiving service. Requires careful tuning to avoid performance overhead.
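A rough back-of-the-envelope calculation shows why the two cases differ. The figures below are hypothetical placeholders, not measurements of any product; the sketch assumes a sidecar model in which each internal hop crosses two proxies (one on the caller side, one on the callee side) and that the hops happen one after another.

```python
def added_latency_ms(gateway_ms: float, sequential_hops: int, per_proxy_ms: float) -> float:
    """Estimate the latency added by one gateway crossing plus the sidecars on each hop."""
    sidecar_crossings = sequential_hops * 2  # caller-side and callee-side proxy per hop
    return gateway_ms + sidecar_crossings * per_proxy_ms


# Hypothetical numbers: 2 ms at the gateway, 0.5 ms per sidecar crossing.
gateway_only = added_latency_ms(gateway_ms=2.0, sequential_hops=0, per_proxy_ms=0.5)
with_mesh = added_latency_ms(gateway_ms=2.0, sequential_hops=4, per_proxy_ms=0.5)
print(gateway_only, with_mesh)
```

Under these assumptions the gateway cost is paid once per request, while the mesh cost grows with the depth of the internal call chain, which is why tuning matters more as services call each other more.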

10. Observability scope

API Gateway: Provides observability at the entry/exit points of the system, good for understanding how external consumers are interacting with APIs.

Service Mesh: Provides deep observability into internal service calls, retry logic, error rates, and service dependency graphs, all of which are crucial for debugging distributed applications.

Benefits of using an API Gateway vs Service Mesh

API Gateways and Service Meshes aren’t competing tools; they serve different purposes and come with their own advantages. Understanding the unique benefits of each helps you deploy them where they provide the most value, whether independently or together in a layered architecture.

Benefits of using an API gateway

- Centralised entry point for all client requests to internal services

- Authentication, rate limiting, and access control are handled consistently at the edge

- Request and response transformation, such as protocol translation (e.g., REST to gRPC)

- Simplifies API management for public, partner, or mobile-facing APIs

- Supports API versioning, quotas, and monetisation strategies

- Built-in caching to improve performance and reduce backend load

- Developer-friendly documentation and testing tools are often bundled in

- Easier onboarding for teams transitioning from monoliths to microservices
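Rate limiting and quotas, listed among the benefits above, are commonly built on a token bucket. The following is a minimal sketch of that technique, assuming one bucket per client; the class name and the example capacity and refill rate are hypothetical, and the current time is passed in explicitly so the behaviour is easy to reason about.

```python
class TokenBucket:
    """Allows short bursts up to `capacity`, then a steady `refill_per_second` rate."""

    def __init__(self, capacity: float, refill_per_second: float, now: float = 0.0) -> None:
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self.tokens = capacity  # start with a full bucket
        self.last_refill = now

    def allow(self, now: float) -> bool:
        # Add the tokens earned since the last call, capped at the bucket size.
        elapsed = max(0.0, now - self.last_refill)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_second)
        self.last_refill = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0  # spend one token for this request
            return True
        return False  # the gateway would typically answer with HTTP 429 here


# Hypothetical limit: bursts of 2 requests, 1 request per second sustained.
bucket = TokenBucket(capacity=2, refill_per_second=1.0)
decisions = [bucket.allow(now=0.0) for _ in range(3)]
```

Applying the limit at the gateway means a misbehaving client is slowed down before its traffic reaches any backend service.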

Benefits of using a service mesh

- Fine-grained control over service-to-service communication

- Automatic retries, circuit breakers, and traffic shaping without changing app code

- Mutual TLS (mTLS) enables encrypted communication between internal services

- Consistent observability across services via telemetry, tracing, and metrics

- Decouples operational logic from business logic, simplifying app development

- Enables progressive delivery strategies like canary deployments and A/B testing

- Resilience and fault tolerance are built directly into the infrastructure layer

- Ideal for Kubernetes-native, large-scale microservice environments
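Automatic retries, mentioned in the list above, are what a sidecar does on the application's behalf. The sketch below shows the underlying technique (retry with exponential backoff) as ordinary Python, so the logic is visible. The function name, the default attempt count, and the delays are hypothetical, and treating only `ConnectionError` as retryable is a simplifying assumption.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def call_with_retries(
    operation: Callable[[], T],
    max_attempts: int = 3,
    base_delay: float = 0.1,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call `operation`, retrying on ConnectionError with exponential backoff."""
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except ConnectionError:
            if attempt == max_attempts:
                raise  # out of attempts: surface the failure to the caller
            # Wait base_delay, then 2x, then 4x, ... before the next attempt.
            sleep(base_delay * 2 ** (attempt - 1))
    raise ValueError("max_attempts must be at least 1")
```

When a mesh provides this behaviour, code like this does not need to live in each service, which is the decoupling of operational logic from business logic described above.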

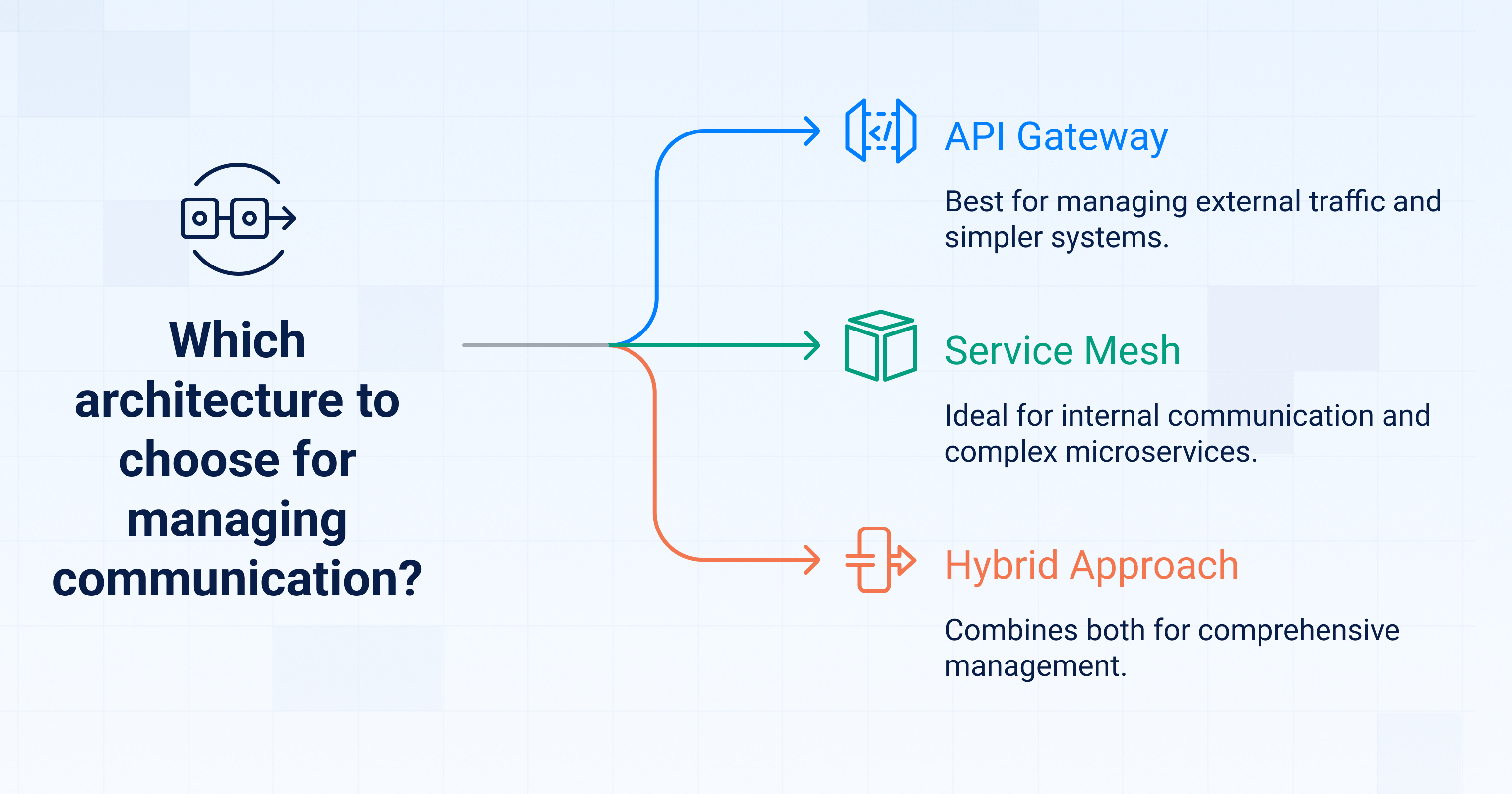

How do you choose between an API Gateway and a Service Mesh?

Choosing between an API Gateway and a Service Mesh depends on where the communication happens (external vs internal), your system’s scale and complexity, and your team’s operational readiness. You don’t always need both, at least not at the same time. Here’s how to decide:

1. Identify the traffic type

- If you're managing external (north-south) traffic → consider an API Gateway.

- If you're managing internal (east-west) communication → look into a Service Mesh.

2. Evaluate your team's maturity and tooling

- If your team is new to distributed systems, start with an API Gateway.

- If you're already deep into Kubernetes, observability, and secure service-to-service comms, a Service Mesh may be a better fit.

3. Assess the complexity of your services

- Small to mid-sized applications may only need an API Gateway.

- Large-scale microservice architectures benefit more from a Service Mesh.

4. Consider hybrid scenarios

Many mature platforms use both: the API Gateway handles ingress, and the Service Mesh manages internal routing, resilience, and observability.
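The decision steps above can be summarised as a small helper. This is only a sketch of the heuristics in this section, not a substitute for an architecture review; the function name, the parameter names, and the returned labels are hypothetical.

```python
from typing import List


def recommend(
    exposes_external_apis: bool,
    many_internal_services: bool,
    team_operates_kubernetes: bool,
) -> List[str]:
    """Map the heuristics from this section onto a list of suggested tools."""
    tools: List[str] = []
    if exposes_external_apis:
        tools.append("api-gateway")  # north-south traffic
    if many_internal_services and team_operates_kubernetes:
        tools.append("service-mesh")  # east-west traffic, with the maturity to run it
    # Teams that match neither rule are advised to start with a gateway.
    return tools or ["api-gateway"]


print(recommend(True, True, True))
```

A platform that exposes public APIs and runs many internal services on Kubernetes ends up with both tools, which matches the hybrid scenario described above.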

Can you use API Gateway and Service Mesh together?

Yes, you can use API Gateway and Service Mesh together, and in many cases, you should. While they solve different problems, they complement each other well in a modern microservices architecture.

The API Gateway serves as the first line of defence at the edge of your system. It handles external (north-south) traffic, managing tasks like authentication, rate limiting, request transformation, and routing incoming API calls to the appropriate backend services. It also acts as a single point of entry, helping you expose your APIs securely and consistently to external consumers.

On the other hand, the Service Mesh operates inside your infrastructure, managing internal (east-west) communication between microservices. It enables features like service discovery, traffic shifting, retries, circuit breaking, mTLS encryption, and observability, without requiring code changes in the services themselves.

By using both, you create a layered control model: the API Gateway governs how clients interact with your platform, while the Service Mesh governs how services interact with each other. This combination is especially powerful in Kubernetes environments, where security, observability, and resilience are critical.

Many teams start with an API Gateway and later adopt a Service Mesh as their architecture matures. Used together, they provide end-to-end traffic management, improved security, and better visibility, without overloading application developers with infrastructure concerns.

FAQs

1. Is a service mesh the same as an API gateway?

No, a service mesh and an API gateway are not the same. An API gateway manages external (north-south) traffic from clients to services, while a service mesh manages internal (east-west) communication between services. They solve different problems and often work together in a modern microservices architecture.

2. Do I need both an API gateway and a service mesh?

You don’t always need both, but many complex systems benefit from using them together. The API gateway secures and routes external requests, while the service mesh handles internal service-to-service traffic, retries, and observability. As your architecture scales, combining both can improve security, control, and reliability across the entire service landscape.

3. Can a service mesh replace an API gateway?

In most cases, no. A service mesh is designed for internal communication, not for exposing APIs to external clients. Although a mesh can authenticate services to each other (for example, with mTLS), it typically lacks gateway features aimed at external consumers, such as per-client rate limiting and quotas or API versioning. You still need an API gateway to manage ingress traffic and secure your services from external access points.

4. Which is better: API gateway or service mesh?

Neither is universally “better” as they serve different purposes. Use an API gateway to manage external traffic and expose APIs. Use a service mesh to secure and manage internal service communication. The right choice depends on your use case, traffic patterns, and infrastructure maturity. Often, they work best together.

5. Does Kubernetes need a service mesh?

Kubernetes doesn’t require a service mesh, but using one adds powerful capabilities like traffic control, retries, mTLS, and observability across services. If your workloads are growing and need resilience and visibility, a service mesh like Istio or Linkerd can help. For simpler deployments, Kubernetes networking may be enough initially.
