
Tyk vs Kong: The architect’s guide to performance, governance, and AI-readiness


TL;DR

Tyk is a "batteries-included" platform (single Go binary) designed for instant stability and complex logic, while Kong is a modular toolkit (NGINX-based) optimized for raw throughput and extensive customization.

Architecturally, Tyk simplifies operations with a compiled binary and centralized governance, whereas Kong requires deep NGINX tuning and managing a decentralized plugin ecosystem.

For modern workloads, Tyk wins on native GraphQL stitching and consistent latency for AI streams, while Kong remains the king of high-volume, simple edge routing.

Operational costs differ significantly: Tyk open-sources its gateway and analytics pump and pairs them with a separately licensed dashboard, while Kong gates its GUI and visual metrics behind enterprise subscriptions.

The future isn't just about the gateway; it is about the control plane. DigitalAPI unifies both Tyk and Kong into a single AI-ready platform, automating documentation and converting APIs into AI Agents.

In the high-stakes world of cloud-native architecture, the API Gateway is no longer just a doorman. It is the central nervous system of your infrastructure. For CTOs, API Architects, and DevOps leads, the choice of an API Gateway often dictates the agility, security, and scalability of the entire organization for years to come.

The market is flooded with options, yet the conversation almost always narrows down to two heavyweight contenders: Tyk and Kong. Both are open-source, widely adopted, and battle-tested, yet they represent two fundamentally different philosophies in API management. Choosing between them isn't just about picking a tool; it’s about choosing a stack, a governance model, and an operational workflow. This guide compares the two across those dimensions and then explores why modern AI-driven enterprises are looking beyond legacy gateways toward AI-first alternatives.

Understanding Tyk

Tyk is an open-source API Gateway and Management Platform written entirely in Go (Golang). Born out of the need for a lightweight, highly performant, and easy-to-deploy gateway, Tyk has gained massive popularity for its "batteries-included" philosophy. Unlike many competitors that rely on heavy external dependencies or complex plugin ecosystems for basic functionality, Tyk compiles into a single binary.

This Go-based architecture allows Tyk to offer impressive parallelism and low latency, making it a favorite for modern engineering teams who prefer the operational simplicity of a compiled language. Tyk is often praised for its Developer Experience (DX): the open-source gateway and analytics pump pair with a fully functional dashboard (a separately licensed component), which lowers the barrier to entry for teams needing immediate visibility.

Understanding Kong

Kong is arguably the most widely recognized name in the API gateway space. Built on top of NGINX and utilizing OpenResty (LuaJIT), Kong inherits the legendary stability and raw throughput of NGINX. It is designed as a modular toolkit: the core gateway is lean, lightweight, and focused purely on routing traffic, while almost all advanced logic, from authentication to rate limiting, is offloaded to a vast ecosystem of plugins.

Kong’s philosophy is rooted in extensibility. Because it relies on NGINX, it fits naturally into environments where Ops teams already possess deep NGINX expertise. It is the default choice for organizations prioritizing maximum Requests Per Second (RPS) and those who prefer a "build-your-own-platform" approach by stitching together various plugins to meet specific needs.

Main factors to consider when choosing between Tyk and Kong

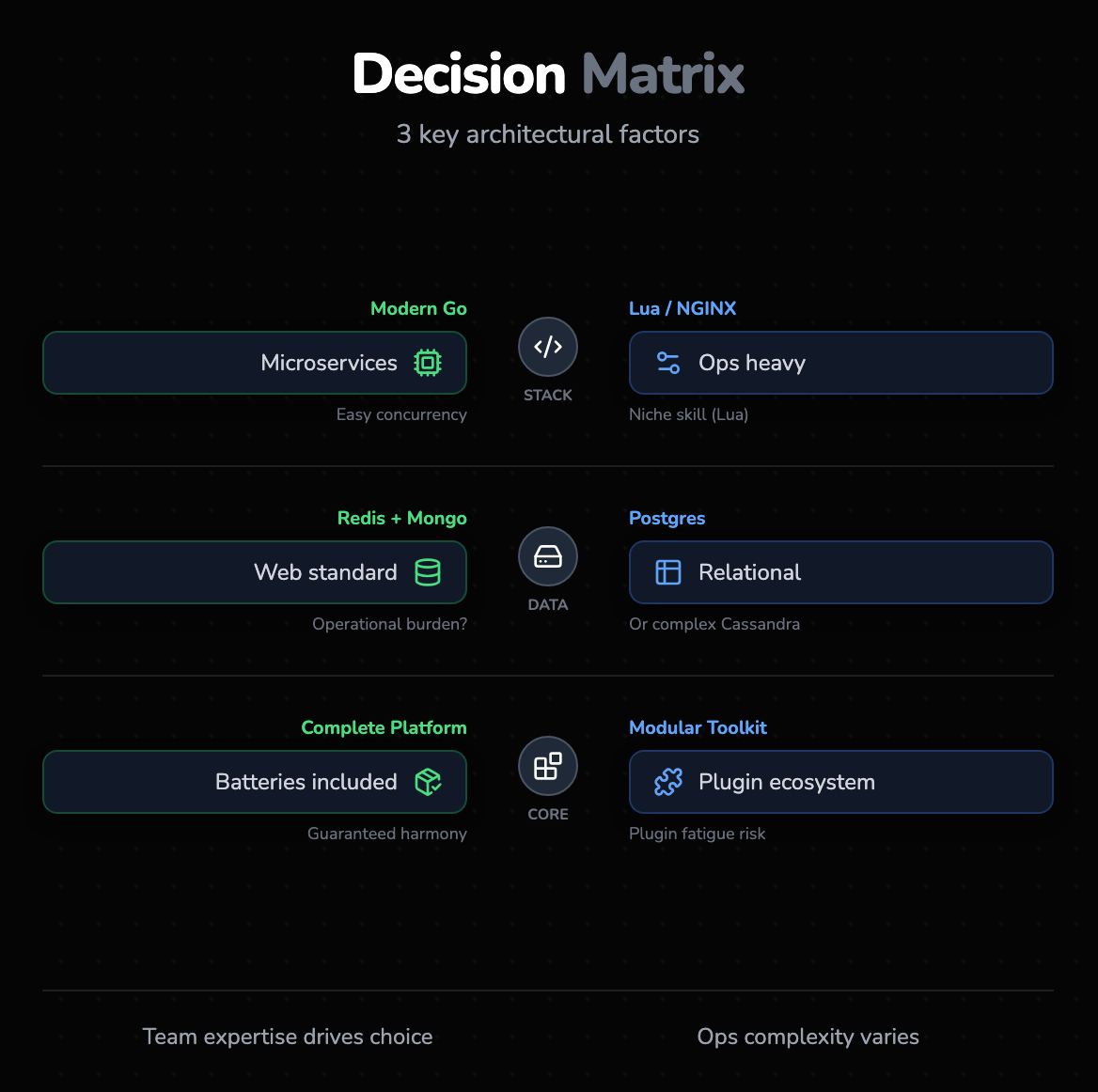

Before diving into feature-by-feature comparisons, it is crucial to frame the decision around three high-level architectural considerations. These are the factors that will impact your engineering team long after the contract is signed.

1. The tech stack

Your team’s existing expertise should weigh heavily on your decision.

- Tyk (Go): If your backend infrastructure is moving toward Kubernetes, Docker, and Go-based microservices, Tyk fits seamlessly. Go’s static typing and concurrency model (Goroutines) make it easier for modern backend developers to write custom plugins or understand the gateway’s behavior.

- Kong (Lua/NGINX): If your Ops team consists of NGINX veterans who can tune nginx.conf files in their sleep, Kong will feel like home. However, customizing Kong often requires writing Lua scripts. Lua is fast and lightweight, but it is a niche skill compared to Go, potentially creating a "bus factor" risk if only one or two engineers understand your custom gateway logic.

2. Database requirements

Both gateways require backing databases to store configuration, policies, and keys, but their choices have different operational footprints.

- Tyk: Primarily relies on Redis for hot data (rate-limiting counters, keys, and tokens) and MongoDB for long-term storage and analytics. This is a common stack for web applications, but managing a MongoDB cluster at scale is a distinct operational burden.

- Kong: Typically uses PostgreSQL or Cassandra. PostgreSQL is ubiquitous and reliable for most use cases. Cassandra is used for multi-datacenter, high-availability setups but is notoriously complex to manage.

3. Built-in features vs. plugins

- Tyk: Adopts a "Complete Platform" approach. OIDC, complex auth flows, and extensive rate limiting are baked into the core binary. They are optimized, tested, and guaranteed to work together.

- Kong: Adopts a "Modular Toolkit" approach. The core is deliberately minimal, so you select, enable, and configure a plugin for each feature you need. This offers flexibility (you don't load what you don't use) but introduces "plugin fatigue," where upgrading the core gateway might break a third-party plugin you rely on.

Detailed comparison: Tyk vs Kong

Ultimately, the choice between Tyk and Kong is a choice between a "Complete Platform" and a "Modular Toolkit." Tyk aims to give you a finished product out of the box, minimizing the time to the first API call. Kong aims to give you a high-performance engine and a box of parts, allowing you to assemble exactly the machine you want, provided you have the engineering time to build it.

1. Architecture and operational complexity

The architectural choice between a compiled Go binary and an NGINX wrapper fundamentally shapes your deployment strategy. This decision impacts everything from dependency management to routine maintenance. DevOps teams must evaluate whether they prefer the simplicity of a single binary or the granular control of a multi-layered web server stack.

The architectural divergence between Tyk and Kong is the root of all their performance and operational differences.

Tyk: the Go binary

Tyk is a compiled Go binary. This provides distinct advantages in terms of deployment simplicity: you drop the binary onto a server or into a container, and it runs. Go’s garbage collection and concurrency model allow Tyk to handle complex processing logic (like transformation and validation) with very stable latency.

Kong: the NGINX wrapper

Kong is effectively a Lua application running inside NGINX. This architecture is practically unbeatable for raw throughput. NGINX’s event loop is legendary for handling tens of thousands of connections with minimal overhead. However, this performance comes with complexity. When a request hits Kong, it passes through the NGINX worker, then into the OpenResty Lua environment, passes through a chain of Lua plugins, and then goes upstream.
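The request path described above can be modelled in a few lines. The following is a simplified Python sketch, not Kong's actual implementation: the `Plugin` class, the plugin names, and the priority values are hypothetical, and the only behavior it assumes is that plugins run in descending priority order before the request goes upstream.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Request = Dict[str, str]


@dataclass
class Plugin:
    name: str
    priority: int  # illustrative values; higher priority runs earlier
    handler: Callable[[Request], Request]


def add_header(key: str, value: str) -> Callable[[Request], Request]:
    """Build a handler that returns a copy of the request with one extra header."""
    def handler(request: Request) -> Request:
        updated = dict(request)
        updated[key] = value
        return updated
    return handler


def run_chain(plugins: List[Plugin], request: Request) -> Request:
    """Pass the request through every plugin in descending priority order."""
    trace = []
    for plugin in sorted(plugins, key=lambda p: p.priority, reverse=True):
        request = plugin.handler(request)
        trace.append(plugin.name)
    request["x-plugin-trace"] = ",".join(trace)  # record the execution order
    return request


plugins = [
    Plugin("rate-limiting", 900, add_header("x-ratelimit-checked", "true")),
    Plugin("key-auth", 1000, add_header("x-consumer", "demo")),
]
result = run_chain(plugins, {"path": "/orders"})
print(result["x-plugin-trace"])
```

Every plugin in the chain adds a hop to the request path, which is why the number and quality of enabled plugins matter for latency.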

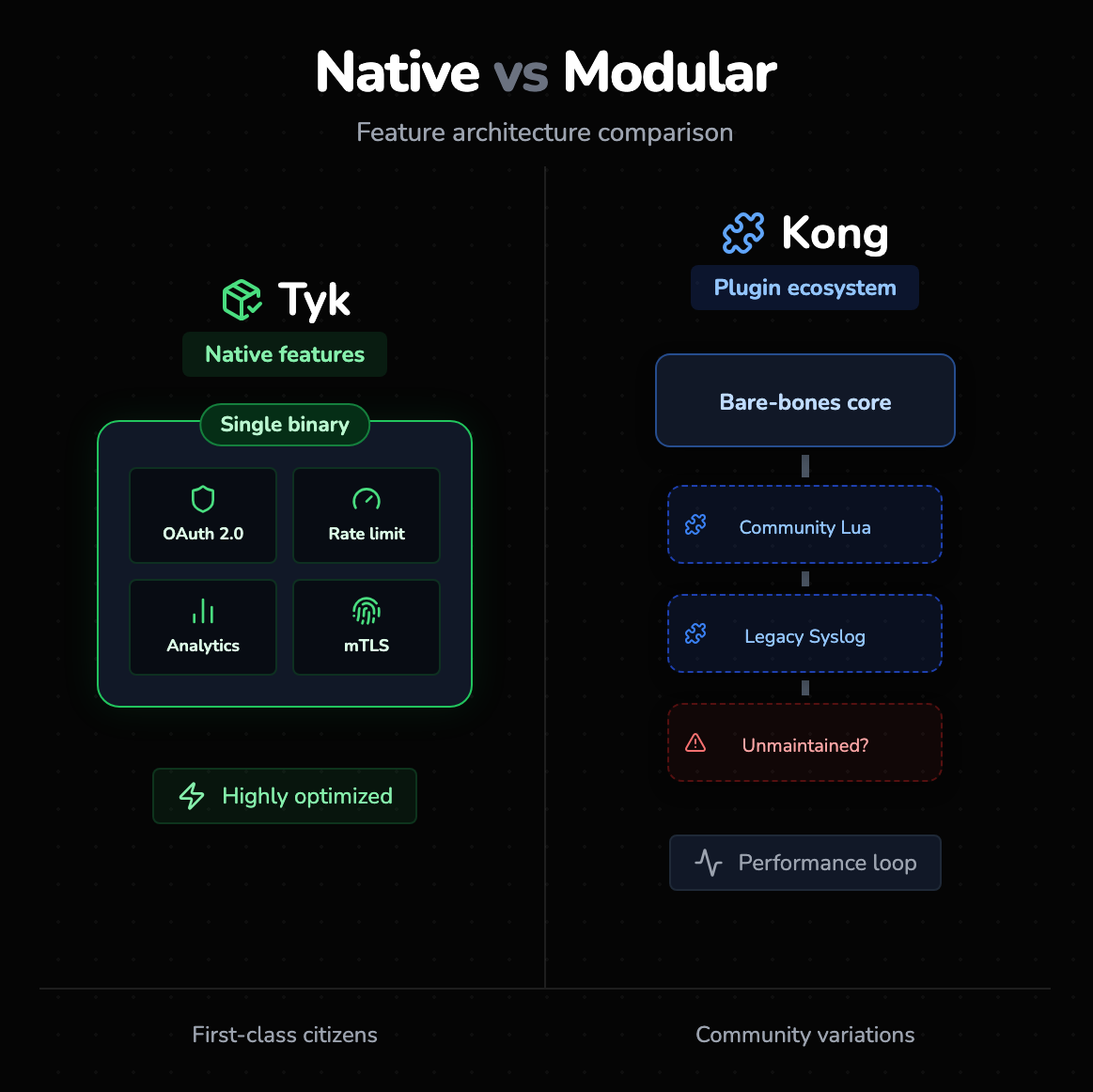

2. Core features and plugins

This comparison highlights the split between Tyk's "batteries-included" design and Kong's "build-your-own" toolkit approach. Architects must decide between a pre-compiled platform where features work instantly out-of-the-box, or a lean canvas that offers immense flexibility but requires significant effort to assemble and configure via plugins.

This is where the "Batteries Included" vs. "Plugin Marketplace" distinction becomes most apparent.

Tyk’s native capabilities

Tyk treats API Management features as first-class citizens. When you install Tyk, you immediately have access to advanced authentication methods (OAuth 2.0, OpenID Connect, mTLS), sophisticated rate limiting (including quota management and context-based limits), and detailed analytics. Because these are compiled into the binary, they are highly optimized.
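To make "rate limiting" concrete, here is a minimal fixed-window limiter in Python. It illustrates the general technique only and is not Tyk's algorithm; the class name, the key ID, and the limits are assumptions chosen for the example.

```python
from dataclasses import dataclass, field
from typing import Dict, Tuple


@dataclass
class FixedWindowLimiter:
    limit: int           # allowed requests per window
    window_seconds: int  # window length in seconds
    counters: Dict[Tuple[str, int], int] = field(default_factory=dict)

    def allow(self, key: str, now: float) -> bool:
        """Return True if this key still has budget in the current window."""
        window = int(now // self.window_seconds)
        bucket = (key, window)
        used = self.counters.get(bucket, 0)
        if used >= self.limit:
            return False
        self.counters[bucket] = used + 1
        return True


limiter = FixedWindowLimiter(limit=2, window_seconds=60)
# Three calls inside the first minute, then one call in the next window.
decisions = [limiter.allow("key-123", now=t) for t in (0, 10, 20, 61)]
print(decisions)
```

A production gateway would keep these counters in a shared store such as Redis so that every gateway node enforces the same limit.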

Kong’s plugin ecosystem

Kong’s core is intentionally bare-bones. To add functionality, you turn to the Plugin Hub. The advantage here is the sheer volume of community-contributed plugins. If you need a niche integration, say a specific logging output to a legacy syslogger, someone in the Kong community has likely written a Lua plugin for it. However, this modularity is a double-edged sword. Community plugins vary in quality, maintenance, and performance.

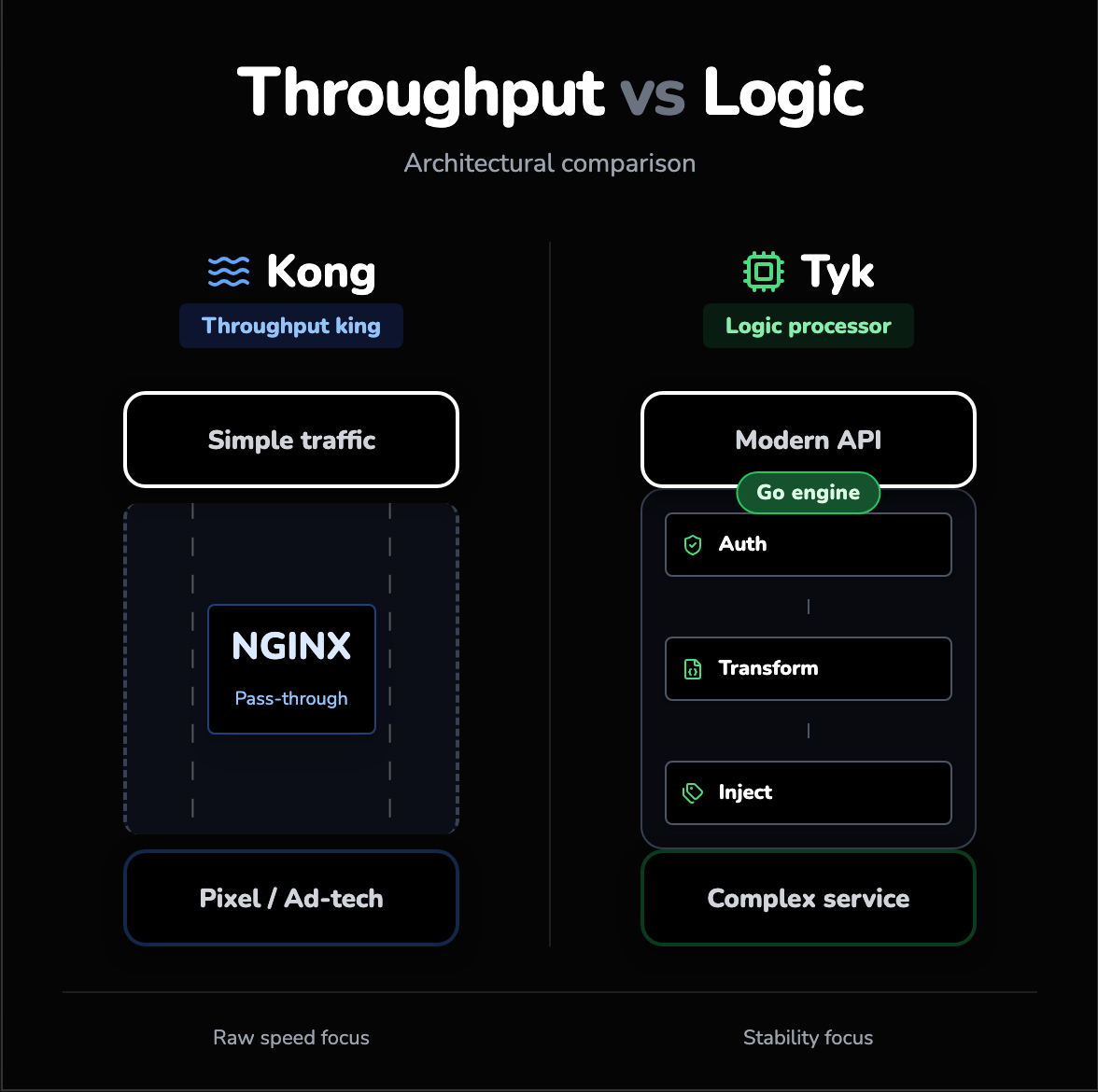

3. Speed and traffic handling

Both gateways are enterprise-grade, but the distinction lies in optimizing for raw throughput versus consistent low latency. High-volume edge routing favors one architecture, while complex API mediation involving data transformation requires another. Understanding this nuance is vital for modern AI and financial applications where stable processing times trump raw speed.

When reading benchmarks, it is easy to get lost in "Requests Per Second" (RPS), but for modern Architects, the nuance lies in Throughput vs. Latency.

Kong: the throughput king

If your primary requirement is to pipe a massive volume of simple requests (e.g., ad-tech pixel tracking or simple pass-through traffic) where every microsecond of overhead counts, Kong is likely the winner. NGINX is optimized for this exact scenario. It can saturate a 10 Gbps link more efficiently than almost anything else.

Tyk: the logic processor

However, most modern APIs are not just pass-through pipes; they are intelligent proxies. They validate tokens, transform JSON to XML, inject headers, and aggregate data. In scenarios where the gateway performs work, Tyk often shines. Go is excellent at CPU-bound tasks. As the complexity of request processing increases, Tyk’s latency tends to remain more consistent compared to Kong, where heavy logic in Lua scripts can start to tax the JIT compiler.
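As a rough picture of what "the gateway performs work" means, the Python sketch below converts a flat JSON body to XML and injects headers before forwarding. It is only an illustration: the function names and the `X-Gateway` header are hypothetical, and nested JSON is deliberately left out to keep the example short.

```python
import json
import xml.etree.ElementTree as ET
from typing import Dict, Tuple


def json_to_xml(payload: str, root_tag: str = "request") -> str:
    """Convert a flat JSON object into a simple XML document."""
    data = json.loads(payload)
    root = ET.Element(root_tag)
    for key, value in data.items():
        child = ET.SubElement(root, key)
        child.text = str(value)
    return ET.tostring(root, encoding="unicode")


def mediate(headers: Dict[str, str], body: str) -> Tuple[Dict[str, str], str]:
    """Transform the body and inject headers, as a mediating gateway would."""
    new_headers = dict(headers)
    new_headers["Content-Type"] = "application/xml"
    new_headers["X-Gateway"] = "sketch"  # hypothetical injected header
    return new_headers, json_to_xml(body)


headers, body = mediate(
    {"Content-Type": "application/json"},
    '{"id": 7, "status": "open"}',
)
print(body)
```

Each step here (parsing, rebuilding, serializing) costs CPU time on every request, which is where the runtime's efficiency starts to show up in latency.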

The AI/LLM context

- Shift to Streaming: In the era of AI, we are moving from short, bursty REST requests to long-lived streaming connections (like ChatGPT responses).

- Tyk's Stability: Tyk’s architecture, leveraging Go’s concurrency, handles these long-lived connections and WebSockets with exceptional stability.

- NGINX Complexity: NGINX handles connections well, but the complexity of managing streaming timeouts and buffers in a Lua/NGINX stack can be higher than in a native Go environment.
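The difference between streaming and buffering can be shown with plain Python generators. This is a conceptual sketch, not gateway code: `upstream_tokens` stands in for an LLM backend and `stream_relay` for a proxy that forwards each chunk as soon as it arrives; both names are hypothetical.

```python
from typing import Iterable, Iterator, List


def upstream_tokens(log: List[str]) -> Iterator[str]:
    """Stand-in for an LLM backend that emits a response token by token."""
    for token in ["Hello", " ", "world"]:
        log.append(f"upstream produced {token!r}")
        yield token


def stream_relay(chunks: Iterable[str], log: List[str]) -> Iterator[str]:
    """Forward each chunk immediately instead of buffering the whole body."""
    for chunk in chunks:
        log.append(f"gateway forwarded {chunk!r}")
        yield chunk


events: List[str] = []
relay = stream_relay(upstream_tokens(events), events)

first = next(relay)                   # the client receives the first chunk
events_after_first_chunk = list(events)
rest = "".join(relay)                 # drain the remainder of the stream

print(first, events_after_first_chunk)
```

The event log shows the first chunk reaching the client before the upstream has produced the second one. A proxy that buffers the full response would instead delay the first byte until the stream ends, which is why buffer and timeout settings matter for AI traffic.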

4. Security and control

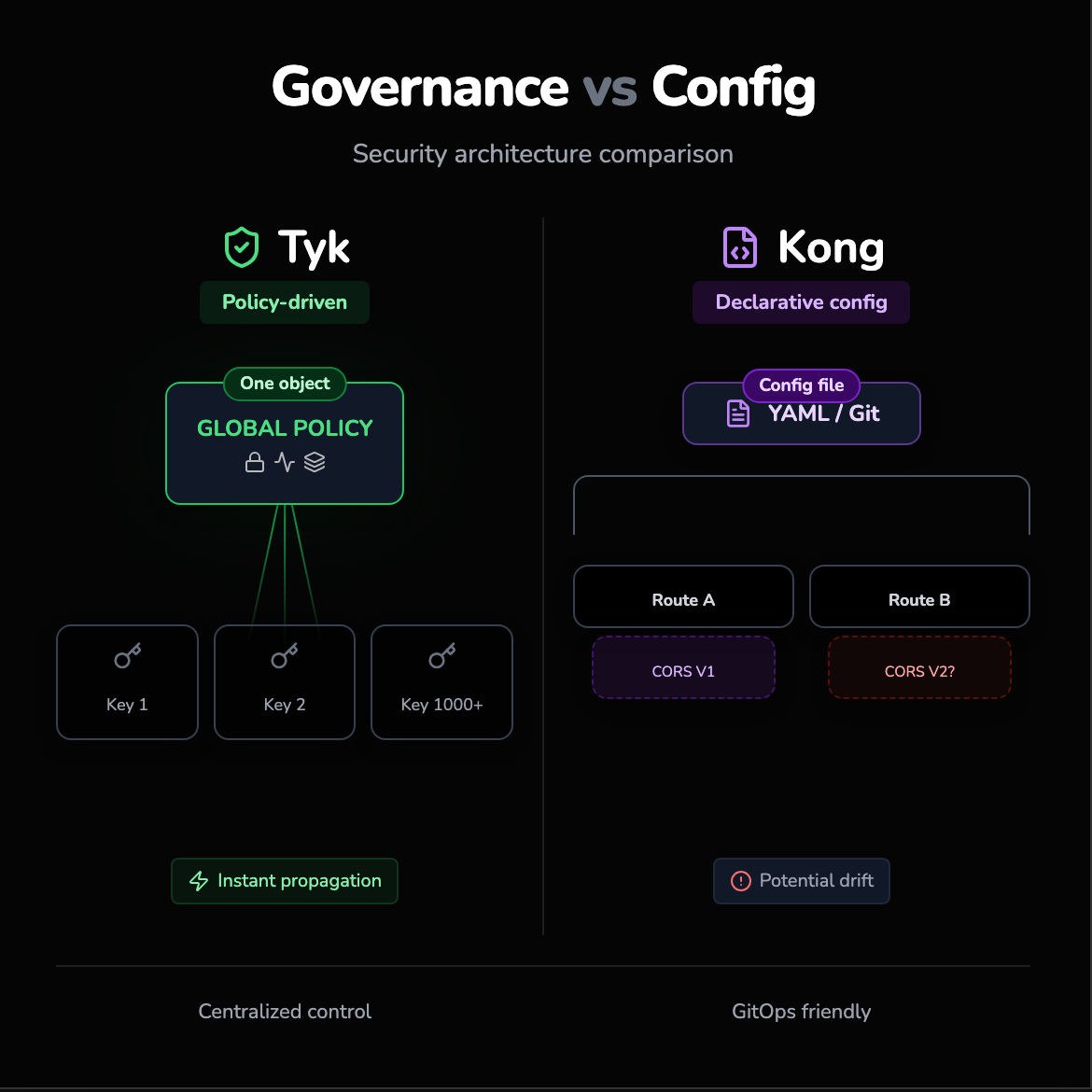

Effective governance depends on enforcing consistent policies without configuration drift. In large-scale environments, the mechanism used to apply security rules, whether via centralized policy objects or granular, route-specific plugin configurations, determines how well governance scales. This section examines how each platform balances rigid centralized control with flexible, service-specific security requirements.

Security is not just about blocking hackers; it's about governance: who can change what, and how easy is it to make a mistake?

Tyk’s policy-driven security

Tyk shines in centralized governance. It uses a "Policy" object that wraps all security rules (ACLs, rate limits, and quotas) into a single entity. You can apply a Policy to thousands of keys at once, which is crucial for large enterprises. If you need to change a rate limit or a quota tier, you update the Policy, and the change applies to every key that references it. Tyk also supports complex security flows like "Keyless Access" with fallback to specific auth methods, which is difficult to orchestrate in other gateways.
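The reason a policy change reaches every key is that keys hold a reference to the policy rather than a copy of its rules. The Python sketch below models that idea; the `Policy` and `ApiKey` classes and their fields are hypothetical and do not mirror Tyk's actual data model.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Policy:
    rate_per_minute: int
    quota_per_month: int
    allowed_apis: List[str]


@dataclass
class ApiKey:
    key_id: str
    policy_id: str  # a reference to the policy, not a copy of its rules


policies: Dict[str, Policy] = {
    "gold": Policy(rate_per_minute=600, quota_per_month=1_000_000,
                   allowed_apis=["orders", "billing"]),
}
keys = [ApiKey(f"key-{i}", "gold") for i in range(1000)]


def effective_policy(key: ApiKey) -> Policy:
    """Resolve the rules for a key at request time via its policy reference."""
    return policies[key.policy_id]


# One update to the policy changes the limit seen by all 1000 keys.
policies["gold"].rate_per_minute = 1200
print(effective_policy(keys[0]).rate_per_minute)
```

Because the rules live in one place, there is no per-key configuration that can fall out of sync.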

Kong’s declarative configuration

Kong (specifically in DB-less mode or using Kong Konnect) pushes for a declarative configuration model. You define your services, routes, and plugins in a YAML file and apply it. Although this is GitOps-friendly, the granularity of security is often tied to individual plugins attached to specific routes. This can lead to "configuration drift", where one route has the CORS plugin configured one way, and another route has it configured differently.
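Drift of this kind is easy to detect once the configuration is data. The Python sketch below groups routes by the exact settings of one plugin and reports when more than one variant exists. The route names and the dictionary layout are simplified assumptions for illustration, not Kong's declarative schema.

```python
import json
from collections import defaultdict
from typing import Dict, List

# Hypothetical, simplified view of per-route plugin settings.
routes = [
    {"name": "orders", "plugins": {"cors": {"origins": ["https://app.example.com"]}}},
    {"name": "billing", "plugins": {"cors": {"origins": ["*"]}}},
    {"name": "users", "plugins": {"cors": {"origins": ["https://app.example.com"]}}},
]


def find_drift(routes: List[dict], plugin_name: str) -> Dict[str, List[str]]:
    """Group routes by plugin config; return the groups only if they differ."""
    variants: Dict[str, List[str]] = defaultdict(list)
    for route in routes:
        config = route["plugins"].get(plugin_name)
        if config is not None:
            fingerprint = json.dumps(config, sort_keys=True)
            variants[fingerprint].append(route["name"])
    return dict(variants) if len(variants) > 1 else {}


drift = find_drift(routes, "cors")
for fingerprint, names in drift.items():
    print(names, "->", fingerprint)
```

A check like this could run in CI against the declarative file so that inconsistent plugin settings are caught before they are applied.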

5. Developer experience

The speed at which a new developer can publish their first secure API depends heavily on out-of-the-box tooling. In an era where "Developer Experience" is critical, the friction in onboarding defines success. We compare the accessibility of a GUI-driven approach with a CLI-first methodology to determine which one best suits your team.

How fast can a new developer publish an API?

Tyk: The visual dashboard

Tyk provides a fully functional GUI Dashboard, though it is licensed separately from the open-source Gateway, so check the current terms for your use case. This is a massive win for Developer Experience (DX). A developer can log in, click "Add API," set up an authentication token, and have a secured endpoint running in under a minute. Visualizing the traffic, errors, and latency graphs immediately helps developers understand their APIs without needing CLI mastery.

Kong: API-First / CLI

Kong is API-first. In the open-source version, there is no official GUI. You interact with the Admin API using curl commands or by applying YAML files via the CLI. To get a visual dashboard, you either need to pay for Kong Enterprise, use Kong Konnect (SaaS), or rely on third-party community dashboards like "Konga," which may lag behind the official release. For a DevOps engineer, this CLI approach is fine. For a frontend developer trying to debug a gateway issue, it is a significant friction point.
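For a sense of what the API-first workflow looks like, the Python sketch below builds the two Admin API calls that register a service and attach a route to it. It assumes the Admin API is reachable on its default port 8001 on localhost; the service name, upstream URL, and path are hypothetical. The requests are constructed but not sent, so the example runs without a gateway.

```python
import json
import urllib.request

ADMIN_URL = "http://localhost:8001"  # assumed local Admin API address


def admin_request(path: str, payload: dict) -> urllib.request.Request:
    """Build (but do not send) a JSON POST request to the Admin API."""
    return urllib.request.Request(
        url=f"{ADMIN_URL}{path}",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Register an upstream service, then expose it on a public path.
create_service = admin_request(
    "/services",
    {"name": "orders", "url": "http://orders.internal:8080"},
)
create_route = admin_request(
    "/services/orders/routes",
    {"name": "orders-route", "paths": ["/orders"]},
)

# Passing each request to urllib.request.urlopen() would send it to a running gateway.
for request in (create_service, create_route):
    print(request.get_method(), request.full_url)
```

The same two calls are what a curl-based workflow would issue, which is why this model suits scripted pipelines better than ad hoc debugging.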

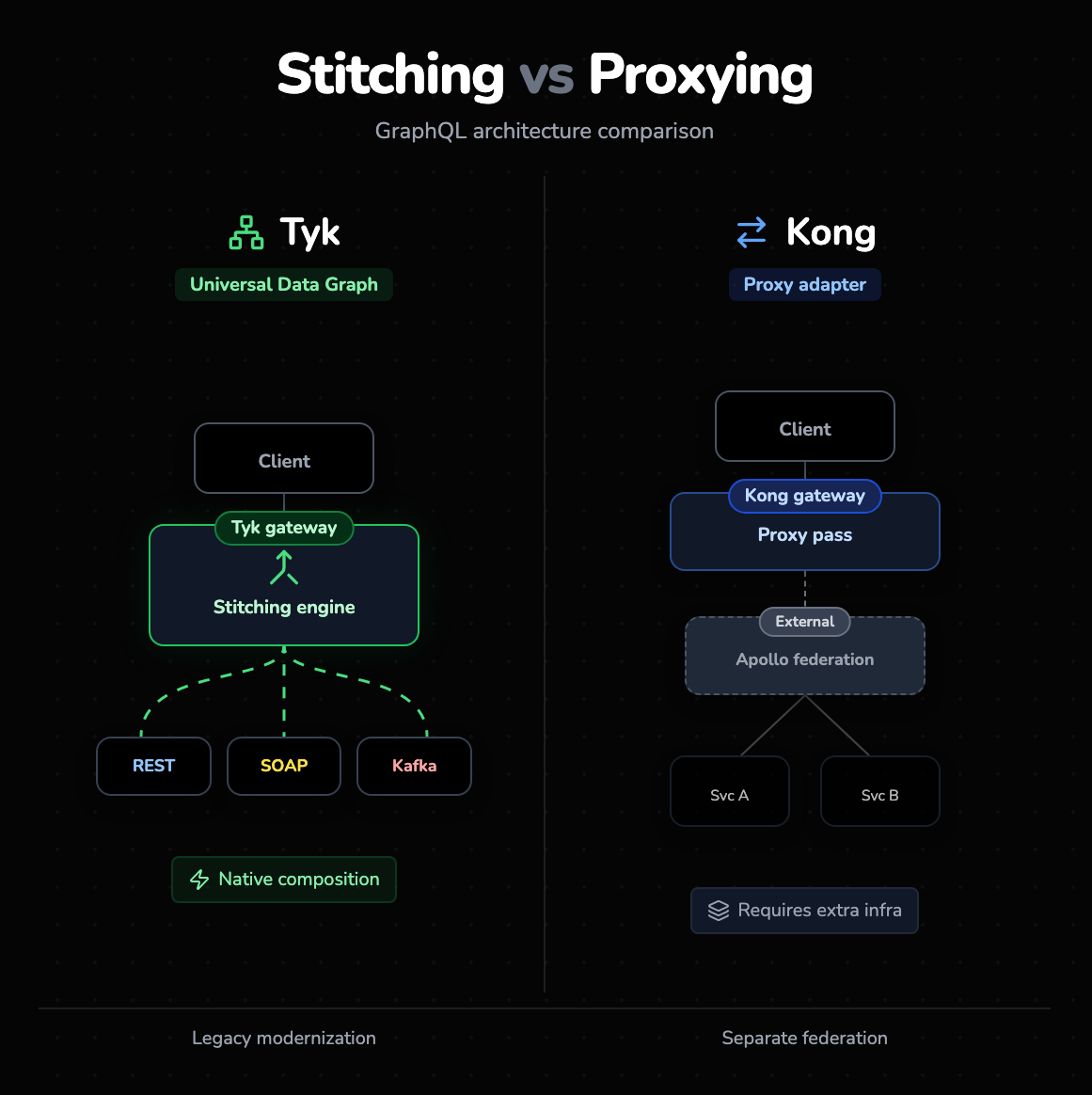

6. GraphQL and integrations

As API ecosystems evolve beyond REST, natively handling and stitching GraphQL schemas becomes a critical differentiator. Gateways must now act as intelligent mediation layers rather than simple proxies. This section explores whether you need a true Universal Data Graph or just a performant pass-through adapter for existing GraphQL servers.

Modern gateways must be more than REST proxies; they must be protocol-agnostic.

Tyk: Universal Data Graph (UDG)

Tyk has made a massive bet on GraphQL with its Universal Data Graph. This is not just a proxy; it’s a stitching engine built into the gateway. You can take multiple REST APIs, legacy SOAP services, and Kafka streams, and "stitch" them together into a single GraphQL schema exposed to the client. The gateway handles the complexity of fetching data from the different upstreams. This is a game-changer for organizations trying to modernize legacy tech without rewriting the backend.

Kong: Adapters and Proxying

Kong supports GraphQL, but largely via plugins that act as adapters. It can validate GraphQL queries and proxy them to a GraphQL server (like Apollo). However, it lacks the native "Stitching" capabilities of Tyk. If you want to compose a graph from multiple microservices in Kong, you usually need to run a separate Apollo Federation server behind Kong. In Tyk, the gateway is the federation server.
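Conceptually, stitching means mapping each field of one client-facing schema to a different upstream. The Python sketch below shows that idea with stub functions in place of real REST or SOAP calls. It is not Tyk's Universal Data Graph configuration; every name and value in it is hypothetical.

```python
from typing import Any, Callable, Dict, List


def fetch_user(user_id: str) -> Dict[str, Any]:
    """Stub for a REST upstream that returns a user profile."""
    return {"id": user_id, "name": "Ada"}


def fetch_orders(user_id: str) -> List[Dict[str, Any]]:
    """Stub for a second upstream (for example a legacy SOAP service)."""
    return [{"id": "o-1", "total": 42}]


# Each top-level field of the stitched schema maps to one upstream resolver.
RESOLVERS: Dict[str, Callable[[str], Any]] = {
    "user": fetch_user,
    "orders": fetch_orders,
}


def resolve(user_id: str, fields: List[str]) -> Dict[str, Any]:
    """Call only the upstreams needed for the requested fields and merge results."""
    return {name: RESOLVERS[name](user_id) for name in fields}


response = resolve("u-1", ["user", "orders"])
print(response)
```

The client sees one response shape, while the fan-out to multiple backends stays hidden behind the gateway.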

7. Monitoring and analytics

Deep visibility is essential, but the difference lies in whether analytics are a built-in component or an external plugin. A decoupled, asynchronous approach ensures stability, while tight coupling risks performance impact. We analyze how each platform extracts critical data and what that means for your monitoring stack's reliability.

Tyk Pump

Tyk’s approach to analytics is unique. It separates the analytics engine from the gateway using a component called "Tyk Pump." The gateway writes metadata to Redis, and the "Pump" asynchronously moves that data to any backend you want: MongoDB, ElasticSearch, Prometheus, InfluxDB, or CSV.
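The decoupling described above follows a simple producer and consumer pattern. In the Python sketch below, an in-memory `deque` stands in for the Redis buffer and `ListSink` for a real backend; these names, and the batch size, are assumptions for illustration rather than Tyk Pump's actual code.

```python
from collections import deque
from typing import Deque, Dict, List

analytics_buffer: Deque[Dict] = deque()  # stands in for the Redis buffer


def handle_request(path: str, status: int) -> int:
    """Gateway hot path: record metadata cheaply, then return immediately."""
    analytics_buffer.append({"path": path, "status": status})
    return status


class ListSink:
    """Stand-in for a backend such as MongoDB or Prometheus."""
    def __init__(self) -> None:
        self.records: List[Dict] = []

    def write(self, batch: List[Dict]) -> None:
        self.records.extend(batch)


def pump_once(sinks: List[ListSink], batch_size: int = 100) -> int:
    """Drain up to batch_size records and fan them out to every sink."""
    batch = []
    while analytics_buffer and len(batch) < batch_size:
        batch.append(analytics_buffer.popleft())
    for sink in sinks:
        sink.write(batch)
    return len(batch)


for status in (200, 200, 500):
    handle_request("/orders", status)

primary, secondary = ListSink(), ListSink()
moved = pump_once([primary, secondary])
print(moved, len(analytics_buffer))
```

Because the request path only appends to the buffer, a slow or unavailable analytics backend delays the pump rather than the API traffic.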

Kong Vitals & Plugins

Open-source Kong relies on plugins for monitoring. You enable the prometheus plugin or the datadog plugin to export metrics. Although effective, it puts the burden on the user to configure the sampling rates correctly so performance isn't impacted. "Kong Vitals," their deep-dive visual analytics tool, is reserved for the Enterprise tier.

8. Pricing and open source licenses

Understanding what is truly free versus what is gated behind an enterprise license is vital for long-term budget planning. Both platforms have open-source roots, yet their monetization strategies differ significantly regarding management planes and analytics. This section breaks down licensing models to help you avoid unexpected costs as you scale.


Tyk:

- Open Source: Very generous. The Gateway and Pump are released under the MPL (Mozilla Public License). The Dashboard is licensed separately rather than under the MPL, so users should check the specific license terms before relying on it in production.

- Enterprise: Adds Multi-Data Center Bridge (MDCB), Developer Portal, and commercial support.

Kong:

- Open Source: Apache 2.0. Very permissive. You can run the gateway core anywhere. However, the GUI, advanced security plugins (like OIDC), and Vitals are stripped out.

- Enterprise: Moving to "Kong Konnect," a SaaS-based control plane. This separates the management layer (SaaS) from the data plane (your infrastructure). This is convenient but introduces data sovereignty questions for some banks and government entities.

Which gateway should you choose?

Choose Tyk If:

- You value "Time to Value": You want a gateway that includes OIDC, rate limiting, and a GUI out of the box without hunting for plugins.

- You are a Go Shop: Your team understands Golang and wants to extend the gateway using a language they already know.

- You need GraphQL Stitching: You have a mess of REST and SOAP services that you want to expose as a clean GraphQL API without building a separate middleware layer.

- You prefer a consolidated architecture: You want a single binary rather than an NGINX wrapper with Lua scripts.

Choose Kong If:

- Raw Throughput is King: You are processing hundreds of thousands of requests per second for simple routing tasks.

- You have NGINX Expertise: Your Ops team is already comfortable managing, tuning, and scaling NGINX instances.

- You need the largest ecosystem: You want the security of knowing that if a plugin exists, it probably exists for Kong first.

- You want a pure "Infrastructure" approach: You prefer the declarative, config-driven model over a dashboard-driven model.


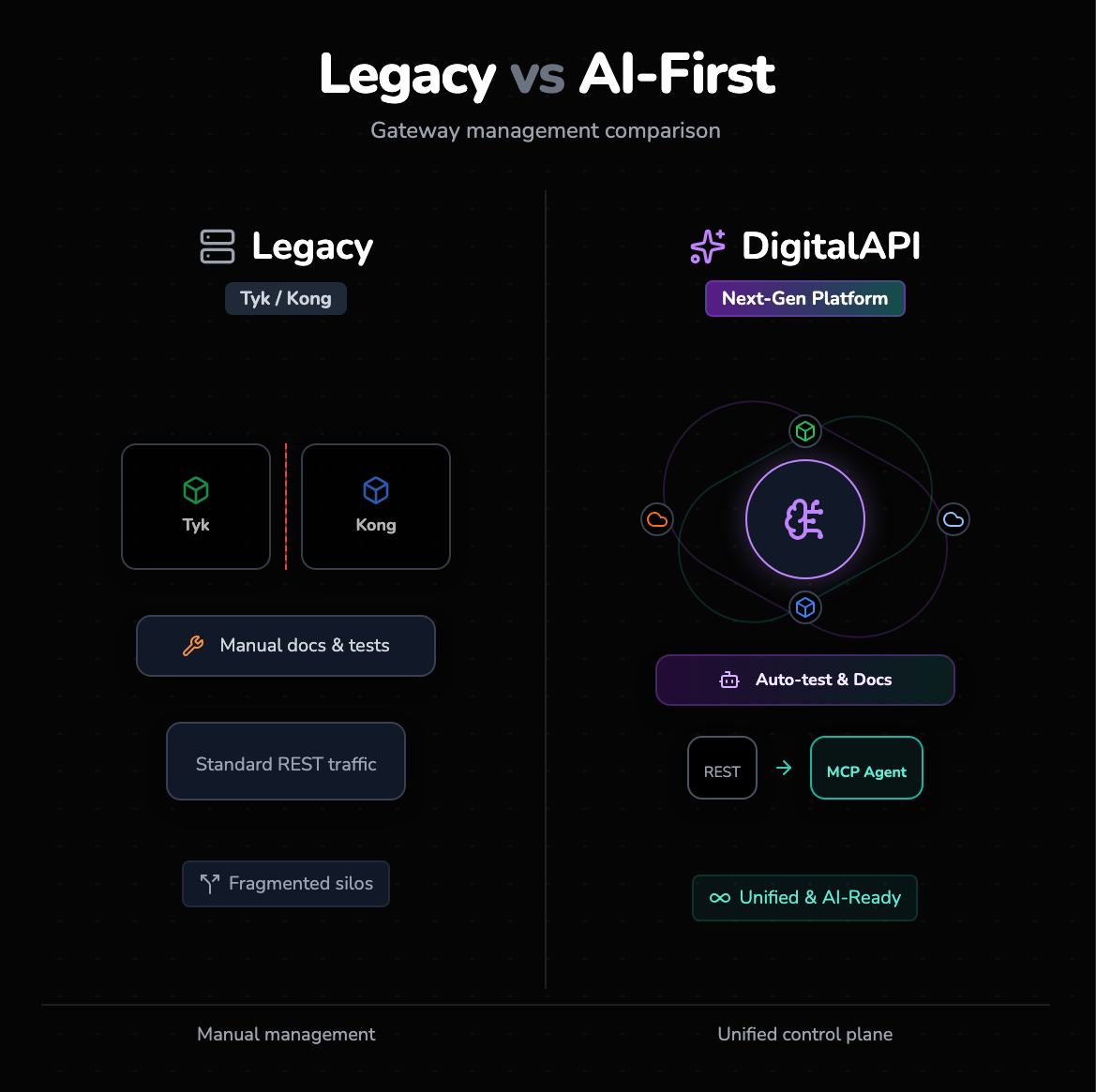

The AI-first alternative: DigitalAPI

Tyk and Kong fight for dominance as legacy API gateways, but DigitalAPI.ai represents the next generation of AI-First API Management. It doesn’t just manage traffic; it unifies your entire ecosystem and prepares it for the age of AI Agents.

1. The "Gateway Agnostic" advantage

- Unified Control Plane: Stop managing gateways in silos. DigitalAPI acts as a single pane of glass to manage, govern, and secure APIs across Kong, Apigee, AWS, and Azure simultaneously.

- No "Rip and Replace": You can keep your existing Kong or Tyk instances for data-plane traffic while using DigitalAPI for superior governance and visibility.

2. AI-First features for modern teams

- Instant MCP Readiness: Convert any REST API into a Model Context Protocol (MCP) agent in one click, making your APIs instantly consumable by LLMs and AI Agents.

- AI-Powered Automation: Say goodbye to manual grunt work. DigitalAPI uses AI to auto-generate documentation, write test cases, and detect duplicate APIs ("API Sprawl") across your organization.

- Helix Gateway: Need a new gateway? DigitalAPI’s Helix is an ultra-lightweight, AI-native gateway designed for low-latency AI workloads, positioned as a faster alternative to NGINX-based stacks.

3. Monetization & growth

- White-Label Marketplace: Launch a branded, monetization-ready API marketplace in minutes, not months.

- Universal Discovery: An AI-driven catalog that makes every API in your organization discoverable, regardless of which gateway hosts it.

Frequently asked questions

1. How does vendor lock-in apply to Tyk vs Kong?

Both platforms are open-source, which reduces lock-in compared to proprietary SaaS like Apigee. However, "Logic Lock-in" is real. If you write complex custom logic in Kong’s Lua plugins, migrating away becomes difficult because you have to rewrite that logic. Tyk’s native features reduce this risk slightly, but migrating distinct architectural concepts (like Tyk’s specific Policy objects) to another gateway still requires significant refactoring.

2. Which platform is better for large enterprises?

Both serve Fortune 500 companies. Kong is often favored by enterprises with massive, existing on-premise infrastructure and NGINX legacy. Tyk is often favored by enterprises undergoing "Modernization" or "Digital Transformation" initiatives that favor agility, Kubernetes-native deployments, and Developer Experience over legacy tooling.

3. Does Kong require a database?

Historically, yes (Postgres or Cassandra). However, since version 1.1 Kong has supported "DB-less mode," which loads a declarative config file and is common in Kubernetes deployments that use the Kong Ingress Controller (KIC). This removes the database requirement, but it also removes some dynamic capabilities (like creating consumers on the fly via API) unless you use a control plane like Kong Konnect to manage the config. Tyk takes a different route: the Gateway keeps its runtime state in Redis, while the management layer still generally requires MongoDB.

4. Which platform offers better native support for GraphQL?

Tyk is the clear winner for native support. Its Universal Data Graph allows you to create GraphQL endpoints from existing data sources without writing code. Kong supports GraphQL proxying and some validation but generally relies on you having a separate GraphQL server to do the heavy lifting.

5. How do the deployment models compare?

Kong is often deployed as an Ingress Controller in Kubernetes or as a standalone gateway on VMs. Tyk is similar but also offers a "Hybrid Cloud" model (Tyk MDCB), where the Management Control Plane is SaaS (or centralized), but the Gateways (Data Plane) sit in your private VPCs. Kong offers a similar hybrid model via Kong Konnect. Both are fully compatible with Docker, Kubernetes, and bare metal.

