
What is an API Gateway? Definition, Features and Use Cases

Updated on: December 21, 2025

TL;DR

An API gateway acts as the single entry point between clients and backend services. It routes requests, applies security policies, and improves performance by handling authentication, rate limiting, load balancing, caching, and protocol translation.

DigitalAPI makes managing gateways much easier by bringing platforms like AWS, Apigee, and Kong into one unified control plane. Its lightweight Helix gateway lets teams design, deploy, and operate APIs without extra complexity.

With built-in analytics, automation, and security, DigitalAPI helps enterprises scale their API ecosystems efficiently while maintaining consistency, visibility, and governance across environments.


Imagine going to a restaurant where there are no waiters to serve you. Instead of placing a single order as usual, you now have to go to the kitchen to order food, head to the bar to order drinks, and go to the cashier to pay.

Not only is this frustrating for you, but the restaurant does not like it either. It would prefer that customers stay out of the kitchen, for the sake of hygiene and efficiency. Introducing a waiter solves all of these problems instantly.

The waiter takes all of your orders, then brings your food and drinks to your table. Once you are done with your meal, they bring you the bill for payment. Suddenly, the whole process is smooth, fast, and efficient for both parties: a win-win situation.

On the web, an API gateway plays a similar role. Client applications do not make API requests directly to multiple backend services. Instead, the API gateway acts as their waiter: it handles all of their API requests and keeps the process efficient for both the client application and the backend services.

Key takeaways

If you are in a hurry, here is the summary:

- An API Gateway is the single entry point for client applications to interact with multiple backend microservices, making API communication more efficient and structured.

- It can receive a single API call from the client that needs data from several services. After security checks, it routes the requests to those services and sends back the combined data in a single response.

- It acts as a security layer by handling protocol checks, API key validation, authentication, authorization, and rate limiting. This helps prevent unauthorized access and mitigate DDoS attacks.

- API Gateways improve performance by reducing latency through request aggregation and caching, and they simplify integration through protocol and data format translation.

- It takes care of the non-functional elements of microservices like security, logging, monitoring, and analytics.

- Handling high traffic loads is easier with API gateway features like load balancing, rate limiting, API throttling, and circuit breaking. These features help keep the system stable even during traffic spikes.

- Following best practices such as deploying multiple gateways, enforcing HTTPS, integrating with Service Discovery, and planning for traffic spikes ensures long-term API reliability and scalability.

What is an API gateway?

An API gateway is an intermediary between client applications (such as a browser or a mobile app) and a company's backend microservices. It is also the single point of entry for client applications to make API calls.

When a client application sends an API call that requests several pieces of information, the API gateway receives the call and routes it to the appropriate backend microservices. It then collects the results and delivers the information back to the client application.

But that’s not all: an API gateway also acts as a security layer, balances API traffic, enforces rate limits, and more.

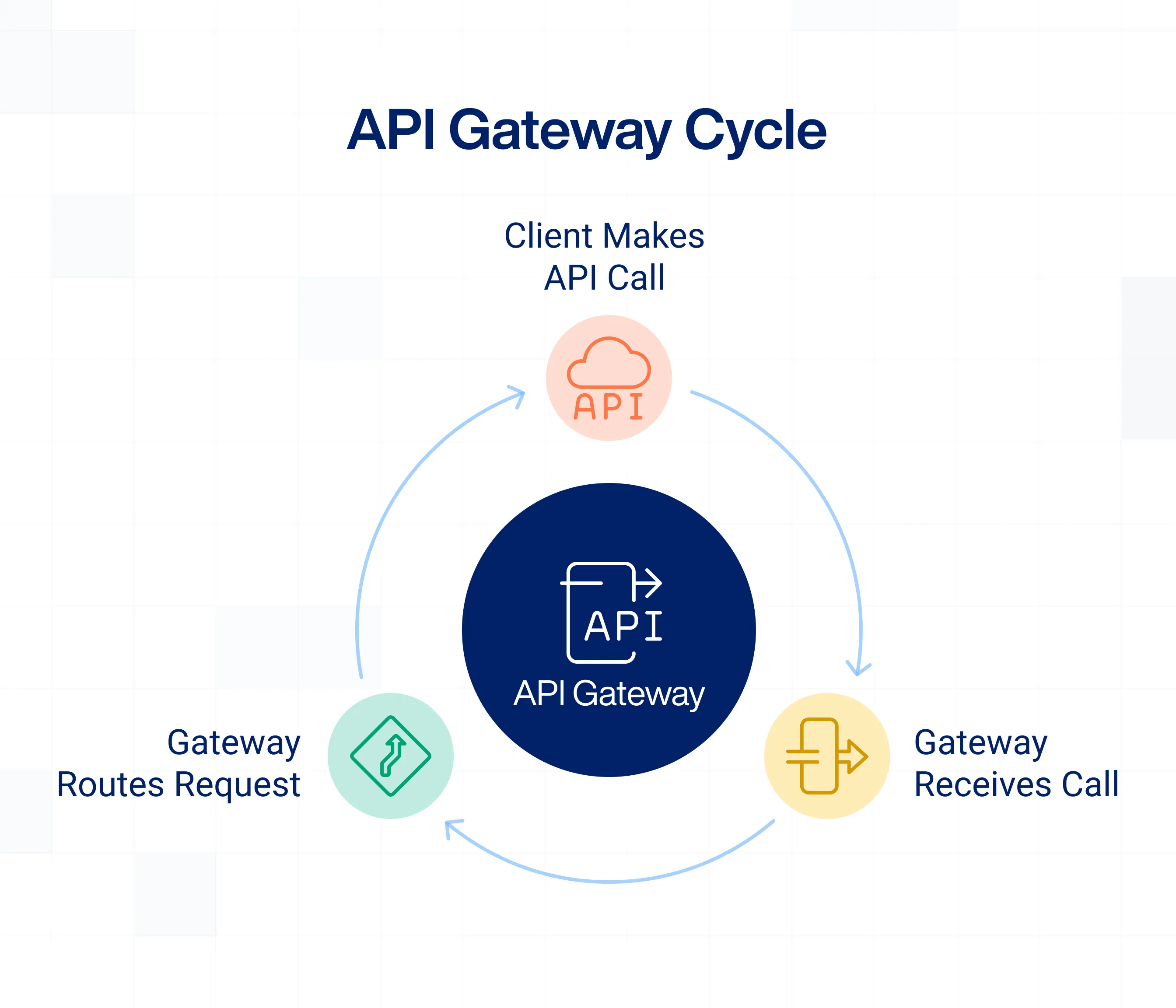

How does an API gateway work?

Saying that an API gateway receives an API call, fetches the information from the microservices, and delivers it back to the client makes the process sound simple. In practice, a lot more happens along the way. Let’s see how an API gateway works using a real-world example of an e-commerce store:

1. The client makes the API call

The client application (a browser or a mobile app) makes an API call, typically over HTTPS. The request contains these details:

- The Type of Request: Whether it is a data retrieval, addition, update, or removal. This is usually expressed through the HTTP method (GET, POST, PUT/PATCH, or DELETE).

- Authentication and Authorization Headers: These carry the API key and the authentication and authorization tokens, such as OAuth access tokens or JWTs.

- Accepted Response Formats: The client specifies the format it expects in the response, such as JSON or XML, typically through the Accept header.

Example: When you want to view a shirt on an online clothing store, your browser sends an API call to retrieve the product details, inventory status, your username, and more.

2. The API gateway receives the API call

Once the API gateway receives the API call from the client, it runs a series of checks on the call before forwarding it:

- Protocol Check: Checks whether the call was made over HTTPS. Depending on its configuration, the API gateway either rejects a plain HTTP request or redirects it to HTTPS. It rejects requests in any protocol it has not been configured to accept.

- API Key Validation: Checks if the received API key is valid. The request is rejected if the API key is invalid or missing in the request.

- Client Authentication Check: Checks the identity of the client if required, typically through OAuth tokens or JWTs. If authentication fails, the request is rejected.

- Client Authorization Check: Checks whether the client is authorized to make the request. If it is not, the request is rejected.

- Rate Limit Check: Checks whether the client is allowed to make a request at that point in time. The request is either rejected or delayed if the client has exceeded their rate limit.

Example: The shirt’s product information might not require authentication, but fetching your username does. Similarly, on the company’s admin portal, you cannot delete the product listing unless you are an admin.
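The Python sketch below makes the order of these checks concrete, using the shirt example. It is for illustration only and is not how any particular gateway is implemented. The following are all hypothetical:

- the in-memory `API_KEYS`, `TOKENS`, `USER_ROLES`, and `ROUTE_POLICY` tables, which stand in for a real key store and identity provider;
- the `X-API-Key` header name;
- the `allow` callback, which stands in for a rate limiter.

The status codes follow common HTTP conventions: 401 when credentials are missing or invalid, 403 when the request is refused, and 429 when the rate limit is exceeded.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical in-memory stores standing in for a key store and an identity provider.
API_KEYS = {"key-123": "storefront-app"}                # API key -> client application
TOKENS = {"token-alice": "alice", "token-bob": "bob"}   # bearer token -> user
USER_ROLES = {"alice": {"customer"}, "bob": {"customer", "admin"}}

# Hypothetical route policy: None means public, otherwise the role the caller needs.
ROUTE_POLICY = {
    ("GET", "/products"): None,
    ("GET", "/me"): "customer",
    ("DELETE", "/products"): "admin",
}


@dataclass
class Request:
    scheme: str                                   # "https" or "http"
    method: str                                   # e.g. "GET", "DELETE"
    path: str
    headers: dict = field(default_factory=dict)


def check_request(req: Request, allow: Callable[[str], bool]) -> tuple[int, str]:
    """Run the gateway checks in order and return (HTTP status, reason)."""
    # 1. Protocol check (this sketch rejects instead of redirecting)
    if req.scheme != "https":
        return 403, "HTTPS required"
    # 2. API key validation
    client = API_KEYS.get(req.headers.get("X-API-Key", ""))
    if client is None:
        return 401, "invalid or missing API key"
    if (req.method, req.path) not in ROUTE_POLICY:
        return 404, "unknown route"
    required_role = ROUTE_POLICY[(req.method, req.path)]
    if required_role is not None:
        # 3. Authentication: who is the user behind this request?
        auth = req.headers.get("Authorization", "")
        token = auth[len("Bearer "):] if auth.startswith("Bearer ") else None
        user = TOKENS.get(token) if token else None
        if user is None:
            return 401, "authentication failed"
        # 4. Authorization: may this user call this route?
        if required_role not in USER_ROLES.get(user, set()):
            return 403, "not authorized"
    # 5. Rate limit check, delegated to a limiter such as a token bucket
    if not allow(client):
        return 429, "rate limit exceeded"
    return 200, "forward to backend"
```

In this sketch, `GET /products` passes with just a valid API key. `GET /me` also needs a signed-in customer, and `DELETE /products` requires the admin role.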

3. The API gateway routes the request

Once all security and verification checks pass, the API gateway identifies the backend microservices that hold the requested data. This is usually done with the help of a Service Discovery component in the infrastructure.

In this stage, the API gateway also transforms the request to match the microservices’ format, if needed (example: JSON to XML).

The microservices then process the request and send the requested data back to the API gateway. The gateway combines the data and delivers it to the client as a single response, in the format the client accepts (example: XML back to JSON).

Example: Your browser receives all the data it requested and displays the product page loaded with information to you in the UI.


API gateway features

1. Client request router

One of the core API gateway features is controlling API traffic and directing it to the appropriate backend microservices. This routing is usually carried out through one of two methods:

- Path-based Routing: routes the call based on the URL path in the request (for example, /products versus /cart).

- Content-based Routing: routes the call based on the payload of the request.

The routing process also relies on a Service Discovery component. This component identifies healthy, available microservice instances for the API gateway to send requests to, which keeps requests away from instances that are down.
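As a rough illustration of path-based routing, the Python sketch below picks a backend by the longest matching URL prefix. A more specific route such as `/products/reviews` therefore wins over `/products`. The `ROUTES` table and the service names are hypothetical. Real gateways express this in their own configuration formats, and content-based routing would inspect the request body instead of the path.

```python
from typing import Optional

# Hypothetical routing table: URL path prefix -> backend service name.
ROUTES = {
    "/products": "catalog-service",
    "/products/reviews": "review-service",
    "/cart": "cart-service",
}


def route(path: str) -> Optional[str]:
    """Return the service whose prefix is the longest match for `path`, or None."""
    best_prefix = None
    for prefix in ROUTES:
        # Match whole path segments so "/cartoons" is not sent to "/cart".
        matches = path == prefix or path.startswith(prefix + "/")
        if matches and (best_prefix is None or len(prefix) > len(best_prefix)):
            best_prefix = prefix
    return ROUTES[best_prefix] if best_prefix is not None else None
```

For example, `route("/products/reviews/7")` returns `"review-service"`. Matching on whole path segments is a deliberate choice: a plain `startswith` check would send `/cartoons` to the cart service.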

2. Authentication and authorization of clients

Since the API gateway is the single point of entry for client applications, it makes much more sense to authenticate and authorize clients at this entry point.

Before routing the requests to the microservices, the API gateway validates the API key provided. It then verifies the identity of the client using a mechanism such as OAuth 2.0, JSON Web Tokens (JWT), mTLS, or SAML.

It also checks whether the client is authorized to make certain requests. For example, only Amazon Prime members are able to view exclusive deals.
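The sketch below shows what JWT-based authentication and authorization boil down to. It uses only the Python standard library and the HS256 (HMAC-SHA256) signing algorithm. It is a simplified illustration, not a production verifier: in practice you would use a maintained library such as PyJWT, often with asymmetric algorithms like RS256. The `scope` claim and the `read:prime-deals` scope name are hypothetical stand-ins for the Prime example above.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional


def _b64url_encode(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def _b64url_decode(text: str) -> bytes:
    # JWTs strip base64 padding, so restore it before decoding.
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def sign_hs256(claims: dict, secret: bytes) -> str:
    """Create an HS256-signed JWT (used here only to produce example tokens)."""
    header = _b64url_encode(json.dumps({"alg": "HS256", "typ": "JWT"}).encode())
    payload = _b64url_encode(json.dumps(claims).encode())
    signature = hmac.new(secret, f"{header}.{payload}".encode(), hashlib.sha256).digest()
    return f"{header}.{payload}.{_b64url_encode(signature)}"


def verify_hs256(token: str, secret: bytes, now: Optional[float] = None) -> dict:
    """Authentication: check the signature and expiry, then return the claims."""
    parts = token.split(".")
    if len(parts) != 3:
        raise ValueError("malformed token")
    header_b64, payload_b64, signature_b64 = parts
    if json.loads(_b64url_decode(header_b64)).get("alg") != "HS256":
        raise ValueError("unexpected algorithm")  # never let the token pick its algorithm
    expected = hmac.new(secret, f"{header_b64}.{payload_b64}".encode(), hashlib.sha256).digest()
    if not hmac.compare_digest(expected, _b64url_decode(signature_b64)):
        raise ValueError("bad signature")
    claims = json.loads(_b64url_decode(payload_b64))
    current = time.time() if now is None else now
    if claims.get("exp", 0) <= current:          # tokens without "exp" are rejected too
        raise ValueError("token expired")
    return claims


def is_authorized(claims: dict, required_scope: str) -> bool:
    """Authorization: the space-separated "scope" claim must contain the required scope."""
    return required_scope in claims.get("scope", "").split()
```

Authentication (`verify_hs256`) answers who the caller is. Authorization (`is_authorized`) answers whether that caller may perform this particular request.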

3. Rate limiting and throttling

As the primary traffic controller, an API gateway relies on rate limiting as an important damage-control feature. Rate limiting caps the number of API calls a client can make within a fixed time window (for example, per second, minute, or hour).

Rate limiting can be configured:

- Across all APIs

- For specific APIs, and/or

- For specific clients

Additionally, API gateways can queue and delay excess requests instead of rejecting them outright. This is called API throttling. Rate limiting is a hard cut-off that stops abusive clients, while API throttling is better suited to absorbing sudden spikes in API calls.
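One common way to implement both behaviors is a token bucket, sketched below in Python. Each client gets a bucket that refills at `rate` tokens per second, up to `capacity`, and each request spends one token.

- Rejecting a request when the bucket is empty is rate limiting.
- Computing how long to hold a request until a token is available is throttling.

The class and parameter names are illustrative. Timestamps are passed in explicitly to keep the example deterministic. Gateways may use other algorithms, such as fixed or sliding windows.

```python
class TokenBucket:
    """Per-client token bucket: refills `rate` tokens per second, holds at most `capacity`."""

    def __init__(self, rate: float, capacity: float, now: float = 0.0):
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity          # start full so an initial burst is allowed
        self.updated = now

    def _refill(self, now: float) -> None:
        elapsed = max(0.0, now - self.updated)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.updated = now

    def allow(self, now: float) -> bool:
        """Rate limiting: spend a token, or reject (hard cut-off) if none is left."""
        self._refill(now)
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False

    def delay_until_allowed(self, now: float) -> float:
        """Throttling: seconds a queued request should wait before it can proceed."""
        self._refill(now)
        return 0.0 if self.tokens >= 1 else (1 - self.tokens) / self.rate
```

For per-client limits, a gateway would keep one bucket per API key or client ID. When several gateway instances must agree on the count, the buckets would live in a shared store such as Redis.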

4. Protocol and data format translation

Not all client applications and backend microservices use the same protocols and data formats. This is where the API gateway acts not only as a mediator, but also as a translator.

For example, let's say the client application sends an HTTPS call with a JSON payload, but one microservice only accepts gRPC requests and another only accepts XML. The API gateway translates both the protocol and the data format so that each microservice can read the request.

Once the API gateway has all the relevant data, it combines the responses, translates them back into JSON, and returns them to the client over HTTPS.
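Translating between data formats can be as simple as mapping fields from one representation to another. The Python sketch below uses the standard library's `json` and `xml.etree.ElementTree` modules. It converts a flat JSON object to XML for an XML-only backend, then converts the backend's flat XML reply back to JSON.

The sketch is deliberately minimal. It assumes flat objects whose keys are valid XML tag names, and all values come back as strings. Translating to gRPC would also require the service's Protocol Buffers definitions and generated code, which are out of scope here.

```python
import json
import xml.etree.ElementTree as ET


def json_to_xml(json_text: str, root_tag: str = "request") -> str:
    """Translate a flat JSON object into a simple XML document for an XML-only backend."""
    data = json.loads(json_text)
    root = ET.Element(root_tag)
    for key, value in data.items():
        child = ET.SubElement(root, key)   # assumes each key is a valid XML tag name
        child.text = str(value)
    return ET.tostring(root, encoding="unicode")


def xml_to_json(xml_text: str) -> str:
    """Translate the backend's flat XML reply back into JSON for the client."""
    root = ET.fromstring(xml_text)
    return json.dumps({child.tag: child.text for child in root})
```

For example, `json_to_xml('{"sku": "SHIRT-42", "qty": 2}')` returns `'<request><sku>SHIRT-42</sku><qty>2</qty></request>'`. Note that the number 2 comes back from the XML side as the string "2", which is why real translations usually rely on a schema.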

5. Caching

Imagine an online store has a “product of the day” section on its website. Every client visiting the website will call the same “product of the day” API for the next 24 hours. Routing each of these identical requests to the backend is an unnecessary trip.

This is where caching comes in handy. Caching means temporarily storing responses within the API gateway, so that frequently requested data can be served without calling the backend. This further reduces latency and improves the client experience. How long cached data is kept before it expires can be configured to suit the company’s needs.
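A time-to-live (TTL) cache captures the idea in a few lines. In this Python sketch, each entry expires `ttl` seconds after it is stored. Until then, repeat requests are answered by the gateway without calling the backend. The sketch is illustrative rather than how any particular gateway stores its cache. The cache key, the `fetch` callback, and the injectable `clock` (handy for testing) are assumptions of the example.

```python
import time
from typing import Any, Callable


class TTLCache:
    """Gateway response cache: each entry expires `ttl` seconds after it is stored."""

    def __init__(self, ttl: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl
        self.clock = clock
        self._store: dict = {}   # cache key -> (expiry time, cached value)

    def get_or_fetch(self, key: str, fetch: Callable[[], Any]) -> Any:
        now = self.clock()
        entry = self._store.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]                        # cache hit: skip the backend
        value = fetch()                            # miss or expired: call the backend
        self._store[key] = (now + self.ttl, value)
        return value
```

With `ttl=24 * 3600`, only the first “product of the day” request in each 24-hour period reaches the backend.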

6. Logging and monitoring

Understanding how APIs are used is crucial for maintaining system health, identifying issues, and optimizing performance. The API gateway collects detailed logs of all incoming and outgoing API traffic, recording request timestamps, response times, and error rates.

Monitoring tools integrated with the API gateway can track API performance in real time, alerting administrators about slow responses, failed requests, or unusual activity. For example, an API might suddenly start receiving thousands of failed login attempts. The API gateway can flag this as a potential security threat and alert admins so that they can block the malicious IP addresses.
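A simple version of that detection is a sliding-window counter over the gateway's logs. The Python sketch below flags an IP address once it produces `threshold` failed logins within `window` seconds. The class name and the thresholds used below are hypothetical. In practice, this logic often lives in a monitoring or security tool fed by the gateway's logs, which then adds the offending IP to a deny list.

```python
from collections import defaultdict, deque


class FailedLoginMonitor:
    """Flags an IP once it has `threshold` failed logins within `window` seconds."""

    def __init__(self, threshold: int, window: float):
        self.threshold = threshold
        self.window = window
        self._failures = defaultdict(deque)   # ip -> timestamps of recent failures

    def record_failure(self, ip: str, now: float) -> bool:
        """Log one failed login; return True if this IP should trigger an alert."""
        times = self._failures[ip]
        times.append(now)
        while times and times[0] <= now - self.window:   # forget events outside the window
            times.popleft()
        return len(times) >= self.threshold
```

For example, with `threshold=3` and `window=60`, three failures from one IP within 20 seconds raise an alert. Three failures spread over more than a minute do not.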

Analytics generated from API gateway logs also help companies make data-driven decisions. For example, an e-commerce platform can analyze which API endpoints receive the most traffic, helping developers optimize those services for better efficiency.

Benefits of implementing an API gateway

1. Enhanced security for API endpoints

Because an API gateway centralizes security, it ensures that policies are enforced consistently across all APIs. This eliminates the security gaps that can arise when different microservice teams implement their own policies.

It also allows the company to oversee and update security at a single entry point instead of in every microservice, where piecemeal changes can disrupt the client experience.

Together with HTTPS enforcement, the authentication, authorization, rate limiting, and API throttling features help secure API communication and protect against DDoS attacks and malicious access.

2. Reduces latency for the client

Latency refers to the time taken for an API request to travel from the client to the backend microservice and back to the client. Without an API gateway, clients must make a separate round trip to every microservice whose data they need, and these round trips add up to higher latency.

With an API gateway in place, clients only need to make one API call requesting all the required microservice data. The API gateway fetches the data from all the microservices in parallel and sends back one combined response. This can reduce latency significantly, enhancing the user experience.
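Request aggregation can be sketched with Python's `asyncio`. The gateway starts all backend calls at once and waits for them together, so the total wait is roughly that of the slowest call rather than the sum of all calls. The three `fetch_*` functions are hypothetical stand-ins for calls to the product, inventory, and user microservices, with `asyncio.sleep` simulating network latency.

```python
import asyncio


# Hypothetical backend calls; asyncio.sleep stands in for network latency.
async def fetch_product(sku: str) -> dict:
    await asyncio.sleep(0.1)
    return {"sku": sku, "name": "Linen shirt"}


async def fetch_inventory(sku: str) -> dict:
    await asyncio.sleep(0.1)
    return {"in_stock": 12}


async def fetch_user(user_id: str) -> dict:
    await asyncio.sleep(0.1)
    return {"username": "alice"}


async def product_page(sku: str, user_id: str) -> dict:
    """Call all services concurrently and merge their results into one response."""
    product, inventory, user = await asyncio.gather(
        fetch_product(sku), fetch_inventory(sku), fetch_user(user_id)
    )
    return {"product": product, "inventory": inventory, "user": user}
```

`asyncio.run(product_page("SHIRT-42", "u1"))` returns the merged response after about 0.1 seconds. Three sequential calls would take roughly 0.3 seconds.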

On top of this, using API gateway caches further reduces latency for frequently requested microservice data.

3. Increases microservices’ efficiency

Since backend microservices do not interact directly with the clients, they can focus on their core business logic. The API gateway takes care of the non-functional elements instead. These include:

- Validating the protocol of the API request

- Authentication of API keys and client identities

- Authorization of requests

- Rate limiting and API throttling configuration

- Protocol and data format translation

- Interaction with Service Discovery

- Logging and monitoring of API requests

This makes the microservices more lightweight, efficient, and manageable. It also lets developers update them without worrying about affecting the non-functional elements.

4. Handles load and API traffic spikes

If your infrastructure cannot manage heavy API request load or a sudden spike in API traffic, your clients might face performance issues or service outages.

An API gateway helps you navigate this by:

- Load balancing: distributing incoming requests across multiple microservice instances

- Rate limiting and API throttling: rejecting or delaying excess requests

- Circuit breaking: redirecting requests away from unresponsive microservices, or stopping calls to them entirely until they recover
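Circuit breaking is easiest to understand as a small state machine. In the Python sketch below, the breaker works as follows:

- It stays closed while calls succeed.
- It opens after `max_failures` consecutive failures, so the gateway can fail fast or reroute.
- After `cooldown` seconds, it lets traffic through again to test whether the backend has recovered.

Names and thresholds are illustrative. This simplified version admits all requests once the cooldown ends, whereas production breakers typically admit only a limited number of trial requests.

```python
from typing import Optional


class CircuitBreaker:
    """Stops sending traffic to a failing backend, then retries after a cooldown."""

    def __init__(self, max_failures: int, cooldown: float):
        self.max_failures = max_failures
        self.cooldown = cooldown
        self.failures = 0                         # consecutive failures seen so far
        self.opened_at: Optional[float] = None    # None means the circuit is closed

    def allow_request(self, now: float) -> bool:
        if self.opened_at is None:
            return True    # closed: traffic flows normally
        if now - self.opened_at >= self.cooldown:
            return True    # half-open: let traffic through to test the backend
        return False       # open: fail fast or reroute without calling the backend

    def record_success(self) -> None:
        self.failures = 0
        self.opened_at = None                     # backend is healthy again: close

    def record_failure(self, now: float) -> None:
        self.failures += 1
        if self.failures >= self.max_failures:
            self.opened_at = now                  # (re)open the circuit
```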

During a major sales event like Black Friday, companies anticipate a spike in API requests and prepare their API gateways and microservices accordingly. This gives users a smoother experience and reduces lost sales.

5. Reduces the load on developers and admins

An API gateway makes API management much simpler by centralizing all the non-functional microservice elements in a single layer. This means that the microservice teams can focus on building business logic and shorten development cycles.

API gateways also take care of logging, monitoring, and analytics. This makes it easier for the admins to track API usage, detect suspicious activities, and optimize the performance.

Additionally, many API gateways can run multiple versions of an API side by side. This makes A/B testing possible: admins can route a portion of traffic to a new API version and compare it against the old one without disrupting the existing flow. Once the new version proves successful, they can roll it out fully.
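A common way to split traffic between versions is to hash a stable identifier, so that each user consistently lands on the same version. The Python sketch below sends roughly `new_version_percent` percent of users to a hypothetical `v2` and the rest to `v1`. The function name and version labels are assumptions. Real gateways usually expose this as weighted routing in their configuration.

```python
import hashlib


def pick_version(user_id: str, new_version_percent: int) -> str:
    """Route about `new_version_percent`% of users to v2 and the rest to v1."""
    # Hashing the user ID gives each user a stable bucket from 0 to 99,
    # so the same user sees the same version on every request.
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 100
    return "v2" if bucket < new_version_percent else "v1"
```

A user's bucket never changes. Raising the percentage from 10 to 50 therefore keeps everyone already on `v2` there and only moves additional users over, which makes a gradual rollout predictable.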

API gateway best practices

1. Implement multiple gateway instances

A single API gateway handling all incoming traffic creates a single point of failure. If that gateway becomes overloaded or crashes, the entire system can become unresponsive. This is especially problematic for applications with global users or high API traffic volumes.

Instead of relying on one gateway, companies should deploy multiple API gateway instances across different regions and locations. They can then use DNS-based load balancing to distribute traffic across these instances.

This makes sure that even if one API gateway instance fails, the others take over. Many organizations use Kubernetes and containerized deployments to automatically scale gateways based on demand, preventing performance issues during peak traffic times.

2. Enforce HTTPS for security

Security is non-negotiable in API gateway traffic management, and one of the simplest ways to secure API traffic is to enforce HTTPS instead of HTTP. HTTPS encrypts all communication between clients and servers, preventing attackers from intercepting sensitive data like passwords, API keys, or user details.

To enforce HTTPS properly, you should:

- Automatically redirect HTTP requests to HTTPS.

- Use a modern version of TLS (Transport Layer Security), preferably TLS 1.3, and disable outdated versions.

- Get SSL/TLS certificates from trusted providers like AWS Certificate Manager.

- Enable HSTS (HTTP Strict Transport Security) so that browsers only ever connect over HTTPS.
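The redirect and HSTS rules above can be sketched in Python as follows. The `handle` function is a hypothetical, framework-free stand-in for a gateway's request handler. The one-year `max-age` is an illustrative value. The TLS context uses the standard library's `ssl` module to refuse connections older than TLS 1.3.

```python
import ssl

# Illustrative HSTS policy: remember "HTTPS only" for one year, including subdomains.
HSTS_VALUE = "max-age=31536000; includeSubDomains"


def handle(scheme: str, host: str, path: str) -> tuple[int, dict]:
    """Redirect plain HTTP to HTTPS; add the HSTS header to HTTPS responses."""
    if scheme == "http":
        # 308 keeps the method and body; with 301, clients may turn a POST into a GET.
        return 308, {"Location": f"https://{host}{path}"}
    # Browsers ignore HSTS sent over plain HTTP, so it is only set on HTTPS responses.
    # A real gateway would forward the request here; 200 is a placeholder status.
    return 200, {"Strict-Transport-Security": HSTS_VALUE}


def make_tls_context() -> ssl.SSLContext:
    """Server-side TLS settings that refuse anything older than TLS 1.3."""
    ctx = ssl.create_default_context(ssl.Purpose.CLIENT_AUTH)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_3
    # Load your certificate before serving, e.g. ctx.load_cert_chain("cert.pem", "key.pem")
    return ctx
```

If you still need to serve older clients, `ssl.TLSVersion.TLSv1_2` is a common minimum instead.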

3. Integrate with a service discovery component

Backend microservices are constantly changing. New instances might be added, some might be shut down, and others might get moved. If an API Gateway relies on fixed service addresses, it might start sending requests to services that no longer exist, leading to errors and downtime.

Service discovery mechanisms, such as the built-in service discovery in Kubernetes, keep the API Gateway updated with real-time service locations. This ensures that requests reach a running instance of the correct microservice.
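The core idea behind many service discovery systems is a registry of live instances. The minimal Python sketch below keeps an instance routable only while it keeps sending heartbeats within `ttl` seconds. The `ServiceRegistry` class and its methods are hypothetical. Kubernetes achieves a similar effect differently: it tracks which Pods pass their readiness checks and exposes them behind a Service.

```python
class ServiceRegistry:
    """Keeps an instance routable only while it sends heartbeats at least every `ttl` seconds."""

    def __init__(self, ttl: float):
        self.ttl = ttl
        self._last_seen: dict = {}   # (service, address) -> time of last heartbeat

    def heartbeat(self, service: str, address: str, now: float) -> None:
        """Called by each instance when it starts and periodically while it runs."""
        self._last_seen[(service, address)] = now

    def deregister(self, service: str, address: str) -> None:
        """Called when an instance shuts down cleanly."""
        self._last_seen.pop((service, address), None)

    def lookup(self, service: str, now: float) -> list:
        """What the gateway asks before routing: live addresses for `service`."""
        return sorted(
            address
            for (name, address), seen in self._last_seen.items()
            if name == service and now - seen < self.ttl
        )
```

An instance that crashes without deregistering simply stops sending heartbeats and drops out of `lookup` once its TTL lapses. This is what keeps the gateway from routing to services that no longer exist.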

4. Plan for traffic spikes before they happen

API traffic can be unpredictable, and failing to plan for sudden surges can cause slow responses or even system crashes. The best way to avoid this is to analyze past traffic patterns and predict when you’ll need to scale your API Gateway.

For example, a ticketing website will experience massive spikes when a highly anticipated concert starts selling tickets.

A great way to manage this is auto-scaling, where new API Gateway instances are automatically added when traffic increases and removed when it slows down. This helps maintain fast response times without wasting resources.
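A widely used scaling rule is proportional. The Kubernetes Horizontal Pod Autoscaler, for example, documents its core calculation as `desiredReplicas = ceil[currentReplicas * (currentMetricValue / desiredMetricValue)]`. The Python sketch below applies that rule to gateway instances. It adds a hypothetical floor and ceiling, so a quiet period never drops below two instances and a spike never scales without bound. It omits refinements such as the tolerance band and stabilization windows that real autoscalers add.

```python
import math


def desired_instances(current: int, current_load: float, target_load: float,
                      min_instances: int = 2, max_instances: int = 20) -> int:
    """Proportional scaling rule (as in the Kubernetes HPA), clamped to a floor and ceiling."""
    wanted = math.ceil(current * current_load / target_load)
    return max(min_instances, min(max_instances, wanted))
```

For example, four instances running at 90% CPU against a 60% target give `desired_instances(4, current_load=90, target_load=60)`, which returns 6 (ceil(4 × 90 / 60)).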

You can also set API rate limits and throttling to control how many requests a single user can make in a given time frame.

Manage your API gateways effectively with DigitalAPI

Handling multiple API Gateways across different platforms can quickly become a challenge. Without a structured system, managing security, tracking performance, and maintaining consistency across APIs can feel overwhelming.

Enter DigitalAPI: it offers a centralized solution for API management.

Why Choose DigitalAPI For API Gateway Management?

- Works across multiple API Gateways – Seamlessly integrates with AWS API Gateway, Apigee, Kong, Azure API Management, SAP, and SAP iFlows, eliminating the need for separate tools.

- Ensures security and governance – Enforce consistent security policies, role-based access control, and compliance across all APIs.

- Provides real-time analytics – Gain deep visibility into API traffic, usage trends, and performance issues.

- All-in-one Hub – Developers can discover, test, and document APIs in one place.

- AI-powered automation – Reduces manual effort by automating API documentation.

- Round the clock support – Get complete 24/7 self-service support.

It’s time to step up your API management game! Contact us now!

FAQs about API gateway

1. What is an API gateway?

An API gateway is a single entry point between client applications and backend services. It routes requests, enforces security, handles load-balancing, caching and protocol/data-format translation so backend services can focus on business logic.

2. Why use an API gateway?

Using an API gateway provides centralized security, consistent policy enforcement, reduced latency through request aggregation and caching, improved backend microservice efficiency, and better handling of traffic spikes.

3. How many API gateways do I need?

You may start with a single gateway, but you’ll need multiple instances when you span regions, separate internal vs external traffic, enforce isolation/fault-tolerance, or handle very high traffic volumes. Deploying multiple gateways improves resilience and scalability.

4. How to ensure an API gateway can scale with enterprise growth?

Ensure your gateway architecture is stateless, supports autoscaling and multi-region deployment, integrates with service-discovery, uses caching and rate-limiting, and plans for traffic-spike scenarios. Also use monitoring/logging and governance to maintain consistent performance and reliability.

5. What is the primary role of an API gateway?

The primary role of an API gateway is to act as a single entry point for client requests, routing them to backend services while handling key tasks like authentication, rate limiting, caching, traffic management, and monitoring. It simplifies API delivery, improves security, and enhances performance.
