
Best API Gateway Tools for Microservices in 2026: A Practical Comparison

Updated on: January 9, 2026

TL;DR

Microservices help teams move fast initially, but as services grow, direct integrations create complexity. Teams repeatedly rebuild authentication, routing, and rate limits, leading to drifting security, fragmented observability, and unclear latency issues.

An API gateway restores control by centralizing traffic management, security, and visibility. Kong and NGINX suit high-throughput internal services, Apigee fits external APIs and monetization, and Gravitee supports event-driven APIs.

Most organizations run multiple gateways. DigitalAPI.ai unifies discovery, governance, analytics, and AI readiness across them without forcing a rip-and-replace.


Microservices are great for building momentum early. But as they multiply, every direct integration becomes another place to reinvent auth, routing, timeouts, and rate limits, turning every client into its own special case. Soon, security diverges, observability splinters, and latency gets harder to explain. The platform still runs, but change becomes costly, and reliability becomes tribal knowledge.

An API gateway is how you regain control. It provides a single entry layer where traffic is authenticated, routed, observed, and shaped consistently across services, preserving both performance and security. So the real question is not just about finding the best API gateway. It’s about finding one that fits your throughput, lifecycle, and developer experience constraints.
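As a rough illustration of the single entry layer described above, the following sketch authenticates, routes, and records every request in one place. The `Gateway` and `Request` classes, the API-key check, and the prefix-based routing are all hypothetical simplifications, not the behavior of any product covered in this guide, and traffic shaping is left out to keep the example short.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Request:
    path: str
    api_key: Optional[str] = None


@dataclass
class Gateway:
    """Hypothetical entry layer: authenticate, route, then record the outcome."""
    api_keys: set                 # keys the gateway accepts (assumed auth scheme)
    routes: dict                  # path prefix -> callable standing in for an upstream
    log: list = field(default_factory=list)

    def handle(self, req: Request) -> tuple:
        # 1. Authenticate once, at the edge, instead of in every service.
        if req.api_key not in self.api_keys:
            return self._record(req, 401, "unauthorized")
        # 2. Route by the longest matching path prefix.
        matches = [prefix for prefix in self.routes if req.path.startswith(prefix)]
        if not matches:
            return self._record(req, 404, "no route")
        upstream = self.routes[max(matches, key=len)]
        # 3. Forward to the upstream and record the result.
        return self._record(req, 200, upstream(req))

    def _record(self, req: Request, status: int, body: str) -> tuple:
        # Every request, accepted or rejected, lands in the same log.
        self.log.append((req.path, status))
        return status, body
```

Because every request passes through `handle`, rejected and accepted calls end up in the same log, which is the consistency argument in miniature.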

This guide breaks down the main gateway categories, the best tools in each, and the tradeoffs that keep today’s choice from becoming next quarter’s integration mess.

Top API gateway tools for microservices

The hardest part of picking an API gateway is cutting through overlapping claims and figuring out what will hold up once the gateway becomes part of your platform. This list focuses on the tools that show up most often in real microservices stacks. It compares them on decision parameters that map to day-to-day outcomes: how much latency the gateway adds under load, how cleanly it lets you express and enforce security and traffic policies, and how heavy the operating model becomes once you run it across environments and teams.

1) DigitalAPI.ai

When APIs are distributed across teams and gateways, the real cost becomes coordination. Without a shared catalog and consistent governance, teams waste cycles figuring out what exists, who owns it, and how it should be consumed safely. DigitalAPI targets this operations tax by turning fragmented API delivery into a fully managed system.

Instead of each service team rebuilding the same edge controls, such as authentication, rate limits, routing, and rollout policies, DigitalAPI lets you define and enforce standards once across the API estate.

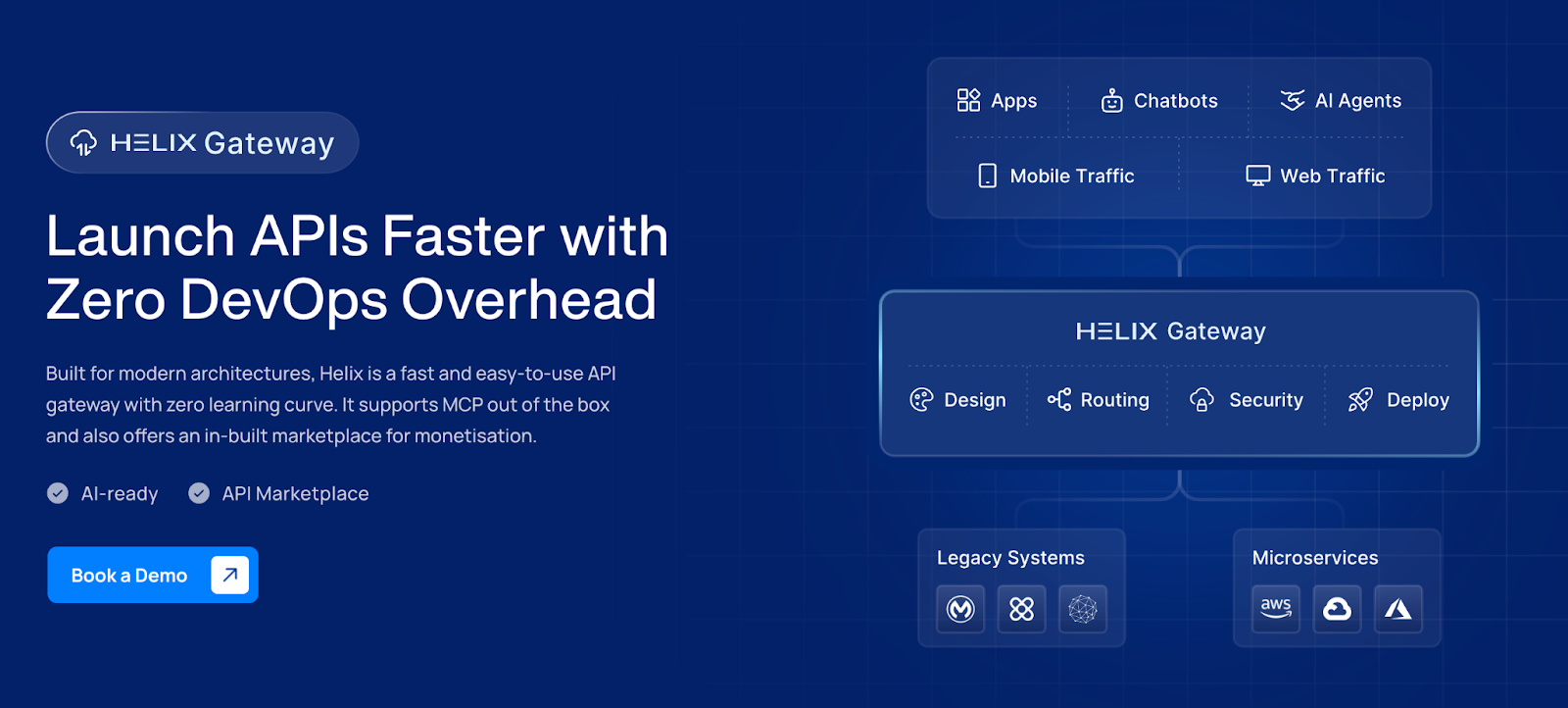

Underpinning the DigitalAPI platform is Helix, a managed gateway designed to deploy quickly and run with minimal operational overhead. It is paired with a platform layer that gives teams one place to define, publish, discover, and govern APIs consistently, even when the underlying gateways differ.

It also supports AI-facing patterns, including Model Context Protocol style exposure, which becomes important when APIs are consumed by agents and automation, not just human-built apps.

Key features

- Traffic management: Rate limiting and quotas, request routing rules, metering and usage tracking, and optional caching and connection reuse for performance tuning.

- Security and authentication: API keys, JWT validation, OAuth2 flows, CORS controls, and mutual TLS options for stricter client and upstream security posture.

- Deployment model: SaaS-first control experience with a cloud-native gateway, plus the ability to manage an API estate that spans other environments and gateways, rather than treating each gateway as its own island.

- Governance and visibility: Centralized API catalog, search and discovery, policy consistency across APIs, and governance views that help spot duplication and drift as teams scale.

- Observability: Built-in analytics views for traffic, latency signals, and usage patterns, with an emphasis on being able to answer basic operational questions without stitching dashboards on day one.

- AI and MCP support: DigitalAPI makes traditional APIs usable by AI agents through MCP, an open standard that connects AI apps to external tools and systems. It can convert an existing API into an MCP-compliant, agent-consumable interface in one click, with secure and contextual access, without schema rewrites or manual mapping.

- Service hub: Helix plugs into the DigitalAPI Service Hub, so teams get one place with built-in analytics for API discovery, adoption, security, and governance. This removes the dependency on third-party services for those functions.

- Pricing model: Consumption-oriented pricing is positioned around API usage rather than per-gateway licensing, which can simplify budgeting when the gateway footprint grows.
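The rate limiting listed under traffic management above is commonly built on a token bucket. The sketch below is a generic version of that technique, not DigitalAPI's implementation. The `TokenBucket` class, its parameters, and the injectable `clock` are assumptions made for illustration.

```python
import time


class TokenBucket:
    """Generic token bucket: allows bursts up to `capacity`, refills at `rate_per_sec`."""

    def __init__(self, rate_per_sec: float, capacity: int, clock=time.monotonic):
        self.rate = rate_per_sec
        self.capacity = capacity
        self.tokens = float(capacity)   # start with a full bucket
        self.clock = clock              # injectable so the behavior can be tested
        self.last = clock()

    def allow(self, cost: float = 1.0) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False                    # caller would typically answer HTTP 429
```

A gateway would usually keep one bucket per client key or per route, and in a multi-node deployment the counters need shared state, which this single-process sketch does not cover.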

Best for

DigitalAPI is great for teams that want to ship and govern APIs fast without turning the gateway into another platform to babysit. Also, it’s a strong fit when you need one control layer across multiple gateways and environments, especially as AI and automation become real API consumers.

2) Kong

Kong is a modular API gateway that sits in front of your microservices and centralizes the cross-cutting controls every API ends up needing. This keeps individual service teams from re-implementing the same edge logic in slightly different ways, and it gives platform teams a consistent place to enforce policy.

Kong is commonly deployed with Kubernetes and works well in declarative, GitOps-style setups, where gateway configuration is treated like infrastructure and promoted through environments with the same review and rollout discipline as the rest of your stack. Its plugin architecture adds flexibility, letting teams attach common capabilities, like rate limiting, request and response transformations, and logging, without changing backend services.

However, the flexibility often comes with operational and skills overhead. Depending on how you deploy it, Kong may rely on additional components for configuration and clustering, and certain distributed features can introduce extra moving parts to run, scale, and observe. Thus, it can feel overwhelming if your priority is a simple, low-touch setup.

Key features

- Plugin-based policy enforcement: Add authentication, rate limiting, transformations, and logging by attaching plugins at global, service, route, or consumer scope.

- Kubernetes-native configuration: Configure routing and policies through the Kong Ingress Controller using Kubernetes resources, including Gateway API objects.

- Hybrid control-plane option: Separate management from traffic handling so configuration can be centralized while data planes run close to workloads.

- Flexible configuration and state: Support declarative config for GitOps flows, and database-backed operation when you need dynamic updates and shared state.
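To show what attaching plugins at global, service, route, or consumer scope means in practice, here is a simplified most-specific-wins lookup. It is an illustration only: the `SCOPE_ORDER` tuple, the `effective_plugin` function, and the dictionary shapes are hypothetical, and Kong's documented precedence rules also cover combinations of scopes that this sketch ignores.

```python
# Assumed ordering, most specific first. Real gateways may rank combined scopes too.
SCOPE_ORDER = ("consumer", "route", "service", "global")


def effective_plugin(plugin_name, configs, request_ctx):
    """Return the settings of the most specific matching plugin config, or None.

    configs:     list of dicts with keys name, scope, target, settings
    request_ctx: dict such as {"consumer": "acme", "route": "checkout", "service": "orders"}
    """
    for scope in SCOPE_ORDER:
        for cfg in configs:
            if cfg["name"] != plugin_name or cfg["scope"] != scope:
                continue
            if scope == "global":
                return cfg["settings"]          # global configs apply to every request
            target = cfg["target"]
            if target is not None and target == request_ctx.get(scope):
                return cfg["settings"]
    return None
```

With a global limit of 100 requests per minute and a route-level limit of 20 on a hypothetical `checkout` route, requests to `checkout` get the stricter setting while everything else falls back to the global one.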

3) Apigee

Apigee is Google Cloud’s API management platform, and teams usually reach for it when they are running an external API program with formal partner onboarding and commercial controls. It fits teams that need consistent policy enforcement, visibility into API usage, and structured ways to package APIs for internal teams or external partners.

In evaluation terms, Apigee tends to matter when lifecycle controls, analytics, and partner onboarding are higher priority than running the lightest possible data plane.

The tradeoff is that Apigee can be heavier than proxy-style gateways, both in cost and in operational model, and its deeper capabilities are only valuable when you actually run an API product motion, not when you simply need fast routing in front of Kubernetes services.

Key features

- API products and rate plans: Bundle multiple endpoints into API products and attach quotas and plans for controlled partner access and monetization workflows.

- Policy flows with reusable building blocks: Compose behavior using built-in policies and shared flows, with conditional execution for routing, security, and mediation logic.

- Hybrid runtime option: Keep management centralized while deploying the data plane in your Kubernetes environment for locality and control.

- Developer portal onboarding: Support self-service app registration, key provisioning, and API documentation through a portal designed for partner and developer programs.
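The API product idea above, bundling endpoints and attaching a quota, can be sketched in a few lines. This is a conceptual model only and does not use Apigee's policy language or APIs. `ApiProduct`, `App`, `authorize`, and the example paths are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class ApiProduct:
    name: str
    paths: tuple          # path prefixes bundled into this product
    monthly_quota: int    # calls allowed per app per month (assumed billing period)


@dataclass
class App:
    key: str              # credential issued to the partner at onboarding
    product: ApiProduct
    used: int = 0         # calls counted in the current period


def authorize(app: App, path: str) -> str:
    # An app may only call endpoints that belong to the product it subscribed to.
    if not any(path.startswith(prefix) for prefix in app.product.paths):
        return "forbidden"
    # The quota is enforced before the call is counted.
    if app.used >= app.product.monthly_quota:
        return "quota_exceeded"
    app.used += 1
    return "ok"
```

The per-app `used` counter is also what usage-based invoicing would read from, which is why quota enforcement and monetization tend to live in the same layer.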

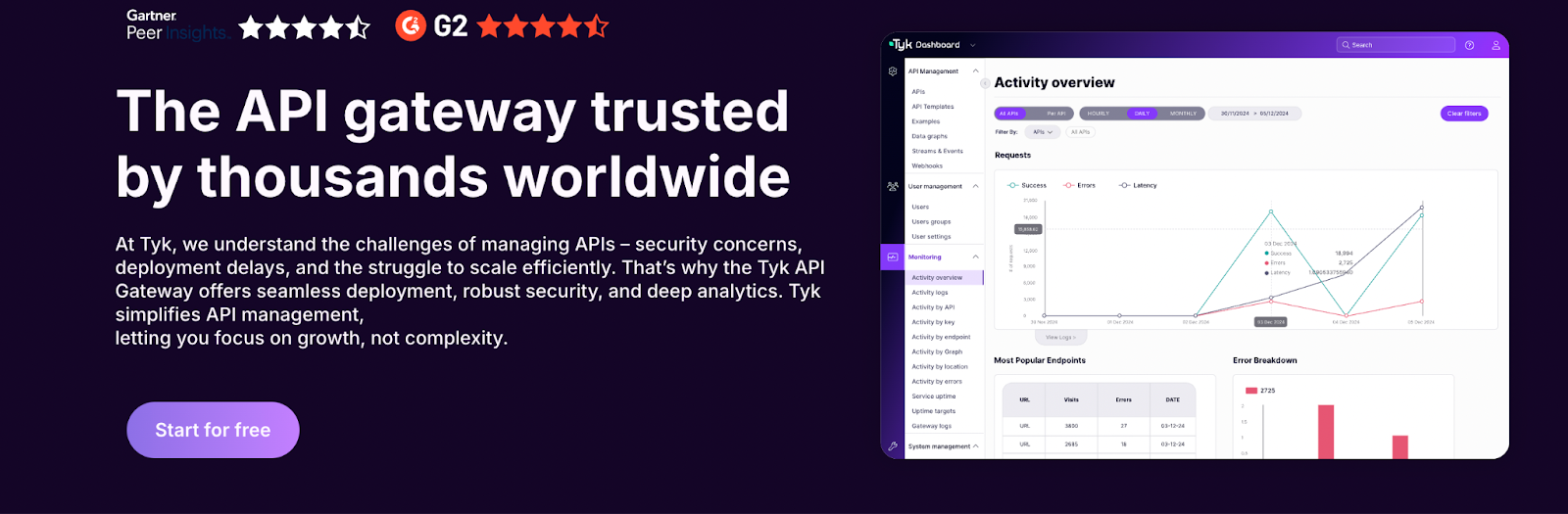

4) Tyk

Tyk is often the middle path choice when teams want a real API gateway plus enough API management capabilities to run an API program, without signing up for a heavyweight enterprise suite.

Instead of stitching together a proxy, a separate developer portal, analytics, and policy tooling, Tyk packages the gateway with common management building blocks so onboarding and governance don’t become a side project. That matters in microservices environments because the hard part is rarely routing traffic; it’s operating the program with consistent auth and policy, key issuance, developer onboarding, and visibility into usage.

From an evaluation standpoint, Tyk’s strength is deployment flexibility. You can run it as open source or self-managed, use cloud options, and adopt hybrid patterns where the control plane and the gateways don’t have to live in the same place. It also supports

Key features

- Developer portal and self-serve access: App registration, key provisioning, and docs workflows without adding a separate portal product.

- Hybrid and Kubernetes deployment paths: Operator and Helm-based patterns, plus hybrid control and data plane setups.

- Polyglot plugin model: gRPC-based extensibility options that let teams implement custom logic in common languages.

- Policy and traffic controls: Rate limiting, quotas, caching, and auth patterns like JWT and OAuth2/OIDC support.

However, the tradeoff for the platform is straightforward. If you self-manage it, you still own upgrades, dependencies, and monitoring across components. And if your needs are unusual, expect some engineering work to match edge cases cleanly.

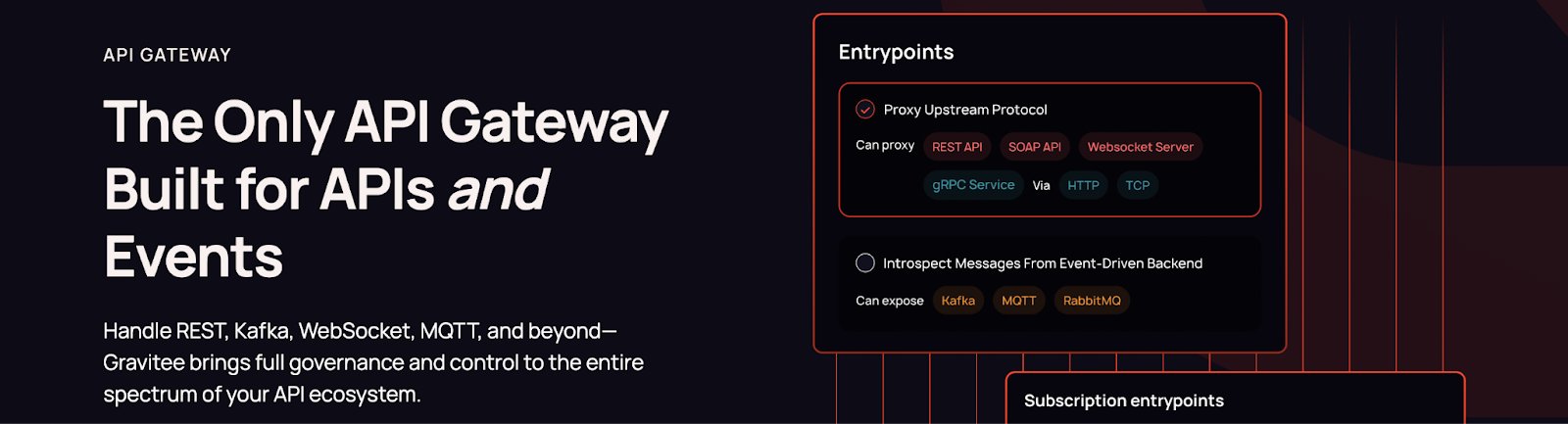

5) Gravitee

Gravitee is a good fit when your API estate includes more than HTTP microservices. It is often evaluated by teams that need to expose and govern event-driven surfaces, like Kafka-backed streams, alongside traditional REST APIs, without treating streaming as an afterthought.

In that world, the core problem is not just routing. It is also applying consistent access controls, subscriptions, and observability across different interaction styles, including request-response, WebSockets, and server-sent events.

From an evaluation standpoint, Gravitee leans toward a policy-driven operating model, where many behaviors are composed through configuration rather than custom code.

However, the tradeoff is that some advanced async and broker capabilities may depend on enterprise licensing, and deeper monetization typically requires integrating with external billing systems. Gravitee is most valuable when you truly need mixed-protocol governance, not when you only want a fast ingress proxy in front of Kubernetes services.

Key features

- Async and event exposure: Manage and publish event streams, and expose them through web-friendly interfaces with guardrails.

- Plans and subscriptions: Support portal-driven access requests and tiered plans, with usage tracking for program operations.

- Policy-based controls: Apply authentication, rate limits, transformations, and validations through composable policies, with custom options when needed.

- Portal and multi-environment ops: Provide a developer portal and management patterns that help teams operate across environments and clusters.

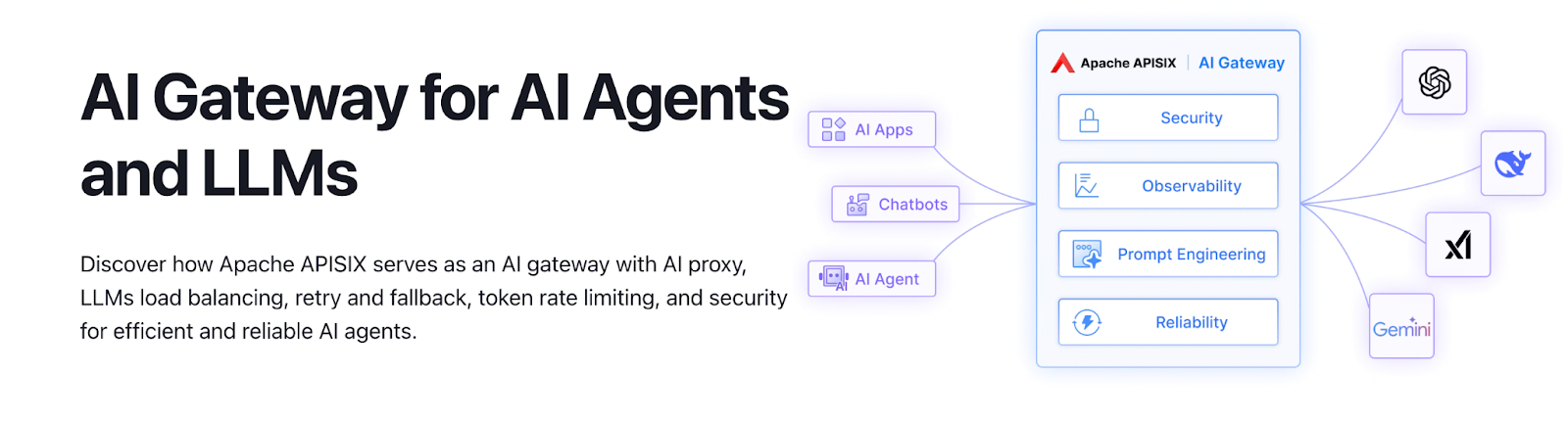

6) NGINX / Apache APISIX

NGINX and Apache APISIX show up in microservices stacks when the main requirement is a fast, reliable traffic layer, not a full API program platform. They’re often used as high-performance gateways at the edge, where you care about low latency routing, predictable resource use, and tight control over how requests enter Kubernetes or upstream services.

In evaluation terms, you get speed and a clean data-plane focus, but you take on more build-the-rest work if you need higher-level API management, like catalogs, developer onboarding, products, or monetization.

NGINX tends to be the straightforward choice when you want a proven reverse proxy foundation and are comfortable operating it as infrastructure. APISIX is closer to an API-gateway product in the open-source world, with dynamic configuration and a rich plugin system geared toward cloud-native environments. Neither is trying to be an all-in-one control layer across multiple gateways and teams, which is where DigitalAPI’s model becomes relevant.

Key features

- High-performance routing: Efficient HTTP traffic handling with flexible routing rules and upstream load balancing.

- Dynamic, cloud-native control: APISIX supports hot-reload style config updates and Kubernetes-friendly management patterns.

- Plugin-driven policies: APISIX offers a broad plugin system for auth, rate limiting, and transformations, with multiple extensibility options.

- DIY API management: Portals, catalogs, and program governance typically require additional products or a custom build-out.
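Upstream load balancing, mentioned in the first feature above, is often plain round-robin with unhealthy targets skipped. The sketch below shows that technique in isolation. `RoundRobinUpstream` and its methods are hypothetical and do not mirror NGINX or APISIX configuration.

```python
import itertools


class RoundRobinUpstream:
    """Round-robin selection over upstream targets, skipping ones marked down."""

    def __init__(self, targets):
        self.targets = list(targets)
        self.healthy = set(self.targets)
        self._cycle = itertools.cycle(self.targets)

    def mark_down(self, target):
        self.healthy.discard(target)

    def mark_up(self, target):
        if target in self.targets:
            self.healthy.add(target)

    def pick(self):
        # Look at each target at most once per call so an all-down pool fails fast.
        for _ in range(len(self.targets)):
            candidate = next(self._cycle)
            if candidate in self.healthy:
                return candidate
        raise RuntimeError("no healthy upstream targets")
```

In a real proxy, health state would come from active or passive health checks rather than manual `mark_down` calls.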

7) AWS API Gateway

AWS API Gateway is a fully managed front door for APIs inside AWS, and teams usually pick it when they don’t want to run gateway infrastructure. It’s a practical fit for serverless and AWS-native backends, where the fastest path is often to just connect the API layer to Lambda and ship with AWS handling scaling and availability.

The most important choice is the API type. REST APIs are the feature-heavy option, with capabilities like usage plans, request and response mapping, validation, and caching. HTTP APIs are intentionally slimmer and cheaper for straightforward proxying, with fewer management features. WebSocket APIs exist for real-time, bidirectional messaging. Each type comes with its own tradeoffs.

Because the service is AWS-only, multi-cloud and on-prem patterns are outside the model, and governance and onboarding usually need to be built around it rather than arriving as a complete program layer.

Key features

- REST, HTTP, and WebSocket APIs: Different feature sets and cost profiles depending on whether you need rich controls, simple proxying, or real-time connections.

- Usage plans and throttling: Quotas and per-client throttles using API keys in the REST API model, with simpler controls in HTTP APIs.

- AWS-native security and observability: IAM-based access patterns and standard AWS monitoring and tracing integrations for operational visibility.

- Managed operations with fixed limits: No servers to manage, but service quotas and feature boundaries that you need to design around early.

Which platform do you really need?

Picking an API gateway comes down to identifying the job you need the gateway to do. Some gateways are tuned to be fast, lean entry points for internal microservices traffic. Others are built to run an external API program where onboarding, quotas, analytics, and commercial controls are part of the workload. Some are better suited for event-driven exposure.

So the fundamental questions you need to answer are:

- Are you optimizing for latency and throughput?

- Are you optimizing for governance and productization?

- Is streaming behavior a priority?

- Are you protecting developer velocity?

Once you anchor on that, the right category becomes clear, and the shortlist gets much shorter. So let’s see which tools fit what priorities.

Scenario A: High-speed microservices

When you have 500+ microservices in Kubernetes, gateway choice starts to hinge on latency because the gateway becomes part of almost every request path. Even small overhead at the entry layer gets multiplied across huge request volumes, and the difference shows up as user-facing slowness, higher compute spend, or both.

The real differentiator then becomes how much processing the gateway adds per call, and how consistently you can operate it while dozens of teams ship changes. In evaluation, you want a data plane that stays fast under load and an operating model that avoids configuration drift across clusters. You need just enough controls for safe routing and basic traffic shaping, without turning the gateway into a second platform.

That’s why Kong or NGINX tend to fit here. Kong is a solid option when you need plugin-based controls and Kubernetes-native configuration patterns. NGINX fits when you want a lean, high-performance front door and are fine with handling API management needs elsewhere.
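The multiplication effect described at the start of this scenario can be made concrete with rough arithmetic. The function below is a back-of-the-envelope sketch. The name `gateway_overhead`, its parameters, and the traffic figures in the note are hypothetical, and it assumes the per-call overhead is pure CPU time, which gives an upper bound on compute cost.

```python
def gateway_overhead(requests_per_sec, overhead_ms, gateway_hops=1):
    """Estimate what a fixed per-call gateway overhead costs at a given volume.

    Returns (added_latency_ms, cpu_seconds_per_sec):
    - added latency each request sees from passing through the gateway
    - gateway work generated per wall-clock second, assuming overhead is all CPU
    """
    added_latency_ms = overhead_ms * gateway_hops
    cpu_seconds_per_sec = requests_per_sec * gateway_hops * overhead_ms / 1000.0
    return added_latency_ms, cpu_seconds_per_sec
```

With an assumed 20,000 requests per second and 2 ms of overhead per call, `gateway_overhead(20000, 2)` gives 2 ms of added latency and about 40 CPU-seconds of gateway work every second, roughly 40 fully busy cores under the pure-CPU assumption.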

Scenario B: External monetization

When you sell APIs to partners, speed is not the bottleneck. Trust is. The work is turning access into a product that can be requested, approved, measured, and billed without your team becoming the human workflow driver.

In the absence of that, what breaks first is partner onboarding and control. You need a real portal experience, seamless subscription and credential management, and commercial enforcement that matches reality, like quotas, plans, and invoices tied to actual usage instead of ad hoc tracking.

So when you’re evaluating a platform for that use case, the deciding factors are productization features, plan and quota enforcement, partner-grade analytics, and a portal that supports self-serve without losing governance.

That is why Apigee is a common fit for this scenario. It is designed for API programs where packaging, onboarding, and monetization are first-class concerns, even though it can be more platform than you need if the goal is only internal microservices ingress.

Scenario C: Event streaming

Exposing Kafka topics to the web is where most gateway thinking falls apart, because an event stream isn’t a request and response call. It has consumers that stay connected, it behaves differently under load, and it often needs to be presented in web-friendly forms like WebSockets or server-sent events, not just HTTP endpoints.

Still, the decision parameter is simple: can the gateway treat event-driven APIs as a first-class surface with real governance, or are you forcing streaming through an HTTP-shaped tool and hoping it behaves the way you need it to?

Once you answer that, you also want one consistent way to apply access control, subscriptions, and visibility across both REST APIs and streaming exposure.

That is why Gravitee is a good fit in this scenario. It is built to manage mixed portfolios where event exposure sits alongside traditional APIs, with the practical tradeoff that some advanced event and broker features may depend on enterprise licensing.
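To make the web-friendly forms mentioned above concrete, this sketch turns broker records into server-sent event frames and applies a per-subscriber topic filter. It is generic and not Gravitee's implementation. The `(offset, topic, payload)` tuples stand in for Kafka messages, no Kafka client is used, and `to_sse` and `stream` are hypothetical helper names.

```python
import json


def to_sse(event_id, event_type, payload) -> str:
    """Format one record as a server-sent event frame (id, event, data, blank line)."""
    # json.dumps escapes newlines inside strings, so the data field stays on one line.
    data = json.dumps(payload, separators=(",", ":"))
    return f"id: {event_id}\nevent: {event_type}\ndata: {data}\n\n"


def stream(records, allowed_topics):
    """Yield SSE frames only for topics the subscriber is allowed to read."""
    for offset, topic, payload in records:
        if topic in allowed_topics:
            yield to_sse(offset, topic, payload)
```

Using the record offset as the SSE `id` lets a reconnecting browser report the last event it saw, which is one way a gateway can resume a stream. The access filter is where subscription and plan checks would hook in.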

Scenario D: Developer experience

When developers ship changes frequently, the gateway becomes a shared control point, and shared control points create queues. Every change joins the line: a new route, a tweak to an auth rule, a small rate-limit adjustment.

If every change has to go through a central platform team, developer velocity drops, and the gateway turns into a bottleneck. But if every team manages gateway rules however they like, standards drift, authentication varies across APIs, and security becomes uneven.

The goal becomes clear: to optimize for developer experience, you need a tool that can handle self-serve changes with consistent outcomes.

Look for declarative configuration that can be reviewed and versioned, clean promotion through environments, and an extension model that fits the languages your developers already use. That’s why Tyk or Zuplo (not profiled above) fit here. Tyk works when you want packaged management building blocks alongside the gateway. Zuplo fits when code-first simplicity and fast iteration are the priority.
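One way to get self-serve changes with consistent outcomes is to run a policy check over the declarative config before it merges. The sketch below assumes a hypothetical route schema with `name`, `path`, `auth`, and `rate_limit_per_min` fields. It does not follow Tyk's or Zuplo's actual config formats, and the allowed auth types are an invented organization standard.

```python
REQUIRED_AUTH = {"jwt", "oauth2", "mtls"}   # assumed org-wide list of accepted auth types


def lint_routes(routes):
    """Return (route_name, problem) pairs for routes that break the shared standards."""
    problems = []
    seen_paths = {}
    for route in routes:
        name = route.get("name", "<unnamed>")
        # Every route must declare one of the approved auth types.
        if route.get("auth") not in REQUIRED_AUTH:
            allowed = ", ".join(sorted(REQUIRED_AUTH))
            problems.append((name, f"auth must be one of {allowed}"))
        # Every route must carry an explicit, positive rate limit.
        limit = route.get("rate_limit_per_min")
        if not isinstance(limit, int) or limit <= 0:
            problems.append((name, "rate_limit_per_min must be a positive integer"))
        # Two teams must not claim the same path.
        path = route.get("path")
        if path in seen_paths:
            problems.append((name, f"path {path} already claimed by {seen_paths[path]}"))
        else:
            seen_paths[path] = name
    return problems
```

Run in CI, a check like this lets teams change their own routes while the platform team reviews the rules instead of every individual change.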

Why choose DigitalAPI?

Gateway sprawl is what happens when different teams optimize for different constraints. One team chooses AWS API Gateway because the workload already lives in AWS, and they want a managed path. Another goes with Apigee for partner-facing APIs because onboarding, analytics, and commercial controls are part of the job. Platform teams may run Kong or NGINX in Kubernetes because predictable performance and Kubernetes-native operations matter more than bundled management features.

Over time, that mix becomes the default state, not the exception.

And that is where fragmentation sets in. APIs end up scattered across consoles and portals, so even basic discovery becomes work. Standards drift because each gateway encodes policy differently, which turns governance into case-by-case debates instead of a repeatable system. Then the everyday questions start taking far longer than they should. Who owns this API? Which version is actually live? What authentication pattern is the default, and what exceptions are allowed?

DigitalAPI.ai addresses this by sitting above your gateways and unifying how the estate is discovered, governed, and consumed, without forcing a rip-and-replace.

- Unified catalog and AI-powered search: One inventory across gateways, with faster discovery and less duplication.

- Multi-gateway developer portal: One place to publish docs, enable self-serve access, and support sandbox-style exploration across runtimes.

- Cross-gateway governance and security checks: Shared standards, policy checks, and guardrails that reduce drift between teams and environments.

- Usage and performance visibility: Consolidated analytics so ownership, adoption, and hotspots are visible without hopping dashboards.

- MCP-style AI readiness: Makes existing APIs more agent-consumable as automation and AI agent workflows become first-class consumers.

In short, you get one catalog, one search, and one governance model across Kong, Apigee, and AWS, and a clear path to making the whole estate AI-ready.

FAQs

1. Do I need a Service Mesh too?

It really depends on your use case. Use an API gateway for external traffic and edge policies. Add a service mesh when you need consistent service-to-service security, routing, and observability inside the cluster, without changing every service.

2. Is NGINX an API Gateway?

NGINX can act as an API gateway for routing, TLS termination, and basic controls. It usually lacks built-in API management features like developer portals, products, and governance, so teams often pair it with additional tooling.

3. What is the best open-source gateway?

There isn’t one best. Choose based on your main constraint: Kong for plugin-driven extensibility, Apache APISIX for high-performance cloud-native routing, Tyk for more packaged management features, and Gravitee for mixed REST plus event-driven APIs.

4. How do I handle authentication?

Centralize authentication at the gateway. Use OAuth2/OIDC for identity, validate JWTs at the edge, and use mTLS for high-trust clients. DigitalAPI.ai helps standardize these patterns across multiple gateways so teams don’t reinvent auth per API.
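As a minimal sketch of validating JWTs at the edge, the code below checks an HS256 signature, the audience, and the expiry using only the Python standard library. It is illustrative rather than production-ready. It assumes a shared secret and a single string `aud` claim, `sign_hs256` exists only to build tokens for the example, and real deployments typically rely on a maintained JWT library with asymmetric keys published by the identity provider.

```python
import base64
import hashlib
import hmac
import json
import time


def _b64url_decode(part: str) -> bytes:
    # JWT segments drop base64 padding, so restore it before decoding.
    return base64.urlsafe_b64decode(part + "=" * (-len(part) % 4))


def _b64url_encode(raw: bytes) -> str:
    return base64.urlsafe_b64encode(raw).rstrip(b"=").decode()


def sign_hs256(claims: dict, secret: bytes) -> str:
    """Example-only helper: build a token the way an identity provider would."""
    header = _b64url_encode(json.dumps({"alg": "HS256", "typ": "JWT"}).encode())
    body = _b64url_encode(json.dumps(claims).encode())
    sig = hmac.new(secret, f"{header}.{body}".encode(), hashlib.sha256).digest()
    return f"{header}.{body}.{_b64url_encode(sig)}"


def validate_hs256(token: str, secret: bytes, audience: str, now=None):
    """Return the claims if signature, audience, and expiry check out, else None."""
    try:
        header_b64, body_b64, sig_b64 = token.split(".")
        header = json.loads(_b64url_decode(header_b64))
        if header.get("alg") != "HS256":          # never trust another alg from the token
            return None
        expected = hmac.new(secret, f"{header_b64}.{body_b64}".encode(), hashlib.sha256).digest()
        if not hmac.compare_digest(expected, _b64url_decode(sig_b64)):
            return None
        claims = json.loads(_b64url_decode(body_b64))
        now = time.time() if now is None else now
        if claims.get("aud") != audience or claims.get("exp", 0) <= now:
            return None
        return claims
    except (ValueError, TypeError, AttributeError):
        return None                               # malformed tokens are simply rejected
```

Doing this once at the gateway means backend services can trust forwarded identity claims instead of each re-implementing signature and expiry checks.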

You’ve spent years battling your API problem. Give us 60 minutes to show you the solution.
