
Apigee vs Kong: Enterprise API Management vs. Modern Microservices Gateway


TL;DR

Apigee acts as a comprehensive "control tower" optimized for governance and monetization, while Kong serves as a lightweight, decentralized engine built for sub-millisecond latency and microservices.

For high-frequency use cases, Kong wins on raw throughput and low resource usage, whereas Apigee prioritizes rich policy execution and reliability over speed, making it better suited for external partner management.

Apigee provides deep, native integration with the Google Cloud ecosystem, while Kong offers a true "run anywhere" model that supports multi-cloud and hybrid environments without vendor lock-in.

For most API Architects and CTOs, the final decision on API infrastructure inevitably comes down to Apigee versus Kong. This is not just a comparison of features. It is a collision of philosophies. Apigee (Google Cloud) represents the centralized, full-lifecycle management platform designed for strict governance and monetization. Kong represents the decentralized, cloud-native gateway built for sub-millisecond latency and microservices. Choosing the wrong one doesn't just mean swapping software later. It means fundamentally rethinking your traffic architecture. This guide dissects the trade-offs to help you make the right call.

Apigee: The centralized API platform

Apigee, acquired by Google in 2016 and now part of Google Cloud, is the quintessential Enterprise API Management Platform. It is designed to act as a comprehensive "control tower" for an organization's digital assets and is built to manage the entire API lifecycle, from design and security to complex monetization and analytics. Apigee excels in environments where centralized governance, strict compliance, and business-focused visibility are paramount. It is a heavyweight, full-stack solution favored by organizations deeply embedded in the Google ecosystem or those requiring robust, out-of-the-box tools to expose legacy data to external partners.

Kong: The modern microservices gateway

Kong represents the modern, cloud-native approach to connectivity. Born from open-source roots and built on the battle-tested, high-performance NGINX proxy, Kong is architected for speed, modularity, and microservices. It is the "developer's choice" - lightweight, platform-agnostic, and designed to sit as close to the code as possible. Whether deployed as a sidecar in Kubernetes or an Ingress Controller, Kong prioritizes low latency and high throughput, making it the de facto standard for organizations shifting toward decentralized, CI/CD-driven architectures.

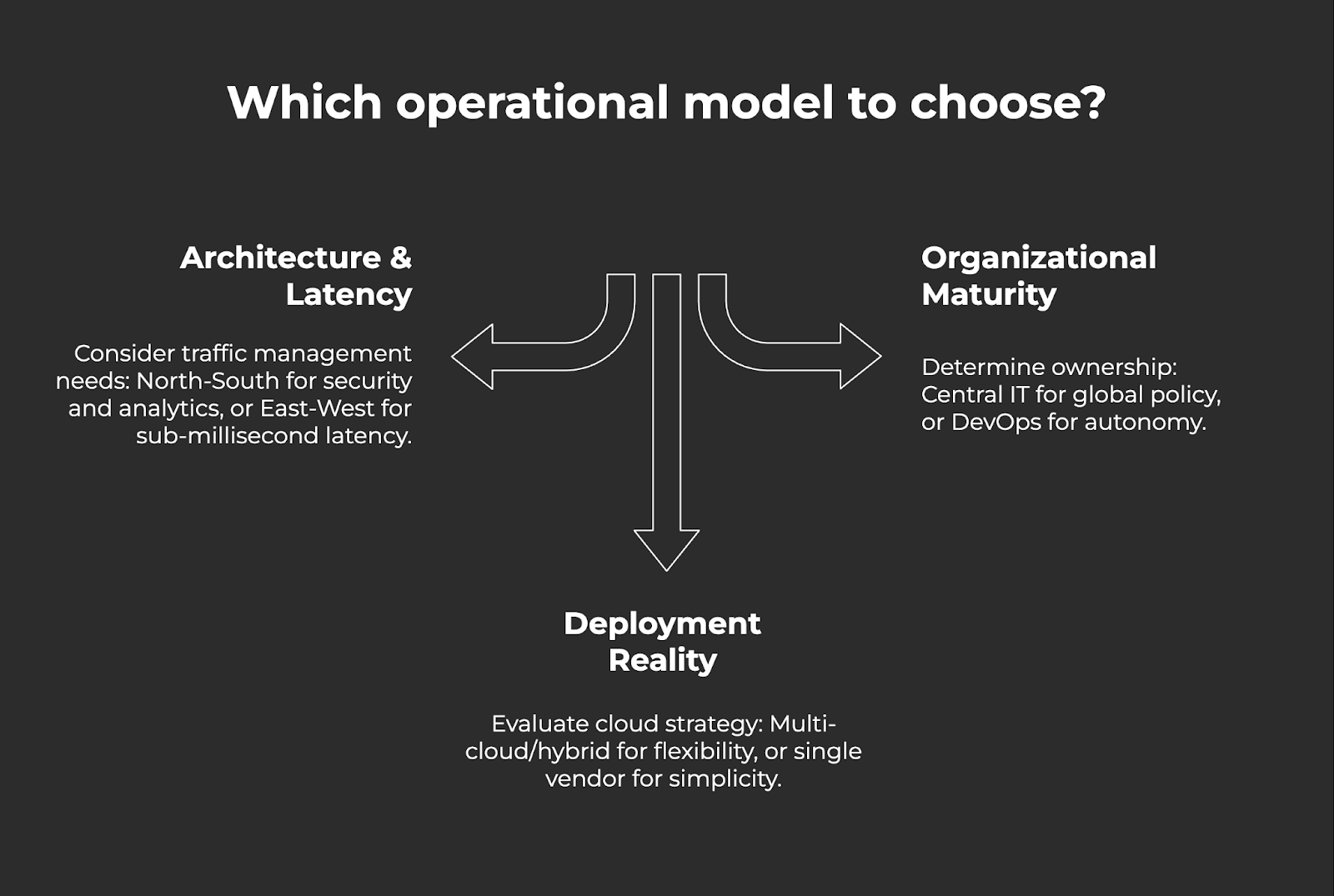

The 3 strategic pillars of decision

Before diving into the feature-by-feature comparison, it is vital to set the context. Buyers are not just comparing software; they are choosing an operational model. The decision typically hinges on three strategic pillars:

1. Architecture & Latency (North-South vs. East-West)

Are you primarily managing "North-South" traffic - external partners and mobile apps accessing your internal data? If so, the slight latency of a centralized gateway is an acceptable trade-off for security and analytics. Or, are you managing "East-West" traffic - millions of internal calls between microservices where sub-millisecond latency is non-negotiable? The answer often dictates whether you need a rich management platform or a high-performance proxy.
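The latency argument is mostly multiplication. The snippet below is a back-of-the-envelope model, assuming a request that crosses the gateway once at the edge versus one that crosses it on every hop of a sequential internal call chain. The per-hop overhead figures are hypothetical placeholders, not measurements of Apigee or Kong.

```python
def gateway_overhead_ms(per_hop_overhead_ms: float, hops: int) -> float:
    """Total gateway overhead added to one request in a sequential call chain.

    Assumes each hop crosses the gateway exactly once and hops run one after
    another (no parallel fan-out), so per-hop overheads simply add up.
    """
    if hops < 0:
        raise ValueError("hops must be non-negative")
    return per_hop_overhead_ms * hops


# Hypothetical figures for illustration only, not benchmarks of either product.
NORTH_SOUTH_HOPS = 1  # partner app -> edge gateway -> backend
EAST_WEST_HOPS = 8    # one user request passing through 8 internal services in turn

for label, per_hop in [("heavier policy chain", 15.0), ("lean proxy", 0.5)]:
    edge = gateway_overhead_ms(per_hop, NORTH_SOUTH_HOPS)
    internal = gateway_overhead_ms(per_hop, EAST_WEST_HOPS)
    print(f"{label}: edge {edge:.1f} ms, internal chain {internal:.1f} ms")
```

With these placeholder numbers, the heavier chain costs 15 ms at the edge but 120 ms across the internal chain, while the lean proxy stays at 4 ms. An overhead that is tolerable once becomes significant when it is paid on every internal hop.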

2. Deployment reality (Centralized vs. Distributed)

Are you moving toward a multi-cloud or hybrid reality where the gateway must run identically on AWS, Azure, and on-premises data centers? Or are you comfortable with a managed control plane hosted by a single vendor (Google)? The "Run Anywhere" requirement is a major dividing line between the two platforms.

3. Organizational maturity (Central IT vs. DevOps)

Who will own the gateway? If it is a Central IT or Security team mandating global policy, a centralized dashboard is essential. If ownership is distributed to autonomous "Spotify-model" squads who manage their own routing and configs via GitOps, a decentralized, declarative tool is required.

Apigee vs Kong: Detailed comparison

While both platforms serve the fundamental purpose of managing API traffic, they do so with vastly different engines and philosophies.

1. Architecture and Performance

The architectural divergence is the most significant differentiator. Apigee offers a "batteries-included," centralized platform, whereas Kong offers a modular, decentralized engine built for speed.

Apigee: The Centralized Proxy

Apigee utilizes a centralized, full-stack architecture. In its modern iteration (Apigee X), the management plane is fully managed by Google Cloud, while the runtime (where traffic flows) is a managed instance peered with your network. This setup creates a distinct "layer" that separates consumers from backend services.

- The Enterprise Context: For a Retail Bank, this architecture is reassuring. It ensures that every single API call, regardless of origin, passes through a consistent enforcement point for heavy PCI-DSS compliance checks and audit logging.

- The Trade-off: This centralization introduces architectural "weight." Traffic must route through specific ingress points defined by the managed service, which can introduce network hops and latency. It acts as a heavy curtain, ideal for policing but potentially bottlenecking high-velocity internal traffic.

Kong: The Lightweight Ingress

Kong is built on OpenResty, which bundles NGINX with LuaJIT, and uses a decentralized architecture. It is designed to be deployed anywhere: as a centralized gateway, as an Ingress Controller for Kubernetes, or even as a sidecar in a service mesh.

- The Modern Context: For a High-Frequency Trading platform or a Real-Time AdTech bidding engine, Kong is the logical choice. Its small footprint allows it to process requests with minimal resource overhead, handling tens of thousands of requests per second (RPS) on modest hardware.

- The Advantage: Because the data plane is decoupled from the control plane, Kong nodes can continue serving traffic even if the management layer goes down, offering a level of resilience critical for distributed microservices.
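The resilience property can be illustrated with a toy model. This is a hypothetical sketch, not Kong's implementation: a data-plane node keeps the last configuration it received and continues routing with it when a fetch from the control plane fails. The `DataPlaneNode` class and the route table format are illustrative assumptions.

```python
from typing import Callable, Dict, Optional

Config = Dict[str, str]  # hypothetical: route path -> upstream URL


class DataPlaneNode:
    """Toy data plane that caches the last config received from a control plane."""

    def __init__(self, fetch_config: Callable[[], Config]):
        self._fetch_config = fetch_config
        self._last_known_good: Optional[Config] = None

    def refresh(self) -> bool:
        """Pull fresh config; keep the cached copy if the control plane is down."""
        try:
            self._last_known_good = self._fetch_config()
            return True
        except ConnectionError:
            return False  # control plane unreachable: keep serving with cached config

    def route(self, path: str) -> str:
        if self._last_known_good is None:
            raise RuntimeError("no configuration received yet")
        return self._last_known_good.get(path, "404")


# Simulate a control plane that answers once and then goes down.
responses = [{"/orders": "http://orders.internal:8080"}]


def flaky_control_plane() -> Config:
    if responses:
        return responses.pop()
    raise ConnectionError("control plane unreachable")


node = DataPlaneNode(flaky_control_plane)
node.refresh()                # succeeds and caches the config
node.refresh()                # fails, cached config is retained
print(node.route("/orders"))  # http://orders.internal:8080
```

The key design choice is that a failed refresh is not fatal to traffic. Only the ability to change configuration is lost until the control plane returns.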

Verdict: Choose Apigee for centralized control of external-facing (North-South) APIs where policy depth outweighs raw speed. Choose Kong for low-latency internal communication (East-West) and microservices where performance is critical.

2. Key features and functionality

The feature comparison reveals a choice between Apigee's extensive, pre-built business platform and Kong's modular, developer-focused extensibility.

Apigee: Feature breadth and business logic

Apigee shines in its comprehensive breadth. It is not just a technical tool; it is a business platform. It includes advanced API design tools, deeply integrated monetization frameworks (rate plans, billing integration), and sophisticated threat protection capabilities out of the box.

- Industry Example: A Healthcare Provider exposing patient data to third-party insurance apps needs robust consent management, complex OAuth flows, and potential monetization of data access. Apigee provides the "Rate Plans" and "Monetization" modules pre-built, allowing the provider to launch a paid API tier without writing custom billing code.

- Implementation: However, Apigee’s policy engine relies on XML-based configuration. While powerful, modern developers accustomed to YAML or JSON often find XML verbose and legacy-oriented.
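To make the XML point concrete, here is an Apigee SpikeArrest policy in its documented XML form. It is read with Python's standard library and mapped to a dictionary shaped like a Kong `rate-limiting` plugin entry in a declarative config. The mapping is an illustrative assumption, not a migration tool. SpikeArrest smooths traffic into evenly spaced intervals, while Kong's rate-limiting plugin counts requests in fixed windows, so the two are roughly comparable rather than equivalent.

```python
import json
import xml.etree.ElementTree as ET

# An Apigee SpikeArrest policy as it would appear in a proxy bundle.
APIGEE_POLICY = """
<SpikeArrest async="false" continueOnError="false" enabled="true" name="Spike-Arrest-1">
    <DisplayName>Spike Arrest-1</DisplayName>
    <Rate>30ps</Rate>
</SpikeArrest>
"""

# SpikeArrest rate suffixes: "ps" = per second, "pm" = per minute.
UNIT_TO_KONG_FIELD = {"ps": "second", "pm": "minute"}


def spike_arrest_to_kong(xml_text: str) -> dict:
    """Map a SpikeArrest <Rate> such as '30ps' to a Kong rate-limiting plugin dict."""
    root = ET.fromstring(xml_text.strip())
    rate = root.findtext("Rate")
    if rate is None:
        raise ValueError("SpikeArrest policy has no <Rate> element")
    count, unit = int(rate[:-2]), rate[-2:]
    return {
        "name": "rate-limiting",
        "config": {UNIT_TO_KONG_FIELD[unit]: count, "policy": "local"},
    }


print(json.dumps(spike_arrest_to_kong(APIGEE_POLICY), indent=2))
```

The XML carries one meaningful setting, the rate, inside several lines of markup. That ratio is the verbosity developers tend to notice.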

Kong: Feature depth and extensibility

Kong focuses on extensibility. Its core is lean, ensuring high performance, but its power lies in its vast plugin ecosystem. Need authentication? Rate limiting? Log transformation? There is a plugin for that. If a feature does not exist, developers can write custom plugins in Lua, Go, Python, or JavaScript.

- Industry Example: An eCommerce Giant dealing with Black Friday traffic might need custom caching logic that is specific to its inventory SKUs. With Kong, it can write a custom plugin in Go that interacts directly with the request path to handle caching exactly as needed, rather than relying on a generic policy.

- Implementation: This approach allows teams to build exactly the gateway they need without the bloat of unused features. However, "assembling" your platform via plugins requires higher engineering maturity than Apigee’s "switch-on" approach.
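The SKU caching example can be sketched independently of the plugin language. The Python below is used for readability, while the example above uses Go. It shows only the business logic such a plugin might contain: a cache key is derived from the SKU in the path, and query parameters that do not affect the response are ignored. The `/products/<sku>` path layout, the `IGNORED_PARAMS` set, and the `TtlCache` class are hypothetical. Wiring this into Kong's request phases would go through one of Kong's plugin development kits, which is not shown here.

```python
import re
import time
from typing import Dict, Optional, Tuple
from urllib.parse import parse_qsl, urlencode, urlsplit

# Hypothetical: tracking params that never change the product payload.
IGNORED_PARAMS = {"utm_source", "utm_campaign", "ref"}
SKU_PATH = re.compile(r"^/products/(?P<sku>[A-Z0-9-]+)$")


def sku_cache_key(url: str) -> Optional[str]:
    """Return a cache key for product URLs, or None if the URL is not cacheable."""
    parts = urlsplit(url)
    match = SKU_PATH.match(parts.path)
    if match is None:
        return None
    # Keep only meaningful params, sorted so equivalent URLs share one key.
    kept = sorted((k, v) for k, v in parse_qsl(parts.query) if k not in IGNORED_PARAMS)
    return f"sku:{match['sku']}?{urlencode(kept)}"


class TtlCache:
    """Minimal in-memory cache with per-entry expiry (no size-based eviction)."""

    def __init__(self, ttl_seconds: float):
        self.ttl = ttl_seconds
        self._store: Dict[str, Tuple[float, bytes]] = {}

    def get(self, key: str) -> Optional[bytes]:
        entry = self._store.get(key)
        if entry is None or entry[0] < time.monotonic():
            self._store.pop(key, None)  # drop expired entries lazily
            return None
        return entry[1]

    def put(self, key: str, body: bytes) -> None:
        self._store[key] = (time.monotonic() + self.ttl, body)


cache = TtlCache(ttl_seconds=5)
key = sku_cache_key("/products/SKU-123?utm_source=mail&region=eu")
cache.put(key, b'{"sku": "SKU-123", "stock": 42}')
# A different tracking parameter still hits the same entry.
print(cache.get(sku_cache_key("/products/SKU-123?region=eu&ref=banner")))
```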

Verdict: Apigee wins on "Business" features (Monetization, Analytics, Partner Management). Kong wins on Technical Extensibility (Plugins, Custom Logic, lightweight footprint).

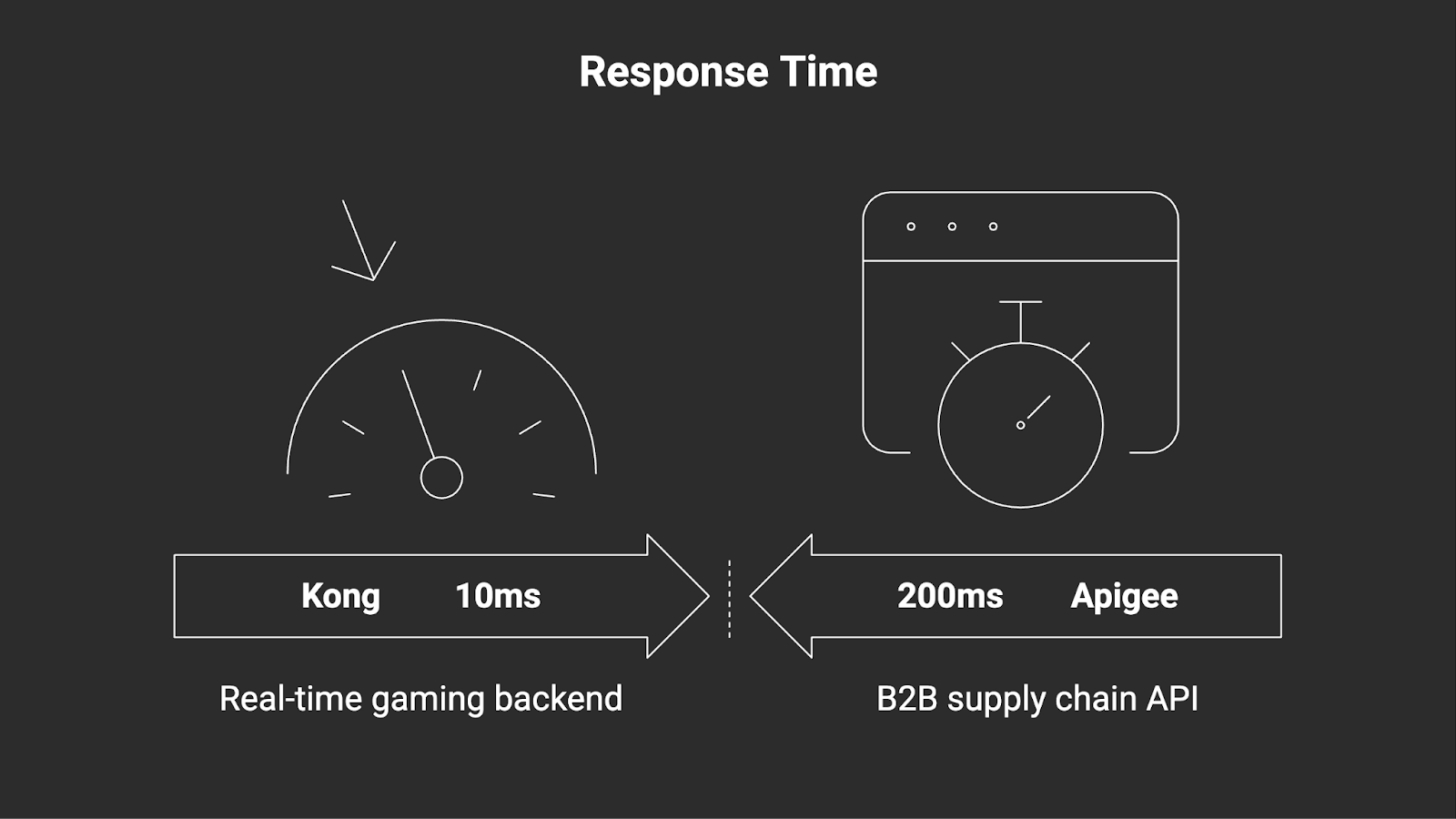

3. Performance and scalability

In high-stakes environments, the trade-off between rich policy execution and raw throughput becomes the deciding factor.

Kong: The performance leader

Kong is widely regarded as the performance leader in the gateway market. Benchmarks consistently show Kong delivering lower latency and higher throughput compared to Java-based or heavier enterprise gateways. Its ability to handle high concurrency with low memory usage makes it the standard for high-traffic industries.

- Scalability Profile: Kong scales linearly. If a media streaming service sees a spike in viewership, they can spin up new Kong pods in Kubernetes in seconds. Kong’s architecture avoids the "hair-pinning" of traffic that can occur with managed SaaS gateways, keeping data paths efficient and direct.
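Linear scaling turns capacity planning into simple arithmetic. This is a minimal sketch, assuming each gateway pod sustains a measured, roughly constant throughput and that you keep spare headroom for node failures and bursts. The throughput numbers in the example are hypothetical and should be replaced with your own load-test results.

```python
import math


def pods_needed(peak_rps: float, rps_per_pod: float, headroom: float = 0.3) -> int:
    """Pods required to serve peak_rps while keeping `headroom` (0.3 = 30%) spare.

    Assumes linear scaling: n pods serve n * rps_per_pod requests per second.
    """
    if rps_per_pod <= 0 or not 0 <= headroom < 1:
        raise ValueError("rps_per_pod must be > 0 and 0 <= headroom < 1")
    usable_per_pod = rps_per_pod * (1 - headroom)
    return max(1, math.ceil(peak_rps / usable_per_pod))


# Hypothetical: normal evening load vs. a viewership spike.
print(pods_needed(peak_rps=12_000, rps_per_pod=5_000))  # 4
print(pods_needed(peak_rps=60_000, rps_per_pod=5_000))  # 18
```

If scaling were not linear, for example because of a shared database or rate-limit counter, this formula would underestimate the required pod count.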

Apigee: Reliability over raw speed

Apigee prioritizes reliability, policy depth, and consistency over raw speed. While robust, the processing overhead of its rich policy chain - executing XML checks, transforming payloads from SOAP to REST, verifying diverse security tokens - adds latency.

- Scalability profile: Apigee X scales via Google Cloud’s massive infrastructure. While it can handle immense volume, the latency per request is generally higher than Kong's. For a B2B supply chain API where a 200ms response is acceptable, this is fine. For a real-time gaming backend requiring 10ms response times, Apigee’s overhead can be prohibitive.
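To see why mediation adds per-request work, consider what a SOAP-to-REST step has to do on every call: parse an XML envelope, strip namespaces, and re-serialize the body as JSON. The sketch below uses Python's standard library and a hypothetical `GetStockResponse` payload. In Apigee, this step would normally be configured with policies such as XMLToJSON rather than written by hand.

```python
import json
import xml.etree.ElementTree as ET

SOAP_NS = "http://schemas.xmlsoap.org/soap/envelope/"

# Hypothetical legacy response from a SOAP backend.
SOAP_RESPONSE = f"""<soap:Envelope xmlns:soap="{SOAP_NS}">
  <soap:Body>
    <GetStockResponse xmlns="urn:example:inventory">
      <Sku>SKU-123</Sku>
      <Quantity>42</Quantity>
    </GetStockResponse>
  </soap:Body>
</soap:Envelope>"""


def local_name(tag: str) -> str:
    """Drop the '{namespace}' prefix ElementTree puts on qualified tags."""
    return tag.rsplit("}", 1)[-1]


def soap_body_to_json(xml_text: str) -> str:
    """Convert the first element inside soap:Body into a flat JSON object.

    Values stay strings; a real mediation layer would also map types and arrays.
    """
    envelope = ET.fromstring(xml_text)
    body = envelope.find(f"{{{SOAP_NS}}}Body")
    if body is None or len(body) == 0:
        raise ValueError("no SOAP Body content")
    payload = body[0]
    fields = {local_name(child.tag): (child.text or "").strip() for child in payload}
    return json.dumps({local_name(payload.tag): fields})


print(soap_body_to_json(SOAP_RESPONSE))
# {"GetStockResponse": {"Sku": "SKU-123", "Quantity": "42"}}
```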

Verdict: Kong is the clear winner for high-throughput, low-latency requirements. Apigee is sufficient for standard enterprise edge traffic, but is not optimized for real-time constraints.

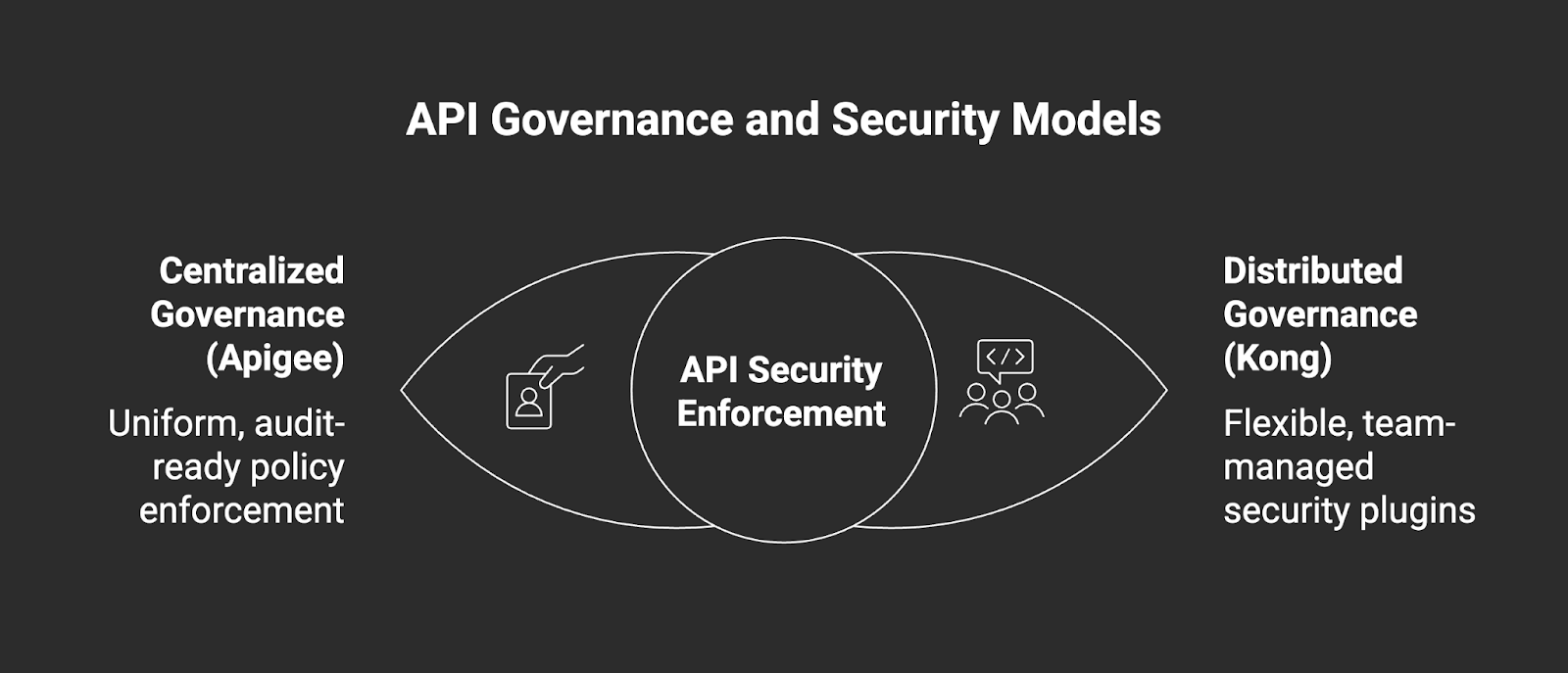

4. Governance and security

Security philosophies differ sharply between top-down, centralized enforcement and distributed, developer-led guardrails.

Apigee: The centralized sheriff

Apigee enforces a centralized governance model. It is designed for the "Central IT" or "CISO" team that needs to enforce uniform security standards across the entire organization. Security policies (OAuth, Spike Arrest, SQL Injection protection) are applied globally or per proxy via the UI.

- Compliance Advantage: In highly regulated sectors like Banking, auditability is key. A security auditor can log into Apigee and instantly visualize the exact policy chain applied to every endpoint. The "Shared Flows" feature allows security teams to force a mandatory security check (e.g., validating a JWT) on every API before the request ever reaches the developer’s code.
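A mandatory JWT check of the kind a Shared Flow might enforce boils down to verifying the token's signature and expiry before anything else runs. Apigee would express this with its VerifyJWT policy in XML. The Python below is only a standard-library sketch of the same check for an HS256 (HMAC-SHA256) token, using a hypothetical shared secret. Production code should use a maintained JWT library and also validate claims such as issuer and audience.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional


def b64url_decode(segment: str) -> bytes:
    """Decode unpadded base64url, the encoding JWT segments use."""
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def b64url_encode(raw: bytes) -> str:
    return base64.urlsafe_b64encode(raw).rstrip(b"=").decode("ascii")


def verify_hs256(token: str, secret: bytes, now: Optional[float] = None) -> dict:
    """Return the token's claims if its HS256 signature and 'exp' are valid, else raise."""
    parts = token.split(".")
    if len(parts) != 3:
        raise ValueError("malformed token")
    header_b64, payload_b64, sig_b64 = parts
    header = json.loads(b64url_decode(header_b64))
    if header.get("alg") != "HS256":
        raise ValueError("unexpected algorithm")  # rejects 'none' and algorithm swaps
    signing_input = f"{header_b64}.{payload_b64}".encode("ascii")
    expected = hmac.new(secret, signing_input, hashlib.sha256).digest()
    if not hmac.compare_digest(expected, b64url_decode(sig_b64)):  # constant-time compare
        raise ValueError("bad signature")
    claims = json.loads(b64url_decode(payload_b64))
    current = time.time() if now is None else now
    if "exp" in claims and current >= claims["exp"]:
        raise ValueError("token expired")
    return claims


def sign_hs256(claims: dict, secret: bytes) -> str:
    """Helper to mint a test token; in practice an identity provider issues these."""
    header = b64url_encode(json.dumps({"alg": "HS256", "typ": "JWT"}).encode())
    payload = b64url_encode(json.dumps(claims).encode())
    sig = hmac.new(secret, f"{header}.{payload}".encode("ascii"), hashlib.sha256).digest()
    return f"{header}.{payload}.{b64url_encode(sig)}"


SECRET = b"hypothetical-shared-secret"
token = sign_hs256({"sub": "partner-42", "exp": int(time.time()) + 300}, SECRET)
print(verify_hs256(token, SECRET)["sub"])  # partner-42
```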

Kong: The distributed guardrails

Kong employs a distributed, plugin-based security model. Security is applied via plugins (e.g., OIDC, ACL, mTLS), which can be configured globally or scoped to specific services/routes.

- DevSecOps model: This fits the "DevSecOps" culture, where individual squads manage their own security configurations as code. A team building the "Checkout Service" can apply a strict rate-limiting plugin to their specific route without needing Central IT to approve a global config change. While powerful, it requires discipline (often via linting tools) to ensure that every decentralized team is actually applying the correct security plugins.
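That linting discipline can be as simple as a CI script that walks the declarative configuration and fails the build when a route has no authentication plugin. The sketch below assumes a config already loaded into Python, for example from a decK YAML file, in the general shape of Kong's declarative format with plugins nested globally, per service, or per route. For simplicity, it does not resolve top-level plugin entries that reference a service or route by name. The `REQUIRED_AUTH` set and the service names are hypothetical team policy, not Kong defaults.

```python
from typing import Dict, List, Set

# Hypothetical policy: every route must be covered by at least one of these.
REQUIRED_AUTH = {"jwt", "openid-connect", "key-auth"}

CONFIG = {
    "_format_version": "3.0",
    "plugins": [{"name": "prometheus"}],  # global plugins
    "services": [
        {
            "name": "checkout",
            "url": "http://checkout.internal:8080",
            "plugins": [{"name": "jwt"}],
            "routes": [{
                "name": "checkout-api",
                "paths": ["/checkout"],
                # Team-owned, route-scoped rate limit.
                "plugins": [{"name": "rate-limiting", "config": {"second": 20, "policy": "local"}}],
            }],
        },
        {
            "name": "catalog",
            "url": "http://catalog.internal:8080",
            "routes": [{"name": "catalog-api", "paths": ["/catalog"]}],
        },
    ],
}


def plugin_names(entity: Dict) -> Set[str]:
    """Names of enabled plugins attached directly to a config entity."""
    return {p["name"] for p in entity.get("plugins", []) if p.get("enabled", True)}


def routes_missing_auth(config: Dict) -> List[str]:
    """Routes where global + service + route plugins include no required auth plugin."""
    global_plugins = plugin_names(config)
    missing = []
    for service in config.get("services", []):
        inherited = global_plugins | plugin_names(service)
        for route in service.get("routes", []):
            if not (inherited | plugin_names(route)) & REQUIRED_AUTH:
                missing.append(route["name"])
    return missing


print(routes_missing_auth(CONFIG))  # ['catalog-api']
```

A non-empty result would fail the pipeline. Each squad keeps ownership of its own routes, and the organization still gets a uniform security floor.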

Verdict: Apigee for rigid, compliance-heavy industries requiring centralized auditability. Kong for agile, engineering-led organizations comfortable with distributed responsibility.

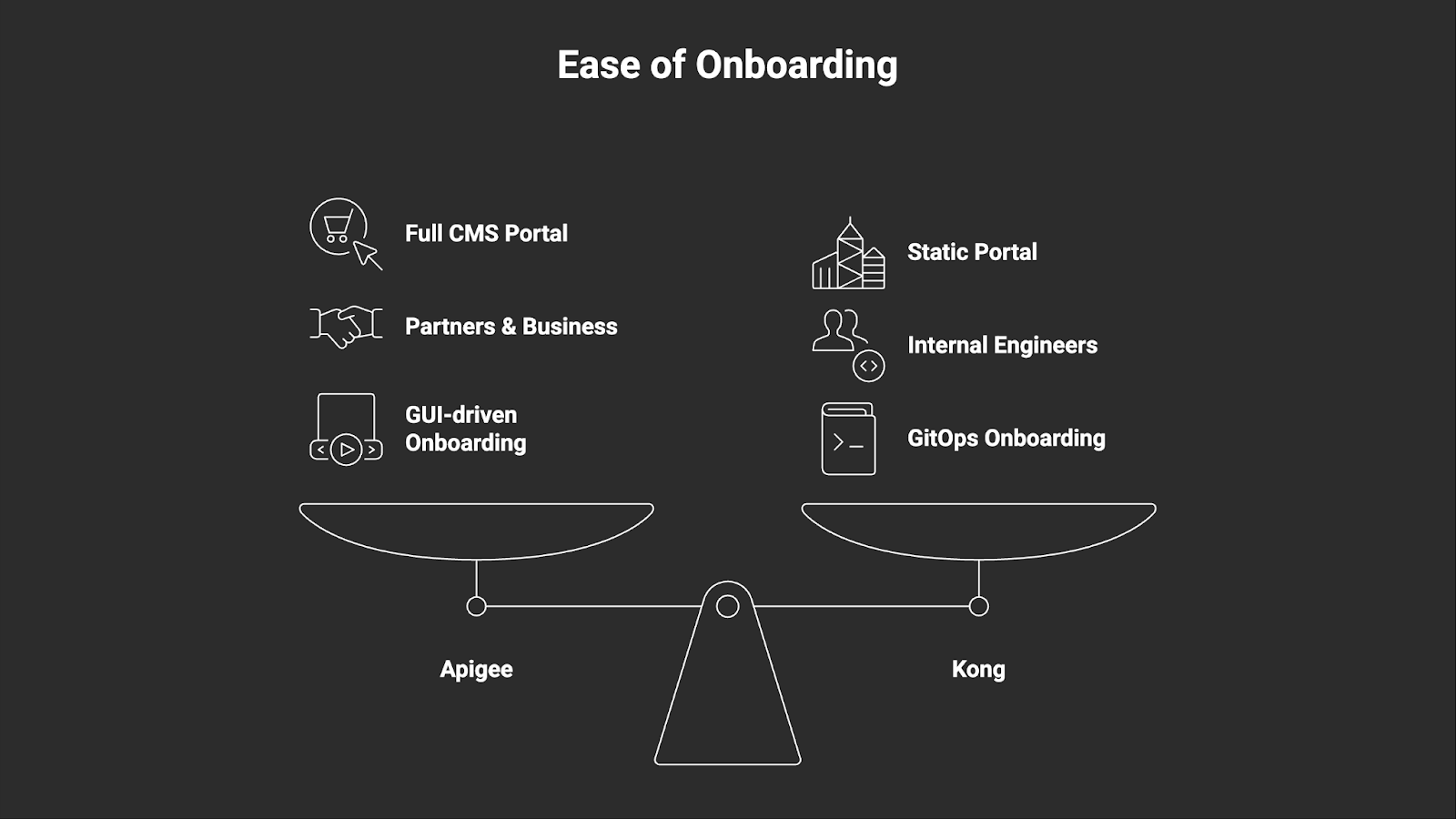

5. Developer onboarding

The speed at which internal teams and external partners can adopt your APIs depends heavily on the portal and onboarding workflow.

Apigee: The "Apple store" experience

Apigee offers a mature, CMS-style Developer Portal (integrated and customizable). It allows non-technical product managers to publish documentation, manage API keys, and onboard partners via a polished GUI.

- User experience: It feels like a finished product - complete with forums, blogs, and interactive documentation - ready to be presented to external partners. If you are a Telecom company opening up 5G APIs to external game developers, you want the polished, branded experience Apigee provides.

Kong: The GitOps experience

Kong’s portal capabilities have improved significantly with Kong Konnect, but they remain utilitarian compared to Apigee’s customization capabilities. Kong leans heavily on CI/CD and GitOps for onboarding.

- User experience: "Onboarding" in the Kong world often means checking out a Git repository, defining an Ingress object or a declarative YAML configuration, and pushing it to the pipeline. This is paradise for backend engineers who hate clicking through UIs, but it can be intimidating for non-technical business partners or less mature developer ecosystems.
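In the Kubernetes version of this workflow, the file a team commits is typically a pair of manifests. One is an Ingress handled by the Kong Ingress Controller. The other is a KongPlugin custom resource, referenced through the `konghq.com/plugins` annotation. The Python below builds both as plain dictionaries and prints them as JSON, which `kubectl apply -f` accepts as well as YAML. The service name, path, and rate limit are hypothetical, and the API versions should be checked against the controller version you run.

```python
import json

PLUGIN_NAME = "checkout-rate-limit"  # hypothetical names throughout

kong_plugin = {
    "apiVersion": "configuration.konghq.com/v1",
    "kind": "KongPlugin",
    "metadata": {"name": PLUGIN_NAME},
    "plugin": "rate-limiting",
    "config": {"minute": 600, "policy": "local"},
}

ingress = {
    "apiVersion": "networking.k8s.io/v1",
    "kind": "Ingress",
    "metadata": {
        "name": "checkout",
        # Attaches the KongPlugin above to the routes generated from this Ingress.
        "annotations": {"konghq.com/plugins": PLUGIN_NAME},
    },
    "spec": {
        "ingressClassName": "kong",
        "rules": [{
            "http": {
                "paths": [{
                    "path": "/checkout",
                    "pathType": "Prefix",
                    "backend": {"service": {"name": "checkout", "port": {"number": 8080}}},
                }]
            }
        }],
    },
}

# A v1 List lets a single file hold both objects for `kubectl apply -f`.
manifest = {"apiVersion": "v1", "kind": "List", "items": [kong_plugin, ingress]}
print(json.dumps(manifest, indent=2))
```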

Verdict: Apigee for external partner ecosystems and monetization. Kong for internal developer enablement and automation-focused teams.

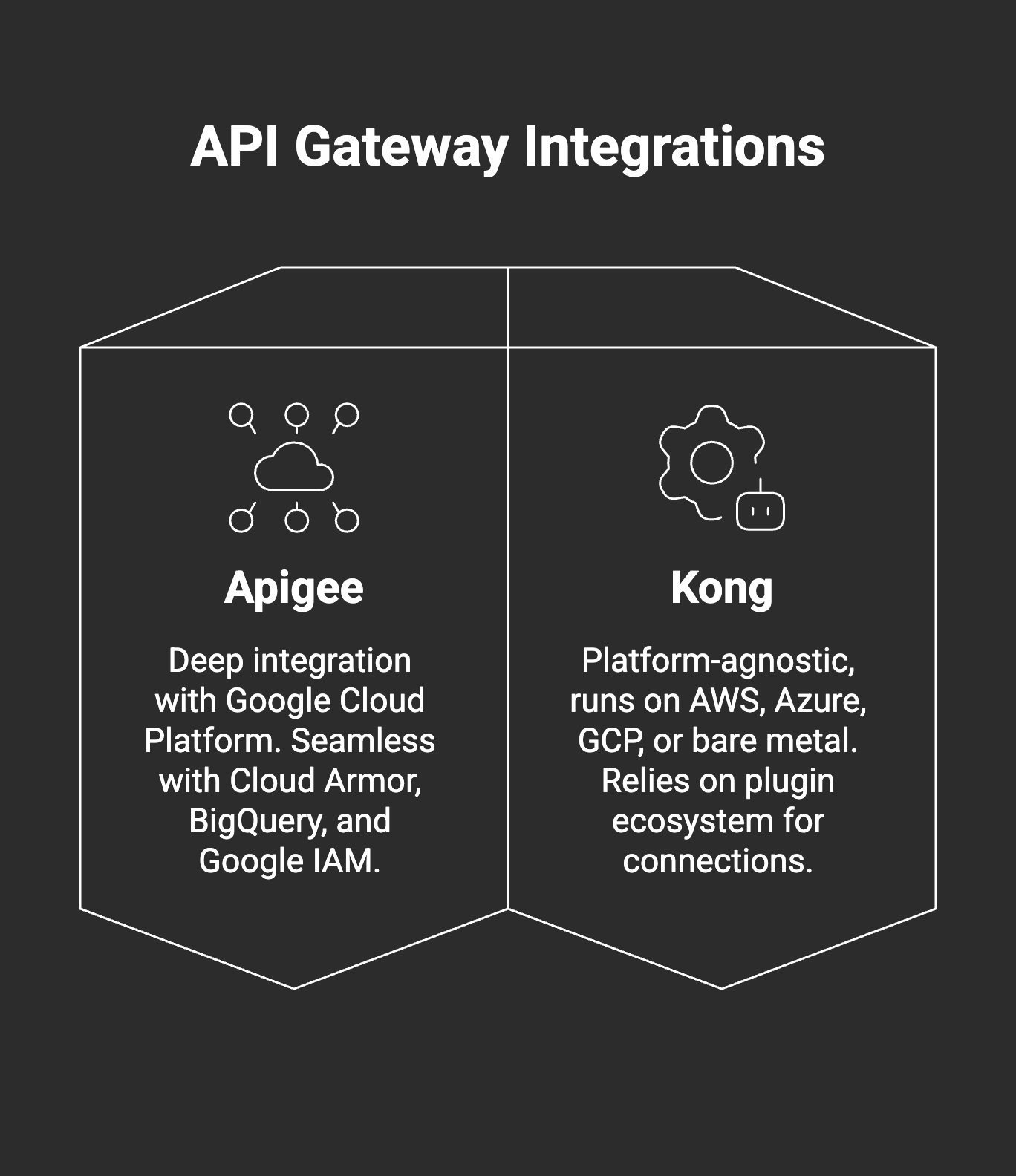

6. Integrations

The choice often depends on whether you need deep, native alignment with Google Cloud or a platform-agnostic ecosystem.

Apigee: The Google Cloud powerhouse

Apigee provides deep, native integration with Google Cloud Platform (GCP). It integrates seamlessly with Cloud Armor (DDoS protection), BigQuery (analytics), and Google IAM.

- Ecosystem: If your infrastructure is already on GCP, Apigee feels like a natural extension of your stack. However, integrating with non-Google services often requires generic API callouts or extensions, which can feel less "native" when your backend is on AWS or Azure.

Kong: The Switzerland of gateways

Kong is platform-agnostic. It runs equally well on AWS, Azure, GCP, or bare metal. Its integration strategy relies on its plugin ecosystem, which connects to virtually any logging service (Datadog, Splunk, Prometheus), auth provider (Okta, Keycloak), or database (Redis, Postgres).

- Ecosystem: Kong does not penalize you for using a multi-cloud strategy. A Logistics Company running workloads across AWS (for compute) and Azure (for heavy data) can deploy Kong nodes in both clouds, managed by a single control plane, ensuring consistent policy enforcement without lock-in.
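In Kong's self-managed hybrid mode, the "one control plane, many clouds" layout is mostly configuration. The sketch below generates environment variables for data-plane nodes in two clouds. It uses Kong's hybrid-mode settings `role`, `database`, `cluster_control_plane`, `cluster_cert`, and `cluster_cert_key`, which are exposed as `KONG_*` environment variables. The control-plane hostname, certificate paths, and pool names are hypothetical. Konnect-managed data planes use connection settings generated by Konnect instead.

```python
from typing import Dict, List

CONTROL_PLANE = "cp.example.internal:8005"  # hypothetical host; 8005 is Kong's default cluster port


def data_plane_env(cert_path: str, key_path: str) -> Dict[str, str]:
    """Environment for a Kong data-plane node in hybrid mode (shared-certificate setup)."""
    return {
        "KONG_ROLE": "data_plane",
        "KONG_DATABASE": "off",  # data planes receive config from the CP, no local database
        "KONG_CLUSTER_CONTROL_PLANE": CONTROL_PLANE,
        "KONG_CLUSTER_CERT": cert_path,
        "KONG_CLUSTER_CERT_KEY": key_path,
    }


# Hypothetical node pools in two clouds; both get identical settings.
POOLS: List[str] = ["aws-us-east-1", "azure-westeurope"]

for pool in POOLS:
    env = data_plane_env("/etc/kong/cluster.crt", "/etc/kong/cluster.key")
    print(pool, " ".join(f"{k}={v}" for k, v in env.items()))
```

Because every pool receives the same settings and pulls the same configuration from one control plane, policy stays consistent regardless of which cloud serves the request.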

Verdict: Apigee for GCP loyalists. Kong for multi-cloud and hybrid cloud strategies.

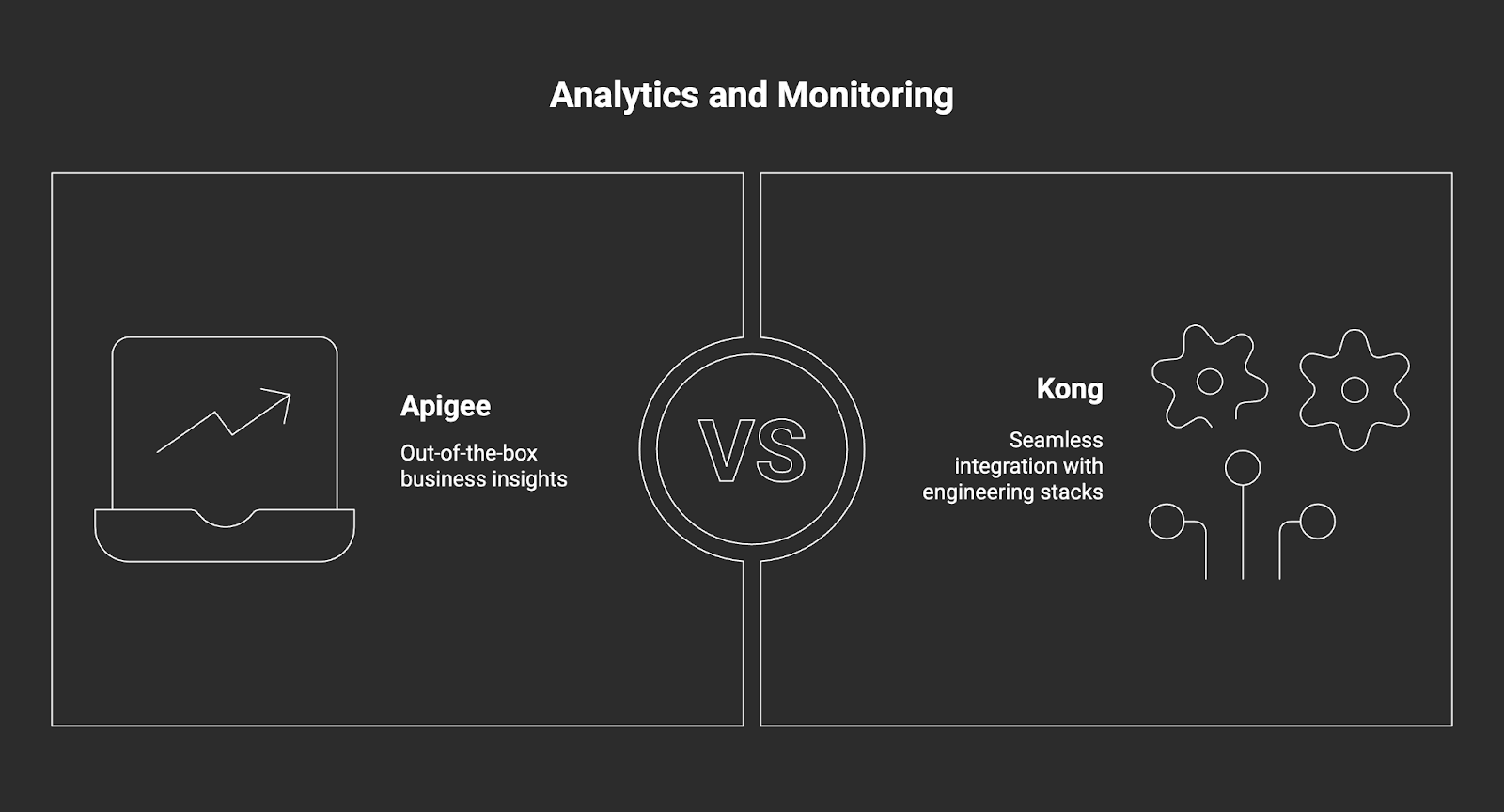

7. Analytics & monitoring

Visibility needs vary from high-level business dashboards to granular, real-time operational metrics for debugging.

Apigee: The business dashboard

Apigee possesses one of the best integrated analytics engines on the market. Its dashboards provide granular visibility into traffic spikes, error rates, and developer usage without requiring external tools.

- Insights: The specialized "API Monitoring" features allow operations teams to pinpoint exactly where latency is introduced. Furthermore, it translates data into business metrics: "Which partner is driving the most revenue?" or "Which API product has the highest churn?" These are questions a Product Manager can answer directly in Apigee.

Kong: The observability exporter

Kong relies on observability exports. While Kong Konnect has decent dashboards, the core philosophy is to push metrics to where you already look: Grafana, Datadog, or New Relic.

- Insights: Kong generates rich metrics, but you are often responsible for visualizing them. This is an advantage for engineering teams that want a "Single Pane of Glass" for all infrastructure (APIs, databases, servers) in one Grafana dashboard. However, it is a disadvantage for business users who want a pre-built "API Business Report" without asking an engineer to query Prometheus.
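Building that "API Business Report" yourself is mostly aggregation over exported counters. The sketch below parses a few lines of Prometheus text exposition and totals requests and 5xx responses per consumer. The metric and label names are modeled on the request counter exposed by recent versions of Kong's Prometheus plugin (`kong_http_requests_total` with `service`, `code`, and `consumer` labels). Treat them as an assumption to check against your Kong version. The sample values are invented.

```python
import re
from collections import defaultdict
from typing import Dict

SAMPLE = """\
# TYPE kong_http_requests_total counter
kong_http_requests_total{service="checkout",route="checkout-api",code="200",source="service",consumer="acme"} 9500
kong_http_requests_total{service="checkout",route="checkout-api",code="503",source="service",consumer="acme"} 500
kong_http_requests_total{service="catalog",route="catalog-api",code="200",source="service",consumer="globex"} 4000
"""

# Simplified parser: no escaped quotes in label values, no timestamps.
LINE = re.compile(r'^(?P<name>[a-zA-Z_:][\w:]*)(?:\{(?P<labels>[^}]*)\})?\s+(?P<value>\S+)')
LABEL = re.compile(r'(\w+)="([^"]*)"')


def per_consumer_report(text: str, metric: str = "kong_http_requests_total") -> Dict[str, dict]:
    """Sum requests and 5xx responses per consumer from one scrape.

    Counters are cumulative since process start; a real report would use
    deltas over a time window (for example PromQL's increase()).
    """
    totals: Dict[str, dict] = defaultdict(lambda: {"requests": 0.0, "errors_5xx": 0.0})
    for line in text.splitlines():
        if not line or line.startswith("#"):
            continue
        m = LINE.match(line)
        if m is None or m["name"] != metric:
            continue
        labels = dict(LABEL.findall(m["labels"] or ""))
        value = float(m["value"])
        row = totals[labels.get("consumer") or "(anonymous)"]
        row["requests"] += value
        if labels.get("code", "").startswith("5"):
            row["errors_5xx"] += value
    return dict(totals)


for consumer, row in per_consumer_report(SAMPLE).items():
    print(f"{consumer}: {row['requests']:.0f} requests, {row['errors_5xx'] / row['requests']:.1%} 5xx")
```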

Verdict: Apigee for out-of-the-box business insights. Kong for seamless integration with existing engineering observability stacks.

8. Pricing and licensing

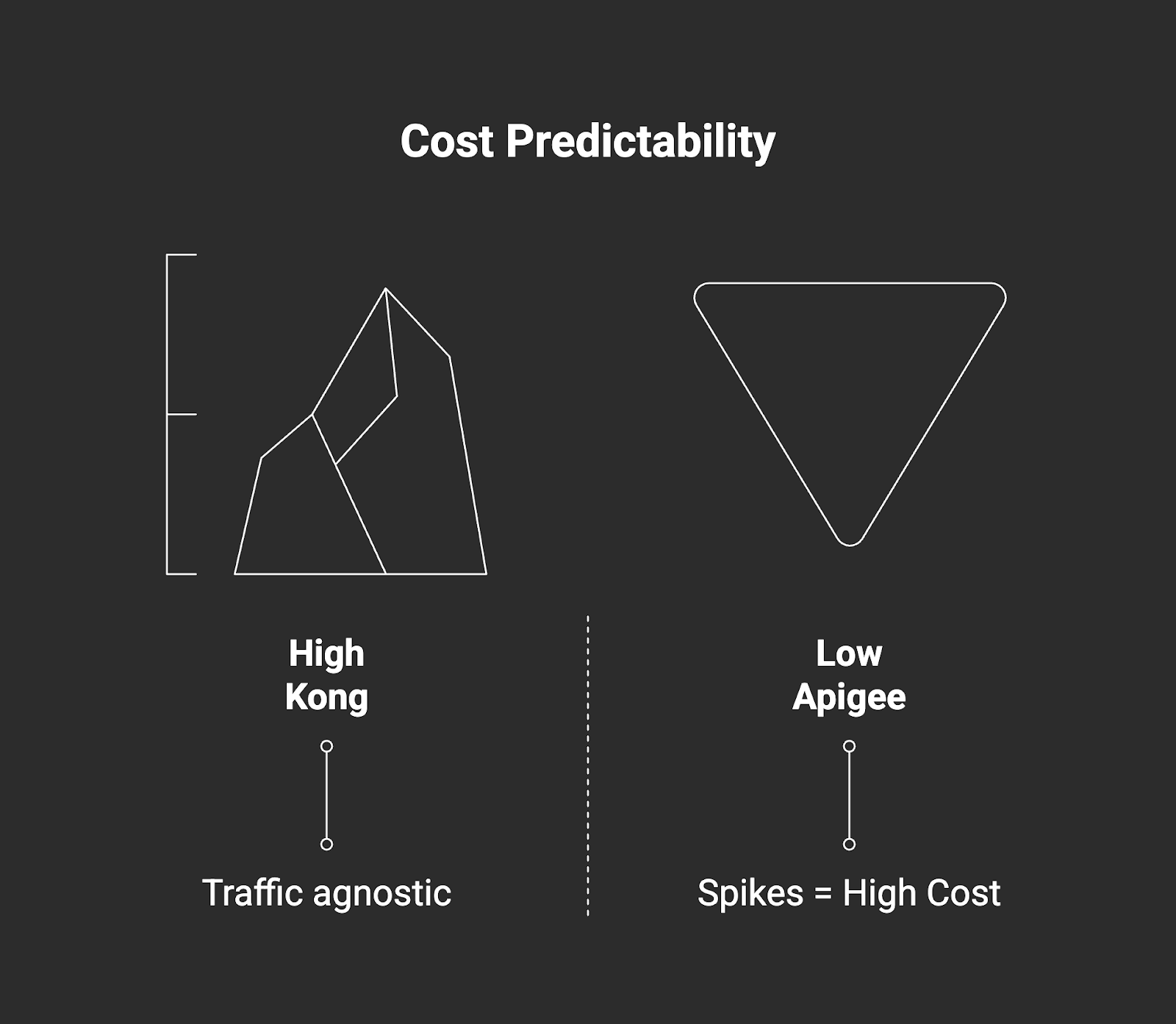

Cost predictability is a major factor, with models ranging from consumption-based subscriptions to node-based licensing.

Apigee: The premium subscription

Apigee is generally associated with a high-cost, enterprise pricing model. Pricing is often subscription-based, tiered by API call volume and environment units.

- Cost implication: For high-volume use cases, the costs can escalate quickly. While a "Pay-as-you-go" model exists, enterprise features are often gated behind significant commit contracts. It is an OpEx-heavy investment suited for organizations where API monetization generates revenue to offset the tool's cost.

Kong: The scalable open core

Kong operates on an Open Core model. The community version is free and fully functional for basic needs. The Enterprise version (and Konnect SaaS) typically charges based on "Services" or "Nodes," rather than strictly by API call volume.

- Cost Implication: This creates a more predictable cost structure for high-traffic applications. A social media startup serving billions of requests but only using 10 microservices will find Kong significantly cheaper than Apigee. You are not penalized for success (more traffic) but rather charged for complexity (more services).
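The difference between the two pricing shapes is easiest to see as two cost functions: one grows with call volume, the other with the number of services. The sketch below uses deliberately round, hypothetical unit prices that are not vendor list prices. Real contracts include tiers, minimum commits, and add-ons that this model ignores.

```python
def volume_priced_cost(monthly_calls: int, price_per_million: float) -> float:
    """Monthly cost that scales with traffic (call-volume pricing)."""
    return monthly_calls / 1_000_000 * price_per_million


def service_priced_cost(services: int, price_per_service: float) -> float:
    """Monthly cost that scales with the number of managed services, not traffic."""
    return services * price_per_service


# Hypothetical startup: 10 services, traffic growing 10x at a time while services stay flat.
PRICE_PER_MILLION = 20.0   # placeholder, not a real list price
PRICE_PER_SERVICE = 500.0  # placeholder, not a real list price

for calls in (100_000_000, 1_000_000_000, 10_000_000_000):
    print(f"{calls:>14,} calls: volume-priced ${volume_priced_cost(calls, PRICE_PER_MILLION):>9,.0f}, "
          f"service-priced ${service_priced_cost(10, PRICE_PER_SERVICE):>6,.0f}")
```

Under these placeholder prices, the service-priced model costs more at low volume and far less at high volume. The break-even point, 250 million calls a month here, is the number worth recomputing with real quotes.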

Verdict: Kong is generally more cost-effective for high-volume, high-scale deployments. Apigee is a premium purchase for premium management features.

When to choose Apigee vs Kong?

Choose Apigee if:

- Governance is King: You are a large enterprise (Bank, Insurance, Gov) prioritizing governance, compliance, and auditability over raw speed.

- Google-centric: Your infrastructure is heavily centered on Google Cloud.

- Monetization: You need to sell your APIs and handle billing/invoicing out of the box.

- Legacy transformation: You need to expose legacy SOAP services as modern REST APIs to external partners with heavy transformation logic.

- Non-technical users: You have a non-technical team that needs to manage API products and portals via a UI.

Choose Kong if:

- Speed is King: You are building a microservices or Kubernetes-based architecture where latency is a KPI.

- Multi-Cloud: You require a multi-cloud or hybrid deployment without vendor lock-in.

- Engineering Culture: Your team prefers GitOps, Infrastructure-as-Code, and open-source tooling over "Click-Ops" UIs.

- High Volume: You have massive traffic volumes where Apigee’s call-volume pricing would be prohibitive.

- Innovation: You need to extend the gateway with custom code (plugins) to handle unique business logic.

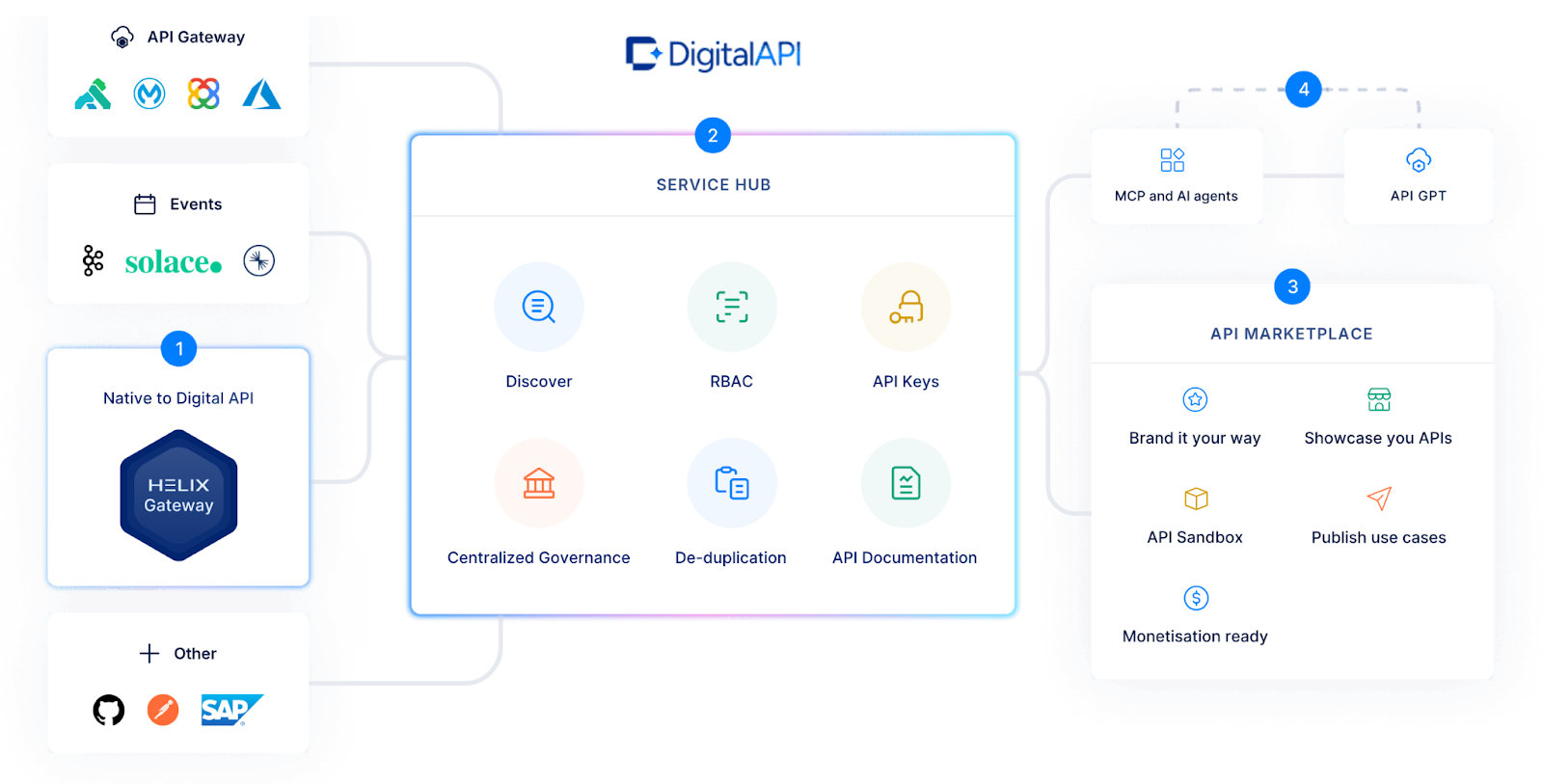

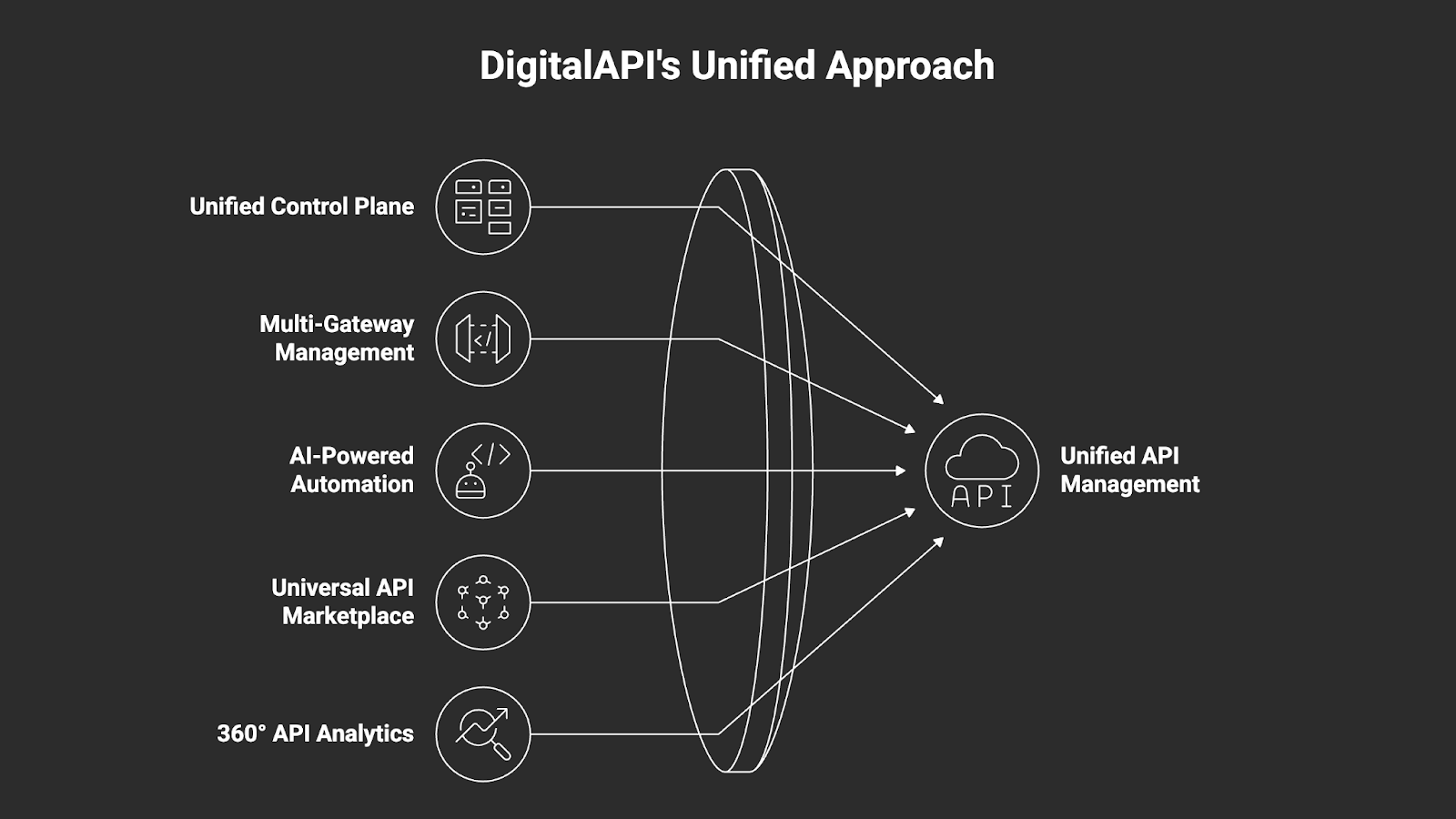

Why use DigitalAPI as an intelligence layer over Apigee and Kong?

You may have arrived here weighing Apigee against Kong, but most enterprises discover that relying on a single gateway creates new silos. DigitalAPI acts as the unified control plane that bridges this gap, allowing you to manage, govern, and scale APIs across any gateway or cloud.

- Unified Control Plane: Stop juggling multiple consoles. Manage your entire API ecosystem - whether on Apigee, Kong, AWS, or Azure - from a single, centralized dashboard. This breaks down silos and ensures consistent governance across all your environments.

- Multi-Gateway Management: Avoid vendor lock-in with true gateway agnosticism. DigitalAPI allows you to deploy, manage, and migrate APIs seamlessly across different gateways (like moving from Kong to Apigee or running them in parallel) without rewriting your governance policies.

- AI-Powered Automation: Accelerate your workflow with AI-driven tools that automate documentation, generate test cases, and detect security vulnerabilities. This reduces manual overhead and ensures your APIs are production-ready faster.

- Universal API Marketplace: Launch a branded, consumer-centric marketplace that aggregates APIs from all your gateways. DigitalAPI provides a unified catalog for internal and external developers to discover, subscribe to, and monetize APIs, regardless of where they are hosted.

- 360° API Analytics: Gain a unified view of performance, security, and consumption across your entire API estate. DigitalAPI aggregates metrics from every gateway, whether Apigee, Kong, or Helix, into a single dashboard, eliminating the blind spots typical of multi-gateway environments.

Frequently Asked Questions

1. How does vendor lock-in apply to Apigee vs Kong?

Apigee has high vendor lock-in. Moving off Apigee often requires rewriting policy logic (which is stored in proprietary XML bundles) and extracting data from Google’s SaaS. It is a "stickier" platform.

Kong offers lower lock-in due to its open-source core. Configuration is often platform-agnostic (YAML), making it easier to migrate the data plane. However, heavy reliance on proprietary Kong Enterprise plugins can create "soft" lock-in.

2. Which platform is better for large enterprises?

Historically, Apigee has been the default for large enterprises due to its focus on "Business APIs" (Monetization, Partner Portals) and strict governance. However, Kong is increasingly being chosen by large enterprises (like major banks and retailers) specifically for their internal microservices and modernization efforts. Many large enterprises actually use both: Apigee for the external "front door" and Kong for the internal microservices mesh.

3. Is Kong a true alternative to the full Apigee API Management platform?

Yes, but with a caveat. With the introduction of Kong Konnect, Kong now offers the full suite: Developer Portal, Analytics, and Service Hub. It has closed the gap significantly. However, Apigee still holds an edge in complex, out-of-the-box monetization and billing features for B2B data sales.

4. How do the deployment models (SaaS vs. self-managed) compare?

Apigee X is primarily a managed SaaS control plane with a peered managed runtime. You do not manage the servers, but you also have less control over the network topology.

Kong offers true "Run Anywhere" flexibility. You can run the control plane and data plane entirely self-managed (on-prem, air-gapped), fully SaaS (Konnect), or in a Hybrid mode. This flexibility is vital for industries with strict data residency requirements.

5. Which platform offers better native support for Kubernetes and microservices?

Kong is the clear winner here. It allows for an Ingress Controller deployment pattern where the gateway sits natively inside the K8s cluster, managed via CRDs (Custom Resource Definitions). It feels like a native Kubernetes object. Apigee offers "Apigee Hybrid" for K8s, but it is architecturally heavier and more complex to operate and maintain than Kong's lightweight approach.

