
Mastering the API Gateway Pattern in a Microservices Architecture: Design, Trade-offs & Best Practices


In a microservices architecture, every client request becomes a small orchestration challenge. Mobile apps, web apps, and partner systems often need data from multiple services, each with its own API, version, protocol, and deployment cycle. Exposing these services directly creates fragile client code, excessive round-trips, inconsistent security, and a constantly shifting integration surface.

This is where the API Gateway pattern becomes essential. Instead of clients talking to dozens of services, the gateway offers a single, stable entry point that handles routing, aggregation, authentication, and protocol translation. Done right, it becomes the backbone of microservices communication; done poorly, it becomes a bottleneck. In this post, we break down the pattern, its benefits and pitfalls, and how to implement it effectively.

Microservices architecture & the challenge of edge communication

Microservices architecture breaks an application into small, autonomous services, each owning a single business capability and deployed independently. This decomposition allows teams to move faster, scale specific workloads, and evolve functionality without impacting the entire system. As organizations grow, this modular approach becomes a critical enabler of continuous delivery and distributed innovation.

But modularity also brings complexity. Each service has its own API surface, version lifecycle, data contract, and runtime environment. A single user action, like placing an order or loading a dashboard, may require orchestrating calls across many services. Without a clean pattern at the edge, clients must manage this growing web of dependencies themselves.

Key edge-communication challenges include:

- Too many endpoints to manage: Clients must know how to call multiple services individually.

- Chatty networks & performance issues: A single UI screen may trigger dozens of calls.

- Inconsistent security boundaries: Exposing many services increases the attack surface.

- Tight client–service coupling: Internal changes, such as splitting or renaming a service, can break client integrations.

- Protocol and payload mismatches: Clients expect REST/JSON; services may use gRPC, events, XML, or custom formats.

- Versioning chaos: Frontends must adjust every time a backend service evolves.

These challenges set the stage for the API Gateway pattern, which simplifies and stabilises the communication between clients and a distributed microservices ecosystem.

What is the API Gateway pattern?

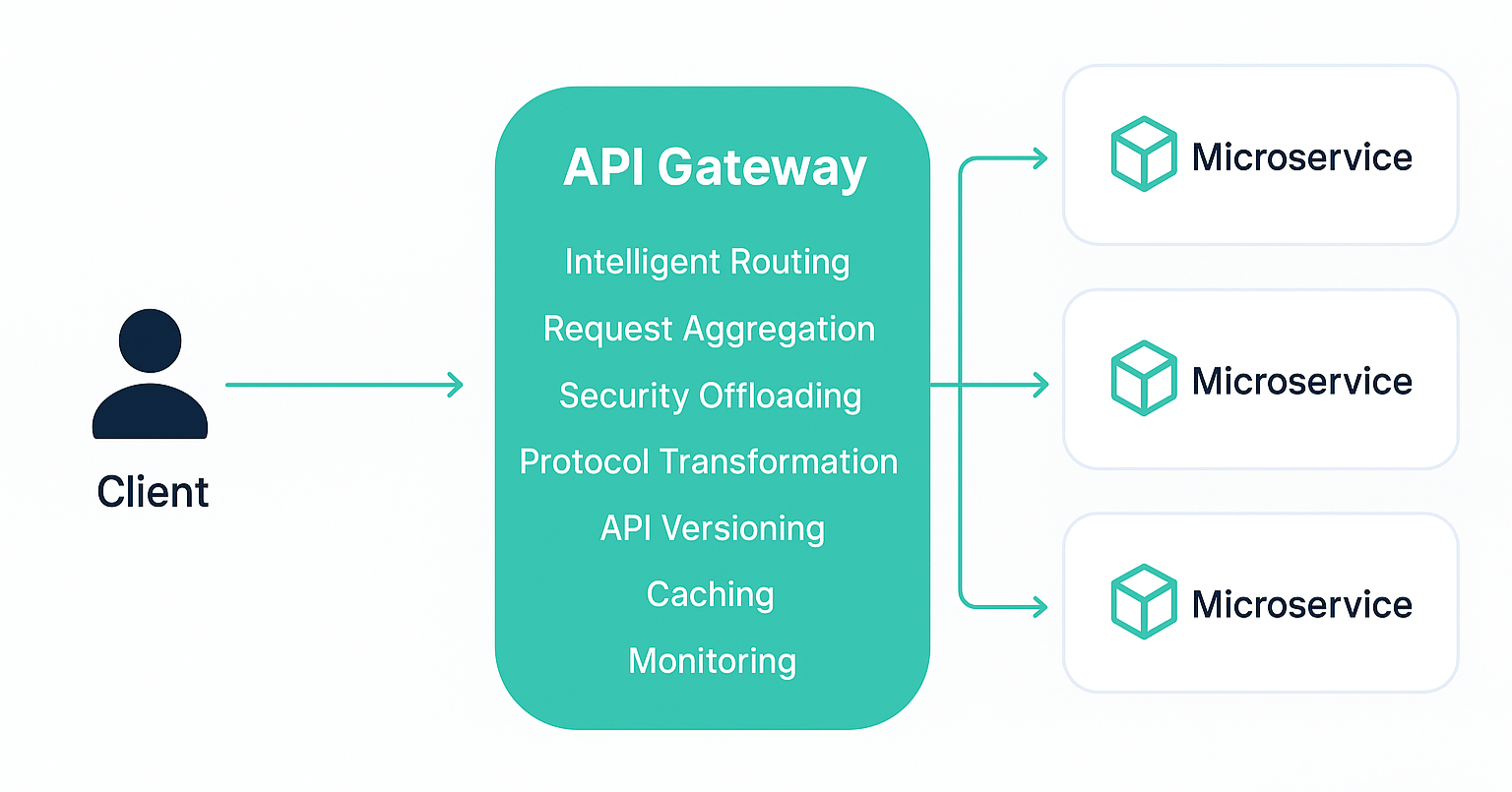

The API Gateway pattern is a foundational design approach in microservices where a single, unified entry point sits between client applications and the many backend services they need to interact with. Instead of allowing clients to call each microservice directly, the gateway acts as a central facade that routes requests, aggregates responses, enforces security, and translates protocols when required. Abstracting the complexity of internal services gives clients a clean, consistent, and stable interface, even as the underlying system evolves rapidly.

An API gateway also becomes the home for essential cross-cutting concerns. Authentication, authorization, rate limiting, caching, request validation, and traffic shaping can all be centralised here instead of being duplicated across individual services.

This reduces operational overhead and ensures uniform governance across the ecosystem. Additionally, gateways can combine data from multiple microservices into a single response, improving user experience by reducing round-trips and latency.

Ultimately, the API Gateway pattern offers clarity at the edge of a distributed system. It simplifies client communication, enhances security, and provides a flexible evolution path as teams independently modify or scale their services.


Why and when should you use an API Gateway?

The API Gateway becomes essential when your microservices ecosystem reaches a point where clients cannot reliably or efficiently communicate with dozens of backend services. It provides a structured, secure, and scalable way to manage this complexity, ensuring that client applications stay simple even as the backend grows. Think of it as the “front door” to your distributed system, one that standardises how everything outside interacts with everything inside.

1. Unifying a fragmented service landscape

As microservices multiply, clients must otherwise track dozens of URLs, payloads, versions, and protocols. An API gateway hides this complexity behind a single, stable endpoint. It ensures that internal service reorganisation or refactoring doesn’t break client apps, allowing teams to evolve services independently without coordination overhead.
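
As a rough illustration of that single entry point, the Python sketch below resolves public paths to internal services using longest-prefix matching. The route table, service names, and ports are hypothetical, and a real gateway would typically populate its routes from service discovery rather than hardcoding them.

```python
from typing import Dict, Optional

# Hypothetical route table: public path prefix -> internal service base URL.
ROUTES: Dict[str, str] = {
    "/api/orders": "http://orders-svc:8080",
    "/api/users": "http://users-svc:8080",
    "/api/users/preferences": "http://prefs-svc:8080",
}


def resolve(path: str) -> Optional[str]:
    """Map a public path to an internal URL using longest-prefix matching."""
    best: Optional[str] = None
    for prefix in ROUTES:
        # Match only on whole path segments, so "/api/usersx" does not hit "/api/users".
        if path == prefix or path.startswith(prefix + "/"):
            if best is None or len(prefix) > len(best):
                best = prefix
    if best is None:
        return None  # no route: the gateway would return 404
    # Forward the remainder of the path to the chosen service.
    return ROUTES[best] + path[len(best):]


if __name__ == "__main__":
    print(resolve("/api/orders/42"))                # http://orders-svc:8080/42
    print(resolve("/api/users/preferences/theme"))  # http://prefs-svc:8080/theme
```

Clients only ever see `/api/...`; if the preferences feature later moves to another service, only the route table changes.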

2. Reducing client-side round-trips and latency

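Modern UIs, especially mobile apps, often need data from several microservices to render a single screen. Without a gateway, this results in multiple network requests, more bandwidth usage, and slower load times. The gateway aggregates responses server-side, where service-to-service calls usually travel over a faster, lower-latency network than the client's connection, and returns a single, optimised payload. This can improve perceived performance significantly, especially on mobile networks.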
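
A minimal sketch of server-side aggregation, assuming Python's `asyncio` and three hypothetical backend services simulated with `asyncio.sleep`: the gateway fans out concurrently and merges the results, so the client makes one request instead of three.

```python
import asyncio


# Stand-ins for real HTTP/gRPC calls to hypothetical backend services.
async def fetch_profile(user_id: str) -> dict:
    await asyncio.sleep(0.05)  # simulated network latency
    return {"id": user_id, "name": "Ada"}


async def fetch_orders(user_id: str) -> list:
    await asyncio.sleep(0.05)
    return [{"order_id": "o-1", "total": 42.0}]


async def fetch_recommendations(user_id: str) -> list:
    await asyncio.sleep(0.05)
    return ["sku-9", "sku-3"]


async def dashboard(user_id: str) -> dict:
    """Fan out to three services concurrently and merge the results into one payload."""
    profile, orders, recs = await asyncio.gather(
        fetch_profile(user_id),
        fetch_orders(user_id),
        fetch_recommendations(user_id),
    )
    return {"profile": profile, "recentOrders": orders, "recommendations": recs}


if __name__ == "__main__":
    print(asyncio.run(dashboard("u-123")))
```

Because the calls run concurrently, the composed response takes roughly as long as the slowest call rather than the sum of all three. A production aggregator would also need per-call timeouts and a policy for partial failures, for example `asyncio.gather(..., return_exceptions=True)` followed by returning only the sections that succeeded.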

3. Centralising authentication, authorization, and governance

Instead of every microservice validating tokens, enforcing roles, or applying rate limits, the gateway handles these concerns uniformly. This reduces duplicated logic, ensures tighter governance, and prevents exposing internal services directly to the internet, shrinking the security attack surface.
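
To illustrate validating tokens once at the edge, here is a sketch that verifies an HS256-signed JWT using only the Python standard library. It assumes a shared secret and checks only the algorithm, signature, and `exp` claim; `sign_hs256` exists purely so the demo can mint a token. In practice you would normally rely on a vetted JWT library and, often, asymmetric keys published by your identity provider.

```python
import base64
import hashlib
import hmac
import json
import time


def _b64url_decode(segment: str) -> bytes:
    # JWT segments are unpadded base64url; restore padding before decoding.
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def _b64url_encode(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode()


def sign_hs256(claims: dict, secret: bytes) -> str:
    """Demo helper only: issue an HS256 token (normally the identity provider's job)."""
    header = _b64url_encode(json.dumps({"alg": "HS256", "typ": "JWT"}).encode())
    payload = _b64url_encode(json.dumps(claims).encode())
    sig = hmac.new(secret, f"{header}.{payload}".encode(), hashlib.sha256).digest()
    return f"{header}.{payload}.{_b64url_encode(sig)}"


def verify_hs256(token: str, secret: bytes) -> dict:
    """Check algorithm, signature, and expiry at the gateway; raise ValueError on failure."""
    try:
        header_b64, payload_b64, sig_b64 = token.split(".")
    except ValueError:
        raise ValueError("malformed token") from None
    header = json.loads(_b64url_decode(header_b64))
    if header.get("alg") != "HS256":
        raise ValueError("unexpected algorithm")  # never accept alg=none or surprises
    expected = hmac.new(secret, f"{header_b64}.{payload_b64}".encode(), hashlib.sha256).digest()
    # Constant-time comparison avoids leaking signature bytes through timing.
    if not hmac.compare_digest(expected, _b64url_decode(sig_b64)):
        raise ValueError("bad signature")
    claims = json.loads(_b64url_decode(payload_b64))
    if "exp" in claims and claims["exp"] < time.time():
        raise ValueError("token expired")
    return claims


if __name__ == "__main__":
    secret = b"demo-only-secret"  # hypothetical; load real keys from a secret store
    token = sign_hs256({"sub": "u-123", "exp": time.time() + 300}, secret)
    print(verify_hs256(token, secret)["sub"])  # u-123
```

Once the gateway has verified the token, it can forward trusted identity claims (such as `sub` or roles) to downstream services, which then do not need to repeat the cryptographic check.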

4. Handling protocol and format translation

Clients typically speak REST/JSON, but backend services may use gRPC, GraphQL, SOAP, events, or binary protocols. The gateway translates between client-friendly formats and backend formats seamlessly. This decouples client technology choices from backend evolution and enables mixed architectural styles.
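
Bridging REST and gRPC requires generated stubs, so the sketch below shows a simpler case of the same idea: converting a hypothetical legacy XML response into the JSON shape clients expect, using Python's built-in `xml.etree.ElementTree` and `json` modules. The element and field names are assumptions for illustration.

```python
import json
import xml.etree.ElementTree as ET

# Hypothetical response from a legacy backend that only speaks XML.
LEGACY_XML = """
<customer id="c-42">
  <name>Ada Lovelace</name>
  <tier>gold</tier>
  <balance currency="GBP">120.50</balance>
</customer>
"""


def customer_xml_to_json(xml_text: str) -> str:
    """Translate the legacy XML shape into the JSON contract clients expect."""
    root = ET.fromstring(xml_text.strip())
    balance = root.find("balance")
    doc = {
        "id": root.get("id"),
        "name": root.findtext("name"),
        "tier": root.findtext("tier"),
        "balance": {
            "amount": float(balance.text),
            "currency": balance.get("currency"),
        },
    }
    return json.dumps(doc)


if __name__ == "__main__":
    print(customer_xml_to_json(LEGACY_XML))
```

If the backend team later replaces XML with another format, only this mapping changes; clients keep receiving the same JSON.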

5. Managing API versioning and backward compatibility

As services evolve, breaking changes are inevitable. Instead of forcing every client to update instantly, the gateway provides versioned endpoints and backward-compatible facades. This lets teams modernise microservices internally while still supporting older clients in production.
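
One way to picture a backward-compatible facade, assuming path-based versioning and a hypothetical user service whose v2 contract split `name` into parts: the gateway serves `/v2` responses as-is and reshapes them into the older v1 format for clients that have not upgraded.

```python
from typing import Callable, Dict


def fetch_user_v2(user_id: str) -> dict:
    """Stand-in for the current (v2) user service, which splits the name field."""
    return {
        "id": user_id,
        "name": {"given": "Ada", "family": "Lovelace"},
        "email": "ada@example.com",
    }


def v1_facade(user_id: str) -> dict:
    """Backward-compatible v1 shape: older clients still expect a flat fullName."""
    user = fetch_user_v2(user_id)
    return {
        "id": user["id"],
        "fullName": f'{user["name"]["given"]} {user["name"]["family"]}',
        "email": user["email"],
    }


# Version segment from the public path (/v1/users/{id}, /v2/users/{id}) -> handler.
HANDLERS: Dict[str, Callable[[str], dict]] = {"v1": v1_facade, "v2": fetch_user_v2}


def get_user(version: str, user_id: str) -> dict:
    handler = HANDLERS.get(version)
    if handler is None:
        raise LookupError(f"unsupported API version: {version}")
    return handler(user_id)


if __name__ == "__main__":
    print(get_user("v1", "u-1"))
    print(get_user("v2", "u-1"))
```

Once v1 traffic drops to zero in the gateway's metrics, the facade can be retired without touching the backend service.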

6. Enhancing observability and traffic control

By funnelling all requests through one layer, you gain a single vantage point on traffic patterns, errors, latency, and user behaviour. The gateway can throttle abusive clients, shape traffic during peak loads, and route requests intelligently. This operational insight is invaluable for scaling and reliability.
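
Throttling is commonly implemented with a token bucket. This Python sketch keeps one bucket per client ID in memory; the capacity and refill rate are arbitrary example values, and the injectable `clock` parameter exists only to make the behaviour easy to test.

```python
import time
from typing import Callable, Dict


class TokenBucket:
    """Allows bursts up to `capacity` requests, refilling at `rate` tokens per second."""

    def __init__(self, capacity: float, rate: float, clock: Callable[[], float] = time.monotonic):
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        self.tokens = capacity
        self.updated = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


buckets: Dict[str, TokenBucket] = {}


def admit(client_id: str) -> bool:
    """One bucket per API key; False means the gateway should answer HTTP 429."""
    bucket = buckets.get(client_id)
    if bucket is None:
        bucket = buckets[client_id] = TokenBucket(capacity=5, rate=1.0)
    return bucket.allow()
```

An in-memory bucket only limits traffic per gateway instance; a horizontally scaled cluster would typically keep counters in a shared store so that limits hold across replicas.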

7. Serving multiple client types gracefully (BFF pattern)

Different clients (mobile, web, partner APIs, IoT) often need different data shapes and performance characteristics. The gateway can expose tailored endpoints per client type using the Backend for Frontend (BFF) pattern. This keeps each client's payloads lean and avoids forcing all clients to fit a single, generic API design.
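
A small sketch of the BFF idea, assuming one hypothetical product record from a catalogue service: each client type gets its own projection of the same upstream data. Real BFFs are often separate deployments owned by the respective frontend teams; here they are collapsed into two functions for brevity.

```python
from typing import Callable, Dict

# Hypothetical record returned by an internal catalogue service.
PRODUCT = {
    "id": "sku-9",
    "title": "Trail Running Shoe",
    "description": "Long-form marketing copy for the product page.",
    "price": {"amount": 89.99, "currency": "EUR"},
    "images": ["thumb.jpg", "hero.jpg", "zoom-1.jpg", "zoom-2.jpg"],
    "reviews": [{"stars": 5, "text": "Great grip on wet trails"}],
}


def mobile_view(p: dict) -> dict:
    """Mobile BFF: compact payload with a single thumbnail and no reviews."""
    return {"id": p["id"], "title": p["title"], "price": p["price"], "thumbnail": p["images"][0]}


def web_view(p: dict) -> dict:
    """Web BFF: richer payload for large screens, including the gallery and reviews."""
    return {
        "id": p["id"],
        "title": p["title"],
        "price": p["price"],
        "description": p["description"],
        "gallery": p["images"],
        "reviews": p["reviews"],
    }


VIEWS: Dict[str, Callable[[dict], dict]] = {"mobile": mobile_view, "web": web_view}


def product_for(client_type: str) -> dict:
    """Pick the projection based on which BFF endpoint the request arrived on."""
    return VIEWS[client_type](PRODUCT)
```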

Core patterns & capabilities inside the gateway

The API gateway pattern isn’t a single behaviour; it’s a collection of architectural capabilities that work together to simplify how clients communicate with a distributed microservices ecosystem. These capabilities help enforce consistency, reduce complexity, and ensure smooth, secure interaction between the outside world and internal services. Below are the core patterns traditionally implemented within an API gateway.

- Intelligent routing & reverse proxying: At its core, a gateway directs incoming requests to the right microservice based on path, headers, version, or other rules. This decouples clients from internal service locations, ports, and network topology. When the gateway is integrated with service discovery, routes update automatically as services scale or move.

- Request aggregation & composition: Many client actions require data from multiple services. Gateways provide an aggregation layer that calls several microservices, stitches responses, and returns a single, optimised payload. This reduces client-side round-trips and improves the overall performance of applications, especially mobile apps.

- Security offloading & policy enforcement: Instead of repeated logic across services, the gateway centralises token validation, authentication, authorization, rate-limiting, and request validation. This ensures consistent governance while preventing direct exposure of internal microservices to the public internet.

- Protocol & data transformation: Gateways act as translators. They bridge REST and gRPC, convert XML to JSON, or map internal event-driven contracts into client-friendly formats. This allows backend teams to use the best-fit protocol without forcing changes on every client consuming the system.

- API versioning & compatibility management: As services evolve, old and new versions must coexist. Gateways provide versioned endpoints, deprecation workflows, header-based routing, and backward-compatible facades, ensuring smooth evolution without breaking existing consumers.

- Caching & performance optimisation: Gateways often include content caching, response compression, and static response handling to reduce backend load. This layer can dramatically lower latency for frequently accessed resources and reduce redundant service calls.

- Observability, monitoring & traffic shaping: Because all API traffic flows through the gateway, it becomes a powerful observability point. Gateways collect metrics, logs, traces, and performance insights, helping operators detect anomalies early. They can throttle abusive clients, shape traffic during spikes, and apply intelligent routing for reliability.

- Request/Response validation & sanitisation: By validating payloads, schemas, authentication tokens, and input parameters before they reach microservices, gateways shield backend systems from malformed or malicious requests. This reduces wasted backend work and lowers the risk of bad input triggering failures deeper in the system.
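
As an illustration of edge validation, the sketch below checks a hypothetical `POST /api/orders` body against a tiny hand-written schema before anything is forwarded. Real gateways usually derive these rules from the OpenAPI or JSON Schema contract instead of hand-coding them; the field names and the item limit here are assumptions.

```python
from typing import Any, Dict, List

# Hypothetical schema for POST /api/orders: field name -> (expected type, required?).
ORDER_SCHEMA = {
    "customer_id": (str, True),
    "items": (list, True),
    "coupon": (str, False),
}
MAX_ITEMS = 100


def validate_order(body: Dict[str, Any]) -> List[str]:
    """Return a list of problems; an empty list means the request may be forwarded."""
    errors = []
    for field, (expected, required) in ORDER_SCHEMA.items():
        if field not in body:
            if required:
                errors.append(f"missing field: {field}")
            continue
        if not isinstance(body[field], expected):
            errors.append(f"{field}: expected {expected.__name__}")
    # Reject unexpected fields rather than silently passing them downstream.
    unknown = set(body) - set(ORDER_SCHEMA)
    if unknown:
        errors.append(f"unknown fields: {sorted(unknown)}")
    if isinstance(body.get("items"), list) and not 0 < len(body["items"]) <= MAX_ITEMS:
        errors.append(f"items: must contain 1..{MAX_ITEMS} entries")
    return errors
```

A non-empty error list would typically become an HTTP 400 response at the gateway, so the orders service never sees the malformed request.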

Trade-offs and how to mitigate API Gateway Pattern risks

While the API Gateway pattern simplifies client communication and strengthens governance, it also introduces its own set of architectural risks. These trade-offs don’t undermine the value of the gateway, but they do require careful design choices to avoid bottlenecks, failures, or operational overhead. By understanding these risks upfront, teams can implement the gateway confidently and sustainably.

1. Single point of failure

Risk: If the gateway goes down, all client traffic is blocked.

Mitigation: Deploy in active-active clusters, enable auto-scaling, and use health checks and failover mechanisms.
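
To show the failover idea in miniature, here is a Python sketch of round-robin selection across gateway replicas that skips any replica failing its health check. The replica names and the `is_healthy` callback are hypothetical; in most deployments this job is done by a cloud load balancer or a Kubernetes Service with readiness probes.

```python
import itertools
from typing import Callable, List


class HealthyRoundRobin:
    """Rotate across gateway replicas, skipping any that fail their health check."""

    def __init__(self, replicas: List[str], is_healthy: Callable[[str], bool]):
        self.replicas = replicas
        self.is_healthy = is_healthy
        self._cycle = itertools.cycle(replicas)

    def pick(self) -> str:
        # Try each replica at most once per call so we never loop forever.
        for _ in range(len(self.replicas)):
            candidate = next(self._cycle)
            if self.is_healthy(candidate):
                return candidate
        raise RuntimeError("no healthy gateway replicas")


if __name__ == "__main__":
    down = {"gw-b"}  # pretend gw-b failed its last health check
    lb = HealthyRoundRobin(["gw-a", "gw-b", "gw-c"], lambda r: r not in down)
    print([lb.pick() for _ in range(4)])  # ['gw-a', 'gw-c', 'gw-a', 'gw-c']
```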

2. Performance bottleneck

Risk: The gateway becomes a choke point as traffic increases.

Mitigation: Use non-blocking I/O, enable caching, implement horizontal scaling, and offload heavy transformations.

3. Increased latency

Risk: The extra network hop between client and backend services adds delay.

Mitigation: Keep gateway logic thin, cache frequent responses, and use asynchronous communication where possible.
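
A minimal sketch of response caching at the gateway, assuming a fixed time-to-live and caching only `GET` requests. A production cache would also honour `Cache-Control` headers and include relevant request headers (such as the caller's identity) in the cache key, which this sketch omits.

```python
import time
from typing import Any, Callable, Dict, Tuple


class TTLCache:
    """Cache upstream responses for `ttl` seconds, keyed by method and path."""

    def __init__(self, ttl: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl
        self.clock = clock
        self._store: Dict[Tuple[str, str], Tuple[float, Any]] = {}

    def get_or_fetch(self, method: str, path: str, fetch: Callable[[], Any]) -> Any:
        key = (method, path)
        now = self.clock()
        hit = self._store.get(key)
        if hit is not None and hit[0] > now:
            return hit[1]  # fresh entry: skip the backend call entirely
        value = fetch()
        # Only idempotent reads are safe to cache.
        if method == "GET":
            self._store[key] = (now + self.ttl, value)
        return value
```

Within the TTL window, repeated reads of a popular resource cost one backend call instead of many, which trims both latency and upstream load.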

4. The gateway becoming a “mini-monolith”

Risk: Too much business logic accumulates inside the gateway.

Mitigation: Restrict gateway responsibilities to routing, security, and lightweight transformations; avoid domain logic entirely.

5. Operational & maintenance overhead

Risk: More components to configure, monitor, upgrade, and secure.

Mitigation: Use managed API gateway services or automate configuration with GitOps-style workflows.

6. Versioning & configuration complexity

Risk: Multiple client-specific endpoints or BFFs become difficult to manage.

Mitigation: Use templated routing rules, centralised API contracts, and consistent versioning policies.

7. Vendor lock-in concerns

Risk: Relying on proprietary gateway features may hinder portability.

Mitigation: Prefer open standards (OpenAPI, OAuth2, gRPC), avoid platform-specific extensions, and keep any gateway-specific configuration behind an abstraction layer so it can be ported.

Best practices & checklist for architects

The API Gateway pattern is powerful, but its long-term success depends on thoughtful architectural decisions. A well-designed gateway creates clarity, consistency, and resilience at the system edge, while a poorly designed one becomes a bottleneck or mini-monolith. The following best practices help architects implement a gateway that stays scalable, predictable, and easy to evolve as microservices grow.

Architect’s Checklist

- Keep the gateway thin: Avoid business logic; restrict it to routing, security, and lightweight transformations.

- Adopt clear API contracts: Use OpenAPI/Swagger consistently and enforce schema governance.

- Define a strong versioning strategy: Support backward compatibility and deprecate versions gradually.

- Use service discovery, not hardcoded routes: Integrate with Consul, Eureka, Kubernetes, or similar registries.

- Enable uniform authentication and authorization: Centralise token validation, RBAC, rate limits, and access policies.

- Ensure horizontal scalability: Use load balancing, auto-scaling, and stateless gateway architectures.

- Add caching where it adds value: Cache frequently accessed resources to reduce backend load and latency.

- Instrument observability from day one: Collect logs, metrics, traces, and build dashboards for traffic insights.

- Apply traffic shaping and resilience patterns: Throttling, timeouts, circuit breakers, retries, and quota management.

- Align endpoints to client needs (BFF approach): Keep mobile, web, and partner APIs separated and optimized.

- Automate configuration & deployments: Use GitOps, CI/CD pipelines, and environment-specific configs.

- Regularly review gateway responsibilities: Prevent “scope creep” that turns the gateway into a central bottleneck.
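
Of the resilience patterns in the checklist, the circuit breaker is the least obvious, so here is a hedged Python sketch: after a configurable number of consecutive upstream failures the gateway fails fast, then lets a probe request through once a cool-down has passed. The thresholds are example values, and the sketch ignores concurrency.

```python
import time
from typing import Any, Callable, Optional


class CircuitBreaker:
    """Fail fast after `threshold` consecutive errors; probe again after `reset_after` seconds."""

    def __init__(self, threshold: int = 3, reset_after: float = 10.0,
                 clock: Callable[[], float] = time.monotonic):
        self.threshold = threshold
        self.reset_after = reset_after
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None  # None means the circuit is closed

    def call(self, fn: Callable[[], Any]) -> Any:
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                raise RuntimeError("circuit open: failing fast")
            # Cool-down elapsed (half-open): let this request through as a probe.
        try:
            result = fn()
        except Exception:
            self.failures += 1
            # A failed probe, or too many consecutive failures, (re)opens the circuit.
            if self.opened_at is not None or self.failures >= self.threshold:
                self.opened_at = self.clock()
            raise
        # Any success closes the circuit and resets the failure count.
        self.failures = 0
        self.opened_at = None
        return result
```

Failing fast while the circuit is open protects a struggling backend from a pile-up of retries and keeps gateway threads and connections free for healthy routes.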

Future trends & where the API Gateway Pattern in Microservices is heading

As modern systems evolve beyond traditional request–response models, the role of the API gateway is expanding from a simple routing layer to an intelligent, programmable control plane. Microservices, serverless workloads, event-driven systems, and AI-driven applications are pushing gateways to adapt rapidly. The pattern isn’t going away; instead, it’s being redefined to support richer protocols, smarter automation, and more autonomous ecosystems.

1. Rise of intelligent, policy-driven gateways

Gateways are moving from static configuration to dynamic, context-aware decision-making. Instead of relying only on predefined routing, they increasingly evaluate traffic in real time using telemetry, risk scores, or behavioural patterns. This shift brings adaptive rate limits, smarter threat detection, and automated failover. As enterprise systems scale, intelligent policy engines powered by analytics, or even AI, are likely to become the default.

2. Convergence with service meshes & edge architectures

Gateways and service meshes are no longer treated as separate components. The industry is moving toward unified “north–south + east–west” control planes where gateways handle external traffic and meshes govern internal communication, but with shared policies, shared observability, and shared identity. At the same time, edge gateways are gaining prominence to handle low-latency workloads, IoT data bursts, and regional regulatory requirements.

3. API gateways becoming AI-ready and multi-protocol

Beyond REST, gateways now routinely support GraphQL, gRPC, WebSockets, and event streams. The next wave is AI-native traffic: LLM calls, vector queries, agent workflows, and MCP/A2A protocols. Gateways will increasingly act as orchestrators for AI agent traffic, enforcing guardrails, validating inputs and outputs, and managing cost-intensive inference pipelines. This positions the gateway as a foundational layer for emerging agentic architectures.

Why Enterprises Choose DigitalAPI’s Helix Gateway for Microservices

DigitalAPI’s Helix Gateway gives enterprises a lightweight, high-performance control point built for modern microservices rather than legacy, monolithic gateway architectures. It provides ultra-fast, low-latency routing with native support for REST, gRPC, events, GraphQL, and emerging AI-driven protocols, making it ideal for multi-team environments. Helix automatically discovers services, maintains consistent policies, and enforces authentication, rate limits, and schema governance without adding overhead to individual services.

Designed for scale, it runs in clustered, multi-cloud setups and integrates deeply with Kubernetes for auto-syncing, rollouts, and resilience. With built-in observability and adaptive traffic control, Helix ensures microservices stay reliable under unpredictable load. For enterprises moving fast, it offers a future-ready gateway that stays thin, predictable, and easy to evolve.


FAQs

1. What is the difference between an API gateway and a service mesh?

An API gateway manages north–south traffic (external clients calling internal services), handling routing, auth, rate limits, and aggregation. A service mesh manages east–west traffic between microservices, providing retries, mTLS, load balancing, and observability. Gateways sit at the edge; meshes operate inside the cluster. The two complement each other, and together they form a complete communication and governance layer.

2. When should you not use an API gateway?

Avoid using an API gateway when your system is small, has only a few services, or your clients directly consume one stable API. Very early-stage architectures don’t benefit from the added operational overhead. If your services aren’t externally exposed or your traffic patterns are simple, a gateway may introduce unnecessary latency, configuration complexity, and maintenance work.

3. How many gateways should you have in a system?

Many mature systems use multiple gateways rather than one. A typical setup includes separate gateways for web, mobile, partner APIs, or high-security domains. This prevents a single, overly complex gateway from becoming a monolith. The right number depends on client types, latency needs, organisational structure, and domain boundaries, but each gateway should remain focused and lightweight.

4. Does an API gateway replace API management?

No, an API gateway is only one part of the broader API management lifecycle. While gateways handle runtime traffic control, API management includes documentation, developer onboarding, versioning workflows, monetisation, access governance, analytics, and lifecycle automation. Gateways operate at the execution layer; API management platforms provide the operational, product, and governance layers required for enterprise-scale exposure.

5. How do you avoid the gateway becoming a bottleneck?

Keep gateway logic thin and avoid embedding business rules. Use horizontal scaling, low-latency architectures, and non-blocking I/O. Offload heavy transformations to backend services and enable caching for common responses. Continuously monitor latency, throughput, and error rates. Finally, split responsibilities using multiple gateways or BFFs so no single gateway grows too large or overloaded.

